Mark Zuckerberg famously said, “Move fast and break things. Unless you are breaking stuff, you are not moving fast enough.”

Since then, startups and growth marketers have latched onto the statement. “Move fast and break things” has become a way of life, an ideal for aspiring entrepreneurs who just want to hustle all day, hustle all night like Gary Vaynerchuk.

But how true is that statement, which Mark made many, many years ago?

Does it apply to testing and experimentation? The philosophy of high velocity testing, made popular by a number of different testing and growth experts, certainly makes the case that it does.


What Is High Velocity Testing?

High velocity testing, also known as high tempo testing, is the philosophy that rapid testing and experimentation is the key to major growth. Seems pretty simple, right? To speed up growth, test more things faster.

Sean Ellis of GrowthHackers.com, who has championed the philosophy in recent months, explains…

Sean Ellis, GrowthHackers.com:

“The more tests you run, the more you learn about how to grow your business. So it’s only natural to want to run as many tests per period of time as possible.” (via GrowthHackers.com)

In practice, however, the philosophy is more complicated. For starters, our 2016 State of the Industry Report found that most respondents run fewer than 5 tests a month. 43% only run 1-2 tests per month. Not exactly high tempo.

In fact, only 5% of respondents are running 21+ tests a month, which is roughly 5 tests a week.

Here are just a few other reasons that high velocity testing is easier said than done…

- Optimization budgets are restrictive, resulting in small team sizes and less prioritization.

- Optimization is no small task, making most optimizers very busy people.

- Many optimizers are new to the industry (nearly 20% of all respondents have been working in their CRO role for less than a year) and still learning.

But just because something is difficult doesn’t mean it’s not worth pursuing.

Why Is High Velocity Testing Important?

In recent history, you’ve seen the impact of high velocity testing multiple times. Think of the last company that seemingly appeared out of nowhere overnight. For me, it’s Airbnb. One day, I had never heard anyone even mention the name and the next everyone was talking about it.

At CXL Live, Morgan Brown of Inman confirmed that the key to unlocking that type of growth is high velocity testing…

Morgan Brown, Inman:

“Obviously, some of these companies like Facebook, LinkedIn, Uber, Airbnb are doing something different, right? They’re outpacing their peers by leaps and bounds.

I want to show you how they do it and what their growth process is. I’ve actually studied more than 20 of these fast-growing companies doing detailed research, interviews and case studies with them. They’re actually being turned into case studies to be published at Harvard Business School in the coming term to be taught.

The punchline, after all of that research, is rapid experimentation across the entire company is how these companies win and create breakout growth.”

While Twitter’s growth is currently disappointing investors, it grew rapidly from 2010 to 2012. Why? It had a lot to do with the fact that they exponentially increased their testing velocity. Twitter moved from 0.5 tests per week to 10…

Morgan adds that Twitter’s growth wasn’t the result of some wild growth hacking sorcery…

Morgan Brown, Inman:

“The tests that they were doing wasn’t anything crazy. It’s tests that all of you as optimizers in here would recognize as familiar.

They did many homepage tests, they also did things like fix form validation errors in non-western language countries that led to massive gains. All basic conversion optimization things.

The difference is that at such a scale and without people really noticing it, people turn around and say, ‘Wow, Twitter is growing like crazy. They’re growth hacking.’ Well, no, they just massively increased the velocity of their tests.”

In fact, GrowthHackers.com did something similar to Twitter.

In an article, Sean explains that they had hit a monthly active users (MAUs) plateau. In the first year, they had 90,000 MAUs. Without spending a dollar or increasing the size of their team by even an intern, they grew to 152,000 MAUs in just 11 weeks by dedicating themselves to high velocity testing.

Why is high velocity testing so effective?

As Claire Vo of Experiment Engine explained at CXL Live, it has a lot to do with shifting the focus from testing program outputs (wins and case studies) to inputs (speed and quality)…

Claire Vo, Experiment Engine:

“Those sophisticated CRO teams or teams that are moving along the maturity curve into a sophisticated program, really need to think beyond case studies. Not just because some of those case studies are just bad and don’t give you applicable insights into how to do testing, but they’re also not put in the context of all the other tests that the testing program ran.

So, when you see a million dollar case study, it looks really, really great. But if I told you that million dollar case study came after four years of testing and five million dollars of investment, you’d probably be a little less impressed with it.

I think you really need to take into context not just the test-by-test results, but really, how does your overall testing program perform and are you getting better at actually running your program? Not just are you getting better at individual tests? I think the way to do that is to focus on the inputs.”

What Goes Into High Velocity Testing?

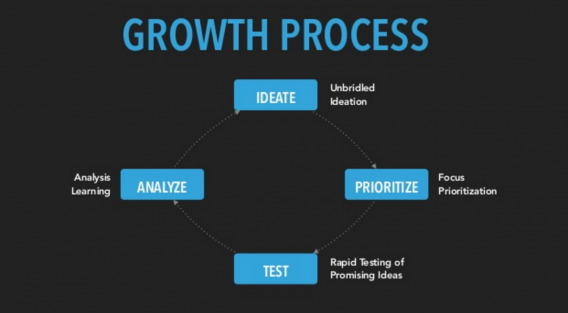

High velocity testing means moving through the growth process at a rapid pace. Morgan shared a basic outline of the growth process with us…

So, to master high velocity testing, you’ll need to go through each stage and optimize for speed.

1. Constant Ideation

If you want to run a lot of tests, you’re going to need a lot of test ideas. Sean explains why that too is sometimes easier said than done…

Sean Ellis, GrowthHackers.com:

“If one person is responsible for all ideation, they generally run out of ideas within a few weeks (at least ideas worth testing). Even within a dedicated team, ideation can become ad hoc and stagnate without a process in place that acts as a springwell of new ideas.” (via GrowthHackers.com)

We all know that the best way to come up with test ideas is to conduct conversion research. Another way to ensure there’s constant ideation is to involve the entire company in the process. Ask the engineers, ask customer support… get ideas from every corner of the company.

Of course, you can also generate ideas based on every step of the funnel. Here’s a look at pirate metrics (AARRR) as designed by Dave McClure of 500 Startups…

For optimizers, those descriptions are a bit different…

- Acquisition: Optimizing emails, PPC ads, etc.

- Activation: Optimizing for that first conversion.

- Retention: Optimizing for the second, third, fourth conversion.

- Revenue: Optimizing for actual money. (This is especially relevant for SaaS and lead gen sites.)

- Referral: Optimizing for current customers who are willing to tell a friend.

When most people think of optimization, they’re usually just thinking of the activation stage. That is, getting an email or a sale or whatever it might be. Fortunately, you have four other stages of the funnel to optimize as well.

If you’re conducting research, involving the entire company and expanding beyond optimizing for activation, you should have no shortage of ideas. In fact, your real issue will be idea overload.

2. Strategic Prioritization

Having a huge backlog of ideas doesn’t exactly sound conducive to speed. If those ideas are prioritized in a meaningful way, however, it is.

Now, prioritizing your ideas can be done in a number of ways. To better understand the process, it’s best to examine how other companies are prioritizing and the frameworks they’ve developed.

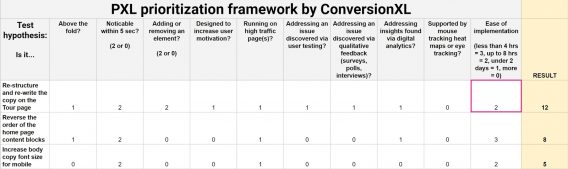

CXL’s PXL

There are a lot of different models for prioritization out there. While we found most of them helpful, we found each one was lacking in one way or another.

We wanted something that forced a binary, yes-or-no decision to remove subjectivity. Here's what we ended up with…

We prefer this framework for three reasons:

- It makes the “potential” or “impact” rating more objective.

- It makes the “ease” rating more objective.

- It helps to foster a data-informed culture.

The model demands that everyone bring data to the prioritization discussion:

- Is it addressing an issue discovered via user testing?

- Is it addressing an issue discovered via qualitative feedback?

- Is the hypothesis supported by mouse tracking, heat maps or eye tracking?

- Is it addressing insights found via digital analytics?

You can read more about the framework and download a copy of the spreadsheet here.
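To illustrate the binary principle, here is a simplified, unweighted sketch. Each of the four data questions above gets a yes or a no, and an idea's evidence score is simply the number of yes answers. The question keys, sample ideas and the `pxl_evidence_score` function are hypothetical, and the downloadable spreadsheet may add or weight criteria differently, so treat this as a sketch of the idea rather than a copy of PXL.

```python
# Simplified, unweighted sketch of yes/no prioritization in the spirit of PXL.
# The keys are hypothetical labels for the four data questions in the text.
EVIDENCE_QUESTIONS = (
    "user_testing",       # Issue discovered via user testing?
    "qualitative",        # Issue discovered via qualitative feedback?
    "mouse_tracking",     # Supported by mouse tracking, heat maps or eye tracking?
    "digital_analytics",  # Addresses insights found via digital analytics?
)


def pxl_evidence_score(answers: dict[str, bool]) -> int:
    """Count the yes answers; a missing answer counts as no."""
    return sum(1 for q in EVIDENCE_QUESTIONS if answers.get(q, False))


# Hypothetical backlog: only the questions answered "yes" are listed.
ideas = {
    "Shorten checkout form": {"user_testing": True, "digital_analytics": True},
    "New hero image": {},
    "Clarify shipping costs": {"qualitative": True, "mouse_tracking": True,
                               "digital_analytics": True},
}

# Highest evidence score first.
for name, answers in sorted(ideas.items(),
                            key=lambda kv: pxl_evidence_score(kv[1]),
                            reverse=True):
    print(pxl_evidence_score(answers), name)
```

Because every answer is binary, two people looking at the same evidence should arrive at the same score, which is the point of the framework.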

GrowthHackers.com’s ICE

Sean and his team at GrowthHackers.com have built their prioritization framework, known as ICE, into Projects, a testing and experimentation program tool. Here’s how it works…

- Impact: If it works, how much of an impact will it have on KPIs and revenue?

- Confidence: How sure are you of that estimated impact?

- Ease: How easy is it to launch the test or experiment?

You give each of the three categories a number from 0 to 10, and the average of the three becomes the idea's overall rating. For example…

- Impact: 7

- Confidence: 10

- Ease: 10

…results in a rating of 9. You’ll want to test that idea that’s rated 9 before moving on to an idea that’s rated 3.

(Note: This framework is inspired by Wayne Chaneski’s ICE framework.)
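The example works out if the overall rating is the average of the three numbers: (7 + 10 + 10) / 3 = 9. Assuming that averaging rule (the Projects tool may compute or round it differently), a backlog could be ranked like this. The `Idea` class and the sample ideas are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # 0-10: effect on KPIs and revenue if it works
    confidence: int  # 0-10: how sure you are of that impact
    ease: int        # 0-10: how easy it is to launch

    def ice_score(self) -> float:
        """Average of the three ratings, e.g. (7 + 10 + 10) / 3 = 9."""
        for rating in (self.impact, self.confidence, self.ease):
            if not 0 <= rating <= 10:
                raise ValueError("ICE ratings must be between 0 and 10")
        return (self.impact + self.confidence + self.ease) / 3


backlog = [
    Idea("Hypothetical idea A", impact=3, confidence=3, ease=3),
    Idea("Hypothetical idea B", impact=7, confidence=10, ease=10),
]

# Test the highest-rated idea first.
for idea in sorted(backlog, key=Idea.ice_score, reverse=True):
    print(f"{idea.ice_score():.1f}  {idea.name}")
```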

Bryan Eisenberg’s TIR

Bryan Eisenberg uses yet another framework for prioritizing ideas, which focuses on three factors…

- Time: How long will it take to execute?

- Impact: What’s the revenue potential, the anticipated outcome?

- Resources: What’s the cost of running the test or experiment?

Each factor is assigned a score from 1 to 5, 5 being the best. So, for example, if a project won’t take long, it would be given a 5 for the “Time” factor.

Next, multiply the three factors. So, the best possible score is 125 (5 x 5 x 5). The higher the score, the better, so start with the ideas that come closest to 125.
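A minimal sketch of the TIR arithmetic, assuming each factor is scored so that 5 is always best (quick, high impact, cheap). The function name and the two sample ideas are hypothetical.

```python
def tir_score(time: int, impact: int, resources: int) -> int:
    """Multiply three 1-5 scores, where 5 is always best.

    time:      5 = very quick to execute
    impact:    5 = highest revenue potential
    resources: 5 = cheapest to run
    """
    if any(not 1 <= s <= 5 for s in (time, impact, resources)):
        raise ValueError("TIR scores must be between 1 and 5")
    return time * impact * resources


ideas = {
    "Hypothetical quick copy tweak": tir_score(time=5, impact=2, resources=5),
    "Hypothetical pricing page redesign": tir_score(time=2, impact=5, resources=2),
}

# Start with the ideas closest to the maximum of 125 (5 x 5 x 5).
for name, score in sorted(ideas.items(), key=lambda kv: kv[1], reverse=True):
    print(score, name)
```

Multiplying rather than averaging punishes a single weak factor hard: an idea scored 5, 5 and 1 gets 25 out of 125, even though its average rating is still above 3.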

3. Smart Testing Management

Here are some more unfortunate statistics from our 2016 State of the Industry survey…

- 26% of respondents meet with their optimization team to discuss CRO “only when necessary”. Another 23% meet no more than once every two weeks.

- 41% of respondents say there is no one directly accountable for conversion optimization at their company.

What that tells us is that testing programs need some management help… fast.

Morgan explains why accountability matters and how to achieve it…

Morgan Brown, Inman:

“Growth is an organizational priority every single minute of every single day, so there has to be accountability for the growth team to this process.

We do it through this weekly growth meeting. It’s not a brainstorming meeting. This is more like an agile sprint planning or retrospective meeting from agile software development mashed together, but it drives the process and accountability.”

In fact, he suggests blocking off an entire hour of your time every week to…

- Review your KPIs and update your growth focus.

- Look at how many tests were launched, how many were not.

- Discuss key learnings from the tests run the previous week.

- Choose tests from the backlog for the upcoming week.

- Create a list of your favorite upcoming tests for future weeks.

- Recognize how many new ideas were submitted and the top contributor for the previous week.

You can download a sample growth meeting agenda, which Morgan shared at CXL Live.

Aside from meeting regularly, it’s important to manage resources and be realistic about your testing velocity…

Sean Ellis, GrowthHackers.com:

“Some growth experiments can be implemented by the marketing team, others by product managers and others require deep engineering skills. Balancing the workload of top priority experiments across different teams makes it much easier to hit our tempo goal. While our goal is to launch at least three tests per week, we generally shoot for five tests per week. That way if we hit roadblocks on some tests, we still hit the tempo goal.” (via GrowthHackers.com)

4. Insights > Wins

After the tests have been run, you need to archive the results for future learning. In my opinion, there are three core benefits to maintaining a thorough archive…

- You won’t repeat tests by accident. (This is a very real issue for big teams and extensive programs.)

- It’s easier to communicate wins and learnings to clients, bosses and co-workers.

- You’ll emphasize learning from all tests, thus improving your knowledge and the quality of future tests.

If you’d like to learn more about archiving your test results, we’ve written on it extensively in Archiving Test Results: How Effective Organizations Do It.

At CXL Live, Claire mentioned Hotwire‘s learn rate. Instead of focusing on the number of tests that result in a win, Hotwire focuses on the number of tests that result in an insight. After all, a test doesn’t need to win for you to learn something about your audience or site.

That’s what an archive is all about: prioritizing learning and sharing those insights across the company.
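Hotwire's exact definition isn't given here, so as an assumption, treat learn rate as the share of archived tests that produced a written insight, win or lose, and compare it with the plain win rate. The `ArchivedTest` record and the sample archive are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ArchivedTest:
    name: str
    won: bool      # did a variation beat the control?
    insight: str   # what you learned; empty string if nothing


def win_rate(archive: list[ArchivedTest]) -> float:
    """Share of tests with a winning variation."""
    return sum(t.won for t in archive) / len(archive) if archive else 0.0


def learn_rate(archive: list[ArchivedTest]) -> float:
    """Share of tests that produced a recorded insight, win or lose."""
    if not archive:
        return 0.0
    return sum(bool(t.insight.strip()) for t in archive) / len(archive)


archive = [
    ArchivedTest("Hypothetical test 1", won=True, insight="Shorter forms lift sign-ups"),
    ArchivedTest("Hypothetical test 2", won=False, insight="Visitors ignore the sidebar"),
    ArchivedTest("Hypothetical test 3", won=False, insight=""),
    ArchivedTest("Hypothetical test 4", won=False, insight="Price anchoring had no effect"),
]

print(f"win rate {win_rate(archive):.0%}, learn rate {learn_rate(archive):.0%}")
```

Here only one test in four won, but three in four taught the team something, which is the gap a learn-rate metric is meant to surface.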

Where Does High Velocity Testing Go Wrong?

In practice, you’re likely to run into a few issues with high velocity testing. It’s your responsibility to anticipate and prepare for these issues in advance.

While there are many, there are three obvious issues you’ll have to deal with: your culture might not support it, validity threats will creep in, and quality could begin to decline.

1. There’s No Culture of Experimentation

Your goal from day one should be to establish a culture of experimentation and data-driven growth. We’ve written an entire article, 6 Clever Nudges To Build a Culture of Experimentation, on how to do just that.

Some examples include…

- Ensuring optimization updates and insights are shared throughout the entire company.

- Encouraging, even gamifying, optimization at all levels.

- Celebrating failure and prioritizing exploration / learning.

Whatever you have to do, do it and do it often. Without a culture of experimentation, high velocity testing will fall flat.

Example: 1% Experiments

Josh Aberant of SparkPost, formerly Twitter, shared the concept of 1% experiments at eMetrics in San Francisco. Essentially, everyone at Twitter is authorized to run 1% experiments (i.e. experiments on 1% of the traffic), not just the growth team. You don’t need approval of any kind.

In fact, if you show up to a meeting with an executive without an insight from a recent 1% experiment, it’s a major faux pas.

Now, you likely don’t have 100 million users that you can run valid 1% experiments on, so I’m not encouraging you to start doing that. What I’m saying is simply that high velocity testing has to be a company-wide commitment.
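For teams that do have the traffic, the mechanics of a 1% split are easy to sketch. One common approach (not necessarily what Twitter uses) is to hash a stable user ID together with the experiment name and expose only users whose hash lands in the lowest 1% of buckets. The function name, the 10,000-bucket resolution and the experiment name are hypothetical.

```python
import hashlib


def in_experiment(user_id: str, experiment: str, percent: float = 1.0) -> bool:
    """Deterministically expose about `percent`% of users to an experiment.

    Hashing the user ID with the experiment name gives each user a stable
    bucket from 0 to 9,999 for that experiment, so they get the same
    experience on every visit, and different experiments draw different
    slices of traffic.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 10_000
    return bucket < percent * 100


exposed = sum(in_experiment(str(uid), "hypothetical-experiment")
              for uid in range(100_000))
print(exposed)  # roughly 1,000 of 100,000 users
```

Because assignment depends on a persistent user ID rather than a cookie, logged-in users stay in the same bucket even if they clear their cookies.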

2. Tests Are Called Too Soon & Other Validity Threats

If you read CXL regularly, you’re familiar with the concept of validity threats and sample pollution. If not, take the time to read more about how to minimize A/B test validity threats and how to manage sample pollution. It’ll be worth it, I promise.

High velocity testing can lead to tests being called too soon in the interest of speed. Of course, that’s a big no-no. Ton Wesseling of Testing.Agency explained why at last year’s EliteCamp…

Ton Wesseling, Testing.Agency:

“Your test has a fixed test length. You don’t stop it when it’s significant, you don’t let it run because it’s not significant. You calculate it upfront.

This test… we’re going to run it for 2 weeks, we’ll have so many conversions, we know we have to [detect] an impact of 8% or more (that’s what we designed the test for).

Then we test it for two weeks, stop it and look at the results.”

You can use the CXL AB test calculator to calculate how many people you need to reach before you can call your test one way or the other. Remember to test in full week increments to ensure you have a representative sample (e.g. day of week and time of day can have a major impact on results).

Of course, if you go too far in the opposite direction (i.e. call your test too late or wait for months to call it because of low traffic), you run into a similar problem. Ton puts it well…

Ton Wesseling, Testing.Agency:

“What people tend to do is delete their cookies. On average, you will lose 10% of your cookies in two weeks. That means if people re-enter your tests when they don’t have a cookie, they have a 50% chance of ending up in the wrong variation.

So, if you go on for one year, you know for sure both populations will be the same. There will be no significant difference between both groups; there will be no outcome.

That’s the reason why if you run an A/B test pretty long, you always see those conversion lines going more towards each other, more towards each other… you end up having nothing. At first, it made an impact, but in the end, it’s the same. You stopped the test too late. Don’t test over 4 weeks, in my opinion.

But calculate your cookie deletion; it can differ. If you have a login, awesome. You can test for many, many weeks.”

When you’re moving quickly, it’s easier for validity threats and sample pollution to rear their heads. Be sure you remain quick, but vigilant.

3. Quality Begins to Suffer

Earlier, I mentioned two inputs: speed (quantity) and quality. Typically, when you shift focus to one, the other begins to suffer. If you want your high velocity testing program to work, you’ll need to maintain both. To be frank, it doesn’t matter how quickly you test bullshit ideas.

When it comes to quality, Claire talks about three metrics you’ll want to focus on…

Claire Vo, Experiment Engine:

“I think these are the things we really need to think about.

1. Not just, ‘What is the success of any individual test?’ but am I running effective tests? So, are my tests as a whole, as a program, effective? What’s my win rate? What’s my average lift amount? What’s the expected value of the test that I’m going to run?

2. Am I running tests effectively? So, am I putting the right amount of resources, the correct type of resources, am I spending the right amount of time on tests and my ROI is positive?

3. And I think this is the thing that’s so important. Even if you’re tracking these things, if you don’t trend them over time and you’re not getting better at improving your quality, there’s probably something there or you’re getting worse.”

So, it’s less about the quality of individual tests and more about the quality of the testing program over time. After all, would you want the entire CXL community judging your CRO know-how based solely on the results of your last test?

Before you start your high velocity testing program, chart your quality based on the metrics above. Then, be aware of how quality is trending over time. You’ll know as soon as it begins to dip, so you can take immediate action.
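Claire doesn't give formulas, so the definitions in this sketch are assumptions: win rate is winners divided by tests run, average lift is the mean lift among winning tests, and expected value is their product (the lift you might expect from the next test). It groups a hypothetical results log by month and flags months where expected value fell.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    month: str    # e.g. "2016-05"
    won: bool
    lift: float   # relative lift of the winning variation, 0.0 if no winner


def quality_by_month(results: list[TestResult]) -> dict[str, dict[str, float]]:
    """Win rate, average winning lift and expected value for each month."""
    months: dict[str, list[TestResult]] = {}
    for r in results:
        months.setdefault(r.month, []).append(r)

    report = {}
    for month in sorted(months):
        tests = months[month]
        wins = [t for t in tests if t.won]
        win_rate = len(wins) / len(tests)
        avg_lift = sum(t.lift for t in wins) / len(wins) if wins else 0.0
        report[month] = {
            "win_rate": win_rate,
            "avg_lift": avg_lift,
            "expected_value": win_rate * avg_lift,  # assumed definition
        }
    return report


def quality_dips(report: dict[str, dict[str, float]]) -> list[str]:
    """Months whose expected value fell below the previous month's."""
    months = list(report)
    return [m for prev, m in zip(months, months[1:])
            if report[m]["expected_value"] < report[prev]["expected_value"]]


results = [
    TestResult("2016-04", True, 0.10), TestResult("2016-04", False, 0.0),
    TestResult("2016-05", True, 0.06), TestResult("2016-05", False, 0.0),
    TestResult("2016-05", False, 0.0),
]
report = quality_by_month(results)
print(quality_dips(report))
```

In this made-up log, May ran more tests than April but with a lower win rate and smaller lifts, so it is flagged as a quality dip even though velocity went up.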

How to Increase Your Testing Velocity

So, how do you go about increasing your testing velocity responsibly? According to Claire, it comes down to three factors: testing capacity, testing velocity and testing coverage. She explains…

Claire Vo, Experiment Engine:

“When I talk to teams about their testing programs, this is what I hear a lot. When I ask, ‘What’s the quantity of your testing program?’, I hear this. ‘We run x tests per month.’ So, we run 5 tests per month, we run 1 test per month, we run 50 tests per month.

I hear this a lot. I think what people usually mean when they say this in regards to their testing quantity is, ‘We run x tests per month… usually.’

Like, most of the time, we get 4 tests out the door every month, but June doesn’t really count and you shut down for December and last week was my birthday… I didn’t really want to work that hard.

So, really, there’s not a lot of rigour around tracking test quantity. And I think instead you should focus on these metrics.

1. How many tests can I run? So, before you even talk about how many tests you are running, what’s the possibility in the world for how many tests you can run… what’s your testing capacity?

2. How many tests are you running? What’s your testing velocity and coverage?

3. And then, the thing that I think we don’t track is: Am I getting any better? Am I trending this over time and am I getting better at it?

4. When I’m not doing tests, why? If it’s your birthday, fine, but at least be honest to yourself in reporting on it.”

Here’s how you can answer those very important questions that Claire is asking.

1. Testing Capacity

Your testing capacity is pretty simple. There are 52 weeks in a year, so you divide that by your average required test duration (in weeks). Then you multiply that number by the number of different pages / funnels that you can test at one time.

So, for example, if my traffic level typically indicates that I need to run tests for two weeks and I have ten different lead gen pages that I can test simultaneously, my testing capacity is 260 (52 / 2 * 10). That’s five tests a week.

If, for any reason, you are not using your full testing capacity, you’re losing money. So, calculate it and commit to a testing velocity that will ensure you’re not wasting your capacity.

2. Testing Velocity

How you measure your testing velocity depends on just how rapid your testing and experimentation is, Claire explains…

Claire Vo, Experiment Engine:

“Once you have your testing capacity, you really need to set a goal and then start tracking how well you’re going towards that testing capacity. That’s what we call testing velocity. So, how many experiments or tests are run per time period?

For very high velocity testing programs, I think you can measure this on a weekly basis. For very, very high velocity testing programs, you can measure this on a daily basis, but you probably also need a drink at the end of the day because that’s a lot of data to track.

But weekly, I think, for high traffic sites and high velocity testing programs is reasonable. And then, I think, for low traffic sites monthly is a really great way to track testing velocity.

Even if you’re getting one out the door every month on average, really looking at that month-over-month and saying, ‘When am I dropping to 0? When am I really able to do 4?’ Really figure out what your trends are on your testing velocity are going to let you really understand how you’re performing to your testing coverage-able.

I think the bonus is doing trend lines on this. So, if you want to get real fancy and impress me, do a tracking of the trend over time towards your goal.”

A key thing to note here is the trend over time. Is your velocity staying the same? Decreasing? Increasing? It’s not enough to know how many tests you’re running every month, you need to know whether that number is higher than it was the previous month. If you’re not getting better, you’re getting worse.

3. Testing Coverage

Once you know how many tests you can run (testing capacity) and how many tests you are running (testing velocity), all that’s left is your testing coverage. Your testing coverage answers an important question: On what percentage of testable days are you running a test?

Claire elaborates…

Claire Vo, Experiment Engine:

“How many of us know how much traffic we’re wasting on a regular basis? How many of us truly know, ‘I’ve wasted traffic over the past month’?

I think you really need to track that by measuring what’s called testing coverage. So, looking at all of the testable days in a calendar year. Maybe blacklist some days because those days are off limits for testing. The holidays for eCommerce, things like that.

But what percentage of testable days do you actually have a test live? This is a really powerful metric because what it calls out to you is the waste in your testing program. And you can really look at your program and say, ‘What is the waste of traffic I will tolerate in my testing program?’”

How many days has it been since you had zero tests running? When you’re not running a test, you have to ask yourself why. Why are you wasting time and traffic not testing? You don’t get that traffic back when you’re finally ready to launch a test.

The goal, of course, is to have 100% testing coverage. Actually calculating waste can be eye-opening and inspire that culture of experimentation that we talked about above.

Conclusion

Should you be moving fast when it comes to testing and experimentation? Absolutely. Should you be breaking things? No, quality is just as important as its sister input, quantity. Even Zuckerberg revised his philosophy to “move fast with stable infrastructure”.

To implement a high velocity testing program, you have to commit to…

- Constant ideation, which stretches across the entire company and covers the entire funnel.

- Strategic idea prioritization using one of the many frameworks available today.

- Smart testing management, which means weekly meetings to create accountability and managing resources effectively / realistically.

- Prioritizing learning and sharing thoroughly archived insights across the company.

- Avoiding the various pitfalls of high velocity testing (e.g. declining quality, validity threats).

You’ll also need to know three very important metrics…

- Testing capacity, which is how many tests you can possibly run.

- Testing velocity, which is how many tests you are running weekly / monthly.

- Testing coverage, which is the percentage of testable days on which you’re running a test.
