How to Build Robust User Personas in Under a Month

Customer personas are often talked about in marketing and product design, but they’re almost never done well.

A/B testing is fun. With so many easy-to-use tools, anyone can—and should—do it. However, there’s more to it than just setting up a test. Tons of companies are wasting their time and money.

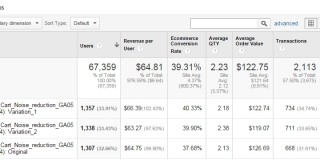

A/B testing tools like Optimizely or VWO make testing easy, and that’s about it. They’re tools for running tests, not for post-test analysis. Most testing tools have gotten better at analysis over the years, but they still can’t match what you can do with Google Analytics, which is nearly everything.

Just when you start to think that A/B testing is fairly straightforward, you run into a new strategic controversy.

This one is polarizing: how many variations should you test against the control?

The traditional (and most used) approach to analyzing A/B tests is to use a so-called t-test, which is a method used in frequentist statistics.

While this method is scientifically valid, it has a major drawback: if you only implement significant results, you will leave a lot of money on the table.
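To make the frequentist approach concrete, here is a minimal sketch of a two-sample (Welch) t statistic for the difference in conversion rates between a control and a variation. The function name `welch_t_test` and its parameters are illustrative assumptions, not anything from the post. The p-value uses a normal approximation to the t distribution, which is only reasonable for the large sample sizes typical of A/B tests.

```python
import math
from statistics import NormalDist


def welch_t_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variation (b).

    Hypothetical helper for illustration. Each visitor is treated as a
    0/1 outcome, so the sample mean is the conversion rate.
    Returns (t_statistic, two_sided_p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b

    # Unbiased sample variance of 0/1 data: p * (1 - p) * n / (n - 1)
    var_a = p_a * (1 - p_a) * n_a / (n_a - 1)
    var_b = p_b * (1 - p_b) * n_b / (n_b - 1)

    # Standard error of the difference in means (unequal variances)
    se = math.sqrt(var_a / n_a + var_b / n_b)
    if se == 0:
        raise ValueError("no variation in either group; test is undefined")

    t = (p_b - p_a) / se

    # Assumption: large samples, so the t distribution is close to normal
    p_value = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, p_value


# Example: 200 of 10,000 control visitors convert vs. 250 of 10,000 in the variation
t_stat, p_val = welch_t_test(200, 10_000, 250, 10_000)
print(f"t = {t_stat:.2f}, p = {p_val:.4f}")
```

A result is conventionally called significant when the p-value falls below a threshold chosen in advance, such as 0.05. The drawback described above comes from acting only on results that clear that bar.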

As a digital analyst or marketer, you know the importance of analytical decision making.

Go to any industry conference, blog, meet up, or even just read the popular press, and you will hear and see topics like machine learning, artificial intelligence, and predictive analytics everywhere.

Because many of us don’t come from a technical/statistical background, this can be both a little confusing and intimidating.

But don’t sweat it. In this post, I will try to clear up some of this confusion by introducing a simple yet powerful framework – the intelligent agent – which will help link these new ideas with familiar tools and concepts like A/B testing and optimization.

Web personalization is all the rage, but are you trying to run before you’ve learned how to walk?

![The Hard Life of an Optimizer - Yuan Wright [Video]](https://cxl.com/wp-content/uploads/2015/06/yuanw1-320x160.jpg)

Here’s another presentation from CXL Live 2015 (sign up for the 2016 list to get tickets at pre-release prices).

While optimization is fun, it’s also really hard. We’re asking a lot of questions.

Why do users do what they do? Is X actually influencing Y, or is it a mere correlation? The test bombed – but why? Yuan Wright, Director of Analytics at Electronic Arts, will lead you through an open discussion about the challenges we all face – optimizer to optimizer.

![Your Test is Only as Good as Your Hypothesis [Video]](https://cxl.com/wp-content/uploads/2015/06/maagaard-1024x4771-320x160.jpg)

CXL Live 2016 is coming up next March (get on the list to get tickets at pre-release prices). We’re going to publish video recordings of the previous event, and here’s the first one.

You run A/B tests – some win, some don’t. The likelihood of the tests actually having a positive impact largely depends on whether you’re testing the right stuff. Testing stupid stuff that makes no difference is by far the biggest reason for tests that end in “no difference”.

You have a hypothesis and run a test. Result – no difference (or even a drop in results). What should you do now? Test a different hypothesis?