You have a hypothesis and run a test. Result – no difference (or even a drop in results). What should you do now? Test a different hypothesis?

Not so fast.


A fairly common scenario

Let’s imagine that you conducted a qualitative survey, and a large number of respondents voiced concerns about your credit card payment page – they didn’t feel it was secure enough (even though it actually was secure). It caused anxiety in people, made them worry about the safety of their credit card number.

So now you would have solid data about the problem. It’d be a pretty safe bet to assume that by increasing the perception of security on the payment page, you should get more completed payments.

You create a treatment – add some security logos on there, some security-focused language – and run a test. The result: no difference.

So – does that mean your hypothesis was wrong?

Maybe, but the real question you should ask yourself is this: how many different ways are there to improve the perception of security on a page? How many different ways could we design a treatment addressing security concerns?

The answer: infinite.

So if you tested one possible implementation out of an infinite number of possibilities, what are the odds that you nailed it in your very first attempt? You would need a crystal ball to nail it in your first go.

The same goes for when your first test ended in an uplift. So you got a 5% revenue lift or whatever. How many better possible alternatives are out there?

If you test your hypothesis only once, you’re doing it wrong

Of course, not all hypotheses are equal. “By making the CTA button pink, we’ll get more clicks and thus more purchases” might qualify as a hypothesis, but in 99% of cases it will still be a stupid idea.

In order to keep running tests on a hypothesis, you need to have data that backs it up. The more different types of data inputs you have to support the hypothesis, the more confidence you can have in it. You can use the ResearchXL framework or others to gather useful data.

When to have confidence in your hypothesis

While we can never be 100% certain of a hypothesis (even if the test wins, we won’t know why it actually worked; we’ll just have some possible explanations), there are ways to be more confident – so you’d know whether it makes sense to keep iterating.

This is what you’d like to see:

- In your heuristic analysis, you notice that the product page does a poor job communicating the value of a product (small, low-quality images, vague copy etc).

- Web analytics data shows that the product page visit to ‘add to cart’ ratio is around 1% – quite bad

- People in your user tests have problems with the page

- On-page survey responses point out clarity issues

- Post-purchase surveys tell you that lots of buyers hesitated because they weren’t sure the product does what they need

- Click map data indicates that a massive percentage of visitors click through all the product images – possibly trying to get a better sense of it

In this scenario, we’d have 6 different sources of data possibly confirming our hypothesis that by getting better product images and more detailed product copy, we might be able to get more cart adds.

If you are confident in your hypothesis, don’t give up. There might not be anything there (your conclusions may be wrong), but there might be a lot of money there.

Examples

Case study #1

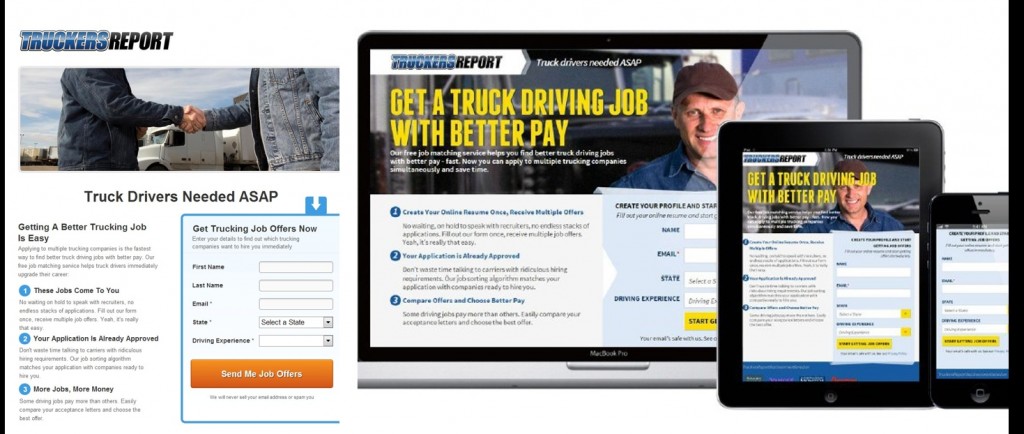

We were optimizing a landing page for truck drivers (goal: get more job applications for trucking companies).

After conducting heuristic analysis on the existing landing page, we identified that it was lacking in communicating value. Analytics data told us that half the people used it on their mobile devices – but it wasn’t a responsive page. And so on.

Based on our research, we designed a treatment (on the right):

The new treatment was value-focused, mobile responsive and looked better too.

We tested them against each other. The result: no difference.

What!?

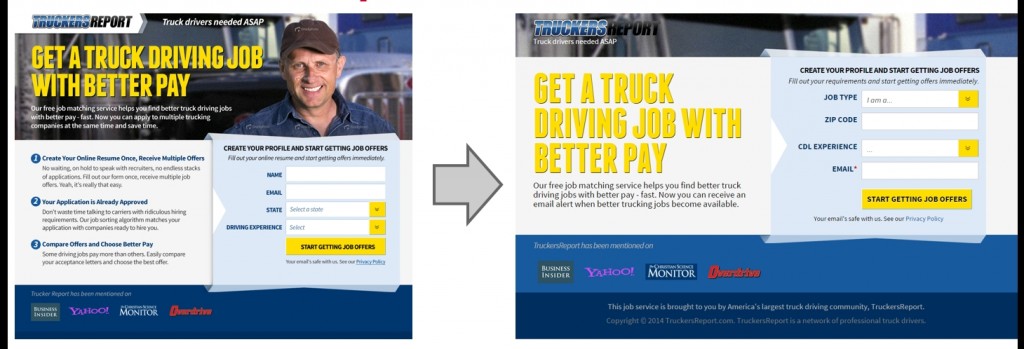

Was our hypothesis wrong? We looked at the data again and kept iterating. After four follow-up tests our original treatment was changed into the one on the right:

The result: 79.3% uplift.

We simplified the content presentation (half the people were using it on mobile phones), removed content, played with form fields – but the underlying data and hypothesis remained unchanged. Read the full case study here.

Case study #2

Here’s an ecommerce site we worked on:

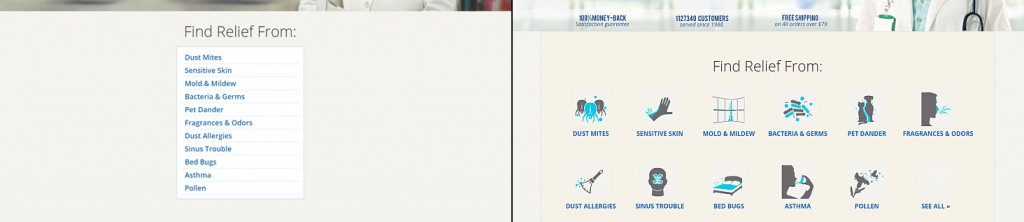

Based on our initial research into how people buy allergy relief products, we noticed that a lot of the users start their browsing with the condition they have in mind (e.g. find relief from dust allergies). So we designed the “Find Relief From” menu you can see on the left.

Are people using it? If so, how many and what’s the impact on their behavior? Those are the kinds of questions you should ask about all the features and widgets on your site.

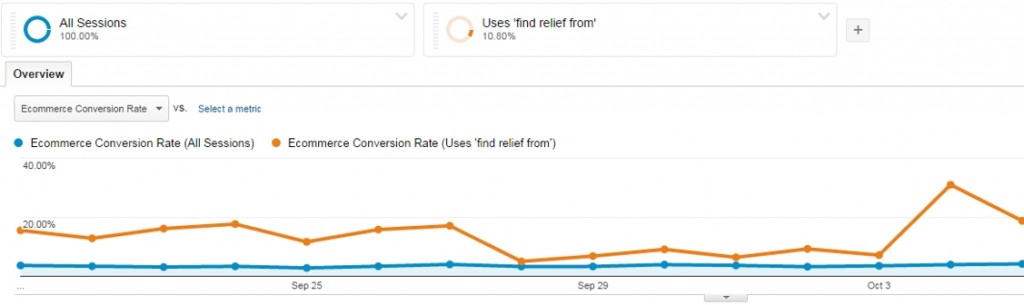

We checked Google Analytics, and this is what we saw:

I can’t publish the actual numbers, but we learned that A) ~10% of the users used the feature, and B) those who did converted ~4x better.

4x is a lot. We didn’t know if it was causation or correlation. But we asked ourselves: what would happen if we could get 20% of the users to use this feature? Would we make more money?
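To get a feel for what was at stake, here is a rough back-of-the-envelope sketch of that question. It rests on a big assumption that we could not verify at the time: that the ~4x difference is causal and would also hold for the extra users nudged into using the menu. The function names are made up for illustration.

```python
def overall_rate(base_rate: float, usage_share: float, multiplier: float) -> float:
    """Blended conversion rate when `usage_share` of visitors use the feature
    and convert at `multiplier` times the rate of everyone else."""
    return base_rate * ((1 - usage_share) + multiplier * usage_share)


def relative_uplift(share_now: float, share_target: float, multiplier: float) -> float:
    """Relative change in overall conversion if feature usage moves from
    share_now to share_target. The base rate cancels out, so 1.0 is used.
    ASSUMPTION: the multiplier is causal and applies to the new users too."""
    now = overall_rate(1.0, share_now, multiplier)
    target = overall_rate(1.0, share_target, multiplier)
    return target / now - 1


# ~10% usage today, 20% as the goal, ~4x better conversion for users of the menu
uplift = relative_uplift(0.10, 0.20, 4.0)
print(f"{uplift:.1%}")  # prints 23.1%
```

If the 4x is only correlation (say, people who use the menu were already more motivated buyers), the real number could be far lower, which is exactly why this has to be tested rather than assumed.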

How to get more people to use it? We tested using visual hierarchy, making the menu orange (sitewide):

The result: an almost 12% drop in conversions and an even bigger drop in revenue per visitor.

What? Was our hypothesis wrong?

We checked the analytics stats, and it turned out that the actual usage of the menu had not changed. We won’t ever fully know what caused the drop; our best theory is that the orange menu served as a distraction.

Let’s try another approach. We devised these two variations, with the menu options repeated under the hero image:

The variation on the right got more people to use the feature and improved overall revenue per visitor by 5%.

We’ve tested multiple different iterations since, and gotten more gains along the way.

Next time someone tells you “we tested that, didn’t work”…

… ask them how many iterations of the same idea they tested.

Nobody has a crystal ball, that’s why we test stuff. If you judge an idea (or an optimizer) based on the first test, you essentially expect clairvoyance. Have to break it to you – there is no such thing, at least in optimization.

Run multiple follow-up tests, test as many variations at once as your traffic allows – you can’t know in advance which specific implementation is going to work the best.
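How many variations your traffic allows can be estimated roughly. The sketch below is one possible way to do it, not a prescribed method: it uses the standard normal-approximation sample size formula for comparing two conversion rates, and it ignores any correction for comparing several variations at once. The function names, the 5% baseline, the 20% relative lift and the 40,000 visitors are all hypothetical numbers chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per arm to detect `relative_lift` over
    `baseline` with a two-sided test (normal approximation)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def max_variations(total_visitors: int, baseline: float, relative_lift: float) -> int:
    """How many variations (besides the control) the available traffic can
    support. SIMPLIFICATION: no multiple-comparison correction is applied."""
    arms = total_visitors // sample_size_per_arm(baseline, relative_lift)
    return max(arms - 1, 0)


# Hypothetical: 40,000 visitors during the test, 5% baseline, 20% relative lift
print(sample_size_per_arm(0.05, 0.20))    # roughly 8,000 visitors per arm
print(max_variations(40_000, 0.05, 0.20))  # prints 3
```

The smaller the lift you want to be able to detect, the more visitors each arm needs, so low-traffic sites are usually better off testing fewer, bolder variations per round.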

Conclusion

Iterative testing is the name of the game.

Run as many follow-up tests as needed for your data-driven hypothesis, and follow up on your winning tests the same way. If you tested something that worked, you might have discovered something that matters. Test different iterations of it to get even more results.

A better alternative is out there.

Very important concept Peep, great examples too.

The one thing that’s iffy for me is when do you stop? When are the potential gains from further improvement (on one specific test) offset by the opportunity cost?

Do you have some way of determining this, or do you typically rely on intuition?

There is no magic number, just a bunch of variables to consider:

– how confident are you in the hypothesis?

– how long will each test run? (cost of learning)

– what other tests might I run instead? any of them high potential?

I’d say typically I run 3-4 follow-up rounds, but it always depends on the specific context and the answers to the questions above.

Peep, love this post for 2 reasons:

1) It tells you not to give up on your hypothesis just cause one test showed no lift

2) BUT, you do this NOT for any random gut feeling “Guys, I really think the photo option is better”, but only when you have multiple datapoints that support the hypothesis.

Do you guys almost always have all 6 hypothesis “confidence” points you list above, or just a subset of the 6?

Thanks. Well you don’t necessarily need all 6 inputs.

For instance if you see most people in your user tests struggle with X, that’s kind of enough. (Of course some usability problems should just be fixed, not tested – depends on what the exact issue is).

The stronger / clearer the data from a given source, the more confidence you can have in the hypothesis even with fewer inputs.