Developing organizational trust in your process and results is the most critical piece of conversion optimization.

An organization that has been burnt by broken tests or disillusioned by a trough of losing tests won’t be able to maintain enthusiasm for its testing program. And a culture of experimentation is important, not only for your job security, but for the effectiveness of your testing program.

The thing is, teams keep making the same mistakes. I’ve seen them countless times, and without fail, they destroy organizational support. Because you’re a smart marketer, hopefully you’ll heed the warnings and avoid the following five mistakes.

1. Making Decisions Using Opinions Instead of Data

While knowledgeable and likely well-informed about the online landscape, CRO teams are not necessarily experts in site design and shouldn’t be the uncontested keepers of “best practices.”

If tests are developed because the CRO team thinks that kitschy headlines are more effective, or a strategist has a fondness for safety-vest-orange buttons, your organization’s testing strategy may be biased before it even gets going.

“Opinion is really the lowest form of human knowledge. It requires no accountability, no understanding.”

– Bill Bullard

Fortunately, people and consumers are predictable (some would say Predictably Irrational) enough to research and study. Using empirical psychological research to feed test ideas can lead to real conversion improvements – or at least create a place to start in your team’s test ideation process.

Confirmation bias is the tendency to read and interpret new evidence as a tool to confirm pre-existing beliefs, theories, and opinions. People latch onto their opinions and will cherry-pick data that supports those opinions, even if there is conflicting evidence.

Cherry-Picking: More Common Than You’d Think

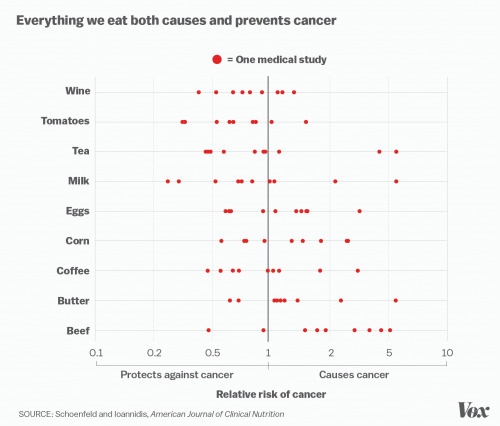

Take, for example, common foods and their reported associations with both increased and decreased cancer risk. In a study published by the American Society for Nutrition, researchers identified 40 common foods for which published studies and data claim they prevent cancer, while other published studies and data claim the exact opposite.

Someone with an agenda could easily cherry-pick the data to support their cause. If you can do it with something as serious as cancer risk, rest assured, you can cherry-pick your data in conversion optimization.

If an A/B test is designed around a single opinion backed by less-than-convincing evidence like this, at best it may yield valuable results by chance, which can actually backfire when the guy with the opinion decides he’s clairvoyant because he got one “right.”

At worst, you’re shooting blindfolded and will probably miss more often than not. And wasting valuable time and resources to develop experiments based on these opinions will quickly erode the organizational support for your team’s pursuits.

Furthermore, pitting egos (in the form of opinions) against each other is a solid way to ensure a faulty optimization program.

As most marketers know, the executive in the room has likely built a career on being right and knowing their craft. Data doesn’t care about opinions, though, and if the data shows a different answer than the strong-willed executive expected, you’ve got a conflict.

There’s probably no better way to trigger a backfire effect (and thus kill a testing program in its infancy).

So how can you avoid these pitfalls? Some strategies…

Follow case studies and empirical research

While not all case studies can be trusted, they can provide inspiration and, in the right context, some clarity on industry best practices. If you base your whole optimization strategy on WhichTestWon, you’ll probably be led astray, but reading up on how other programs operate can be helpful.

In addition, dozens of academic UX articles are published each month, and other empirical research and education is available from sources such as CXL Institute. As they say, “all ideas are perishable,” so keeping up with what’s working will keep you ahead of the competition.

Create an internal repository of your test results

Archiving test results is so important.

For one, you’ll avoid accidentally testing the same thing over and over again. It is also a good way to analyze the results you’ve seen and base iterations or new experiments on a solid foundation of previously gathered data. You can build an internal database of your team’s testing, or use a tool like Experiment Engine.
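If you build the repository yourself, even a very small data structure can catch accidental repeats before a test is scheduled. The sketch below is a minimal Python example under assumed, hypothetical field names (`page`, `element`, `hypothesis`, `result`, `ended`); it is not modeled on Experiment Engine or any other product.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ExperimentRecord:
    # Hypothetical fields; track whatever your team actually needs.
    page: str
    element: str
    hypothesis: str
    result: str  # e.g. "win", "loss", "inconclusive"
    ended: date


def _key(page: str, element: str) -> tuple[str, str]:
    # Normalize so "/Pricing " and "/pricing" count as the same page.
    return page.strip().lower(), element.strip().lower()


@dataclass
class ExperimentArchive:
    records: list[ExperimentRecord] = field(default_factory=list)

    def add(self, record: ExperimentRecord) -> None:
        self.records.append(record)

    def prior_tests(self, page: str, element: str) -> list[ExperimentRecord]:
        """Earlier tests on the same page element, oldest first."""
        key = _key(page, element)
        matches = [r for r in self.records if _key(r.page, r.element) == key]
        return sorted(matches, key=lambda r: r.ended)


# Usage: check the archive before proposing a new test.
archive = ExperimentArchive()
archive.add(ExperimentRecord("/pricing", "CTA button",
                             "Orange CTA lifts clicks", "loss", date(2016, 3, 1)))
for past in archive.prior_tests("/Pricing", "cta button"):
    print(f"Already tested on {past.ended}: {past.hypothesis} ({past.result})")
```

Keying on page and element is a deliberately coarse choice: it surfaces anything previously tested in the same spot, and a person then decides whether the new idea is a genuine iteration or a repeat.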

Do competitor site research

There are lots of ways to pull insights together for tests – one of which is to spy on your competition.

Don’t straight up copy your competitors (they probably don’t know what they’re doing either), but by peeking at their strategies, you can get a baseline idea of what the industry is doing.

This also creates an environment where opinions from other organizations are considered valid, which can facilitate conversation without ego.

If you’ve studied business at all in an academic context, you know how to do competitive research. It’s not much different online, but you have different tools available to you, and the inputs are going to be slightly different.

Invesp has a great guide on how to do competitive CRO research.

Note: Don’t assume that your competitor has it right all the time. Their audience may look completely different from yours, or their data may not even be accurate. Use competitor analysis as a guide, but don’t lose focus on your own site.

Follow the scientific method

Follow the scientific method: form a hypothesis, or better yet multiple hypotheses, and test against them.

As discussed by researchers at UC Berkeley, having multiple hypotheses will help keep personal egos at bay, since “a scientist with a single hypothesis has her ego at stake, and thus resists counter hypotheses made by other scientists.”

When employing the scientific method with due rigor, it falls on the data to confirm or deny the hypothesis instead of proving a particular person right or wrong.

2. Declining to Understand Who Your Users Are Before Jumping Straight into A/B Testing

Is your testing random and general, or is it addressing your actual users’ actual needs? Before you can address that question, you must first understand:

- who your users are

- what they’re trying to do with your site

How is your organization supposed to value your optimization recommendations if you aren’t sure who you’re optimizing for?

Learn About Your Users Before High-Velocity Testing

Avoid jumping into a high-velocity testing schedule without a specific rationale for your tests and a solid understanding of your users.

As arbitrary as most companies’ “personas” are, if you create customer personas from real data, you accomplish two things:

- You can create better tests with a more empirical understanding of your users.

- You can communicate this understanding to execs, which solidifies trust in your testing program.

How do you do user research and personas the right way?

If you’re lucky, your organization will have data scientists or analysts who can help you understand what this landscape looks like. They’ll have sophisticated methods like cluster analysis, cohort analysis, and advanced data mining techniques to learn about your customers.

Otherwise, you can get some pretty clear insights from a good understanding of Google Analytics. Even better, use a tool like Amplitude or Heap Analytics to gather data on behavioral cohorts and tease out correlations across different user groups.
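To make “behavioral cohorts” concrete: given a raw export of (user ID, event name) pairs, you can split users by whether they ever performed some behavior and compare how often each group converted. This is a minimal sketch assuming a hypothetical export format and hypothetical event names such as `used_comparison_tool` and `purchase`; it does not use the Amplitude or Heap APIs.

```python
from __future__ import annotations


def conversion_by_behavior(events, behavior: str,
                           goal: str = "purchase") -> dict[str, float | None]:
    """Split users by whether they ever performed `behavior`, then return the
    share of each group that also reached `goal` (None for an empty group)."""
    did_behavior, converted, all_users = set(), set(), set()
    for user_id, event in events:
        all_users.add(user_id)
        if event == behavior:
            did_behavior.add(user_id)
        if event == goal:
            converted.add(user_id)
    groups = {"did": did_behavior, "did_not": all_users - did_behavior}
    # Each user is counted once per group, no matter how many events they have.
    return {
        name: len(members & converted) / len(members) if members else None
        for name, members in groups.items()
    }


# Hypothetical event export.
events = [
    ("u1", "used_comparison_tool"), ("u1", "purchase"),
    ("u2", "used_comparison_tool"),
    ("u3", "purchase"),
    ("u4", "page_view"), ("u5", "page_view"),
]
print(conversion_by_behavior(events, "used_comparison_tool"))
```

A gap between the two groups is a correlation, not proof that the behavior causes conversions. It is a good source for a test hypothesis, and the A/B test is what checks it.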

With these analytics tools, you can also do a pretty good job of mapping out user journeys through your site. Couple this with session replays and user testing, and maybe some heat maps, and you’ve got a much clearer picture of how people are using your site.

Add in on-site surveys (you can cluster these as well) and customer surveys, and you’re ahead of most of your competitors in understanding 1) who your customers are and 2) what they want to accomplish.

3. Assuming That All Website Visitors Think the Same Way

Not all customers in a segment or demographic think the same way, so stop seeking simplistic narratives.

Treating existing models and theories (for example, the four modalities of a consumer) as fact is a slippery slope toward invalid assumptions about your audience. These models and theories are helpful and provide a good foundation, but take care not to treat them as “CRO law.”

For example, you might have a group of 35-year-old males from the same city who all need the same product (that your site happens to sell!). However, concluding that they all need to use your site in the same way is a pitfall that can lead your CRO team astray.

In a simple example, Male A may want to purchase today, while Male B needs to see if he should fix the product he already has before purchasing a new one. Meanwhile, Male C may still be in the researching phase to see which product is right for him. All of these users are going to use the site in different ways and have different opportunities for optimization.

In fact, the same person could fall into the “competitive” bucket at 1pm today and shift into the “spontaneous” bucket at 8pm on Thursday. People are more complicated than their astrology signs or the labels we create for them.

Clearly, it’s crucial to understand the specifics of each customer and optimize for them… but how?

Use the same research methods recommended in section 2 to identify how customers are flowing through the site, what portions are confusing or complex to them, where users are diverting from the intended site path, and optimize towards the “desire paths” that your users have created for themselves.

Essentially, seek empirical support – even if you use personas or frameworks for inspiration.

User test radical redesigns in a small focus group or by using online tools (UsabilityHub, etc.) to get feedback prior to launching an A/B test.

For your organization, it’s reassuring that your A/B testing is based on feedback that you’ve received from external sources, not just opinions, arbitrary frameworks, or “throwing things against the wall to see what sticks.” An added benefit of using focus groups or user research software is that the user is able to tell you the “why” of their interactions, instead of you needing to make your best guess at their motives.

Use session recording software (Mouseflow, Hotjar, etc.) to actually watch your users interact with your site. You’ll see the pain points your users run into, watch them try to click things that aren’t buttons, and then be able to fix those issues to make the user experience as smooth as possible.

And of course, try to learn as much about consumer psychology as you can. The journals are good (but boring), so read some of the popular psychology books:

- Predictably Irrational

- Thinking, Fast and Slow

- Waiting for Your Cat to Bark?

- Hooked: How to Build Habit-Forming Products

4. Forgetting (or Neglecting) to QA the Test that You’ve Set Up

Nothing is more disappointing than a well-researched hypothesis that fails because of a quality assurance issue with the test itself.

It’s exciting to launch tests at a high velocity, but it’s equally important to maintain quality standards (avoid breaking things). Pushing tests live without confirming that they are going to yield accurate data is a quick path to losing your organization’s trust in your tests (and your team, by proxy).

Using an official QA process can help mitigate these risks

At Clearlink, our CRO team creates a thorough document of all of the variables that our experiments address, the goals that our software is recording during the test, and the target audience. Then we pass this document and all variation pages over to our internal QA team to confirm before we ever live-launch a test.

If possible, use the organization’s QA team to look through your test pages. An internal QA team is (hopefully) already familiar with the organization’s pages and structure, and they’re the best resource for finding issues with your tests within the existing site structure.

If you don’t have an internal QA team, there are options for outsourcing (for example, RainforestQA). Either way, you still need some way to check your tests for errors before launch.

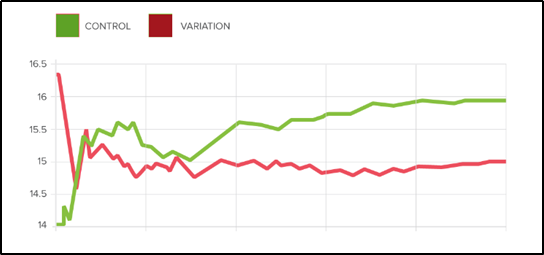

After launching a test, watch the results carefully for the first few days before you let the test run the rest of its course. If the data looks really weird, that can be a quick indication that there is an issue with test setup.

For example, my team ran a very straightforward CTA language test. The next day, we saw a 40% decrease in sales. It turned out that our variation page had a broken dynamic variable that recorded sales data. There was no way to share those results with the rest of the organization, because even our team didn’t trust them. A simple QA error led to essentially useless test results.
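Part of this early monitoring can be automated. The sketch below runs two common sanity checks, swapped in here for illustration rather than taken from the process described above: a sample ratio mismatch (SRM) check, which uses a chi-square goodness-of-fit test to ask whether traffic actually split the way the test was configured (assumed 50/50), and a simple guardrail that flags a sharp drop in the variant’s conversion rate. The thresholds (`srm_alpha=0.001`, a 30% relative drop) are assumptions to tune, not standards.

```python
from __future__ import annotations

import math


def srm_p_value(control_visitors: int, variant_visitors: int,
                variant_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) for sample ratio
    mismatch: is the observed split plausible under the configured split?"""
    total = control_visitors + variant_visitors
    expected = (total * (1 - variant_share), total * variant_share)
    observed = (control_visitors, variant_visitors)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def early_warnings(control_visitors: int, control_conversions: int,
                   variant_visitors: int, variant_conversions: int,
                   srm_alpha: float = 0.001,
                   max_relative_drop: float = 0.30) -> list[str]:
    """Return warnings worth investigating; an empty list means nothing looks off."""
    if control_visitors == 0 or variant_visitors == 0:
        return ["One arm has no visitors: check that both versions are being served."]
    warnings = []
    p = srm_p_value(control_visitors, variant_visitors)
    if p < srm_alpha:
        warnings.append(f"Sample ratio mismatch (p = {p:.2g}): check assignment and tracking.")
    control_rate = control_conversions / control_visitors
    variant_rate = variant_conversions / variant_visitors
    if control_rate > 0 and (control_rate - variant_rate) / control_rate > max_relative_drop:
        warnings.append("Variant conversion rate fell sharply: verify goal tracking on the variant page.")
    return warnings
```

A drop as large as the 40% one in the example above would trip the second check. Treat these warnings as prompts to inspect the setup, not as a reason to call a winner or loser early.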

5. Avoiding Admitting the Hard Truth When a Test Fails Miserably and Costs You Real Money

In the example above, our variation pages were causing a 40% decrease in sales. Lying about our results or skewing the data to look less tragic was definitely an option, but we knew our testing program would be at risk if the organization didn’t trust us to be honest about our results. (In this case, we re-ran the test to provide real results that the company could use.)

You didn’t “fail” – you learned

Frame a test loss as a learning opportunity. This is easy, because it is a learning opportunity.

Say you run a valid test and the variation loses by 50%. Not only did you prevent the company from skipping the test and simply implementing the change (dropping revenue sitewide), but you also learned something essential about your audience: what you changed mattered. Your changes made a notable difference in how they behaved.

Make sure your organization knows that testing carries some inherent risk, but that it also significantly mitigates the risk of test-free implementation. Deploying changes sitewide without testing is equivalent to running an A/B test with 100% of traffic sent to the new variation, which is not something most CRO teams would recommend.
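A back-of-the-envelope model makes this comparison concrete. Assume, hypothetically, a change that cuts the conversion rate by a fixed percentage: the conversions lost then scale with the share of traffic exposed to it. The sketch below ignores everything else (variance, seasonality, novelty effects) and optimistically assumes an untested rollout would be caught after the same number of days as the test.

```python
def expected_lost_conversions(daily_visitors: float, baseline_rate: float,
                              relative_drop: float, share_exposed: float,
                              days: int) -> float:
    """Conversions lost versus baseline when `share_exposed` of traffic sees a
    change that lowers the conversion rate by `relative_drop` for `days` days."""
    exposed_visits = daily_visitors * share_exposed * days
    return exposed_visits * baseline_rate * relative_drop


# Hypothetical numbers: 10,000 visitors/day, 3% baseline, a 50% relative drop.
ab_test = expected_lost_conversions(10_000, 0.03, 0.50, share_exposed=0.5, days=14)
rollout = expected_lost_conversions(10_000, 0.03, 0.50, share_exposed=1.0, days=14)
print(f"50/50 A/B test: {ab_test:,.0f} lost conversions")
print(f"Untested rollout: {rollout:,.0f} lost conversions")
```

With these made-up inputs, the 50/50 test loses roughly 1,050 conversions and the untested rollout roughly 2,100. The real gap can be wider, because without a control group a rollout’s drop may go unnoticed for much longer than two weeks.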

Conclusion

There are a lot of ways that your CRO team can destroy the trust that your organization has in you.

Fortunately, there are just as many ways to avoid those pitfalls and build a testing program that the organization believes in and invests resources in. Understanding the importance of organizational trust and buy-in is crucial to any CRO program.

Guidelines to maintain organizational trust in your testing team:

- Avoid letting ego cloud your test ideas and strategy.

- Understand your unique demographic data – the “who” – before jumping straight into A/B testing.

- Learn how your website visitors think and use your site – the “why”.

- QA the test that you’ve set up before you launch it.

- Be honest with all results and promote a culture of experimentation and learning in the organization.