Keyword research, high-quality content, link building, on-page SEO: these tactics help you improve organic rankings. But on their own, they will only take you so far.

Getting your technical SEO house in order is the final piece of the puzzle in site ranking.

Technical SEO is about giving your searchers a top-quality web experience. People want fast-loading web pages, stable graphics, and content that’s easy to interact with.

In this article, we’ll explore what technical SEO is and how it impacts website growth.


What is technical SEO (and how does it impact growth)?

Technical SEO is the process of making sure your website meets the technical requirements of search engines in order to improve organic rankings. By improving certain elements of your website, you’ll help search engine spiders crawl and index your web pages more easily.

Strong technical SEO can also help improve your user experience, increase conversions, and boost your site’s authority.

The key elements of technical SEO include:

- Crawling

- Indexing

- Rendering

- Site architecture

Even if your content is high quality, answers search intent, provides value, and has plenty of backlinks, it will be difficult to rank without proper technical SEO in place. The easier you make life for Google, the more likely your site is to rank.

It’s been suggested that the speed of a system’s response should mimic the delays humans experience when they interact with one another. That means page responses should take around 1-4 seconds.

If your site takes more than four seconds to load, people may lose interest and bounce.

Page speed doesn’t just affect user experience; it can also affect sales on ecommerce sites.

As Kit Eaton famously wrote in Fast Company:

“Amazon calculated that a page load slowdown of just one second could cost it $1.6 billion in sales each year. Google has calculated that by slowing its search results by just four-tenths of a second they could lose 8 million searches per day—meaning they’d serve up many millions fewer sponsored ads.”

That’s proof that a slow-loading site, just one element of technical SEO, can directly affect business growth.

Should you use a technical SEO consultant or do your own audit?

You should conduct a technical SEO audit every few months. This helps you monitor technical factors (that we’re about to dive into), on-page factors like site content, and off-page factors like backlinks.

Tools like Ahrefs make running your own audit easier. They’re also cheaper and faster than hiring a technical SEO consultant. A consultant may take weeks or even months to complete an audit, whereas conducting your own can take hours or days.

That said, expert insights from an experienced consultant can be important if you:

- Are deploying a new website and want to make sure it’s set up properly

- Are replacing an old site with a new one and want to ensure a seamless migration

- Have multiple websites and need insights into whether you should optimize them separately or merge them together

- Run your web pages in multiple languages and want to ensure each version is properly set up

Either way, to get technical SEO right, prioritize a well-rounded understanding of the basics.

1. Crawling and indexing

Search engines need to be able to identify, crawl, render, and index web pages.

Website architectures

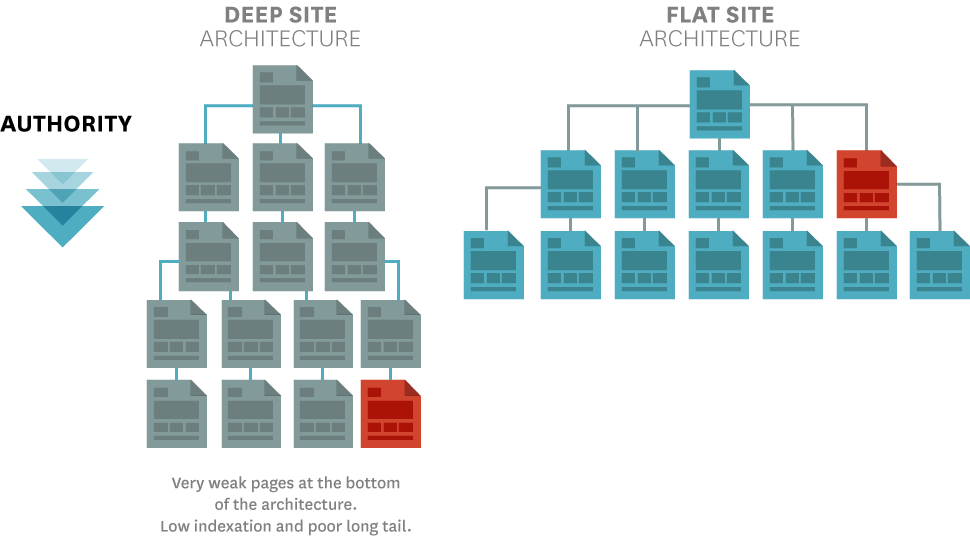

Web pages that aren’t clearly connected to the homepage are harder for search engines to identify.

You likely won’t have any problem getting your homepage indexed. But pages that are several clicks away from the homepage often run into crawling and indexing issues, especially if too few internal links point to them.

Flat website architectures, where web pages are clearly linked to each other, help avoid this outcome:

Using a pillar page structure with internal links to relevant pages can help search engines index your site’s content. Because homepages often have higher page authority, linking deeper pages closely to the homepage makes them more discoverable to crawling search engine spiders.
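To get a feel for how flat your architecture is, you can measure click depth: the minimum number of clicks it takes to reach each page from the homepage. Here’s a minimal Python sketch that runs a breadth-first search over an internal-link graph. The `site` dict is hypothetical data standing in for a crawl export, and the three-click cut-off is a common rule of thumb rather than a Google requirement.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search from the homepage; returns {url: minimum clicks to reach it}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by its shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, home, max_depth=3):
    """Pages more than max_depth clicks from the homepage (assumed threshold)."""
    return sorted(url for url, depth in click_depths(links, home).items() if depth > max_depth)

# Hypothetical internal-link graph: each page maps to the pages it links to.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-2/"],
    "/blog/post-2/": ["/blog/post-3/"],
    "/products/": [],
}

print(click_depths(site, "/"))
print(too_deep(site, "/"))  # ['/blog/post-3/']
```

Pages in the second list are good candidates for extra internal links from your homepage or pillar pages.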

XML sitemaps

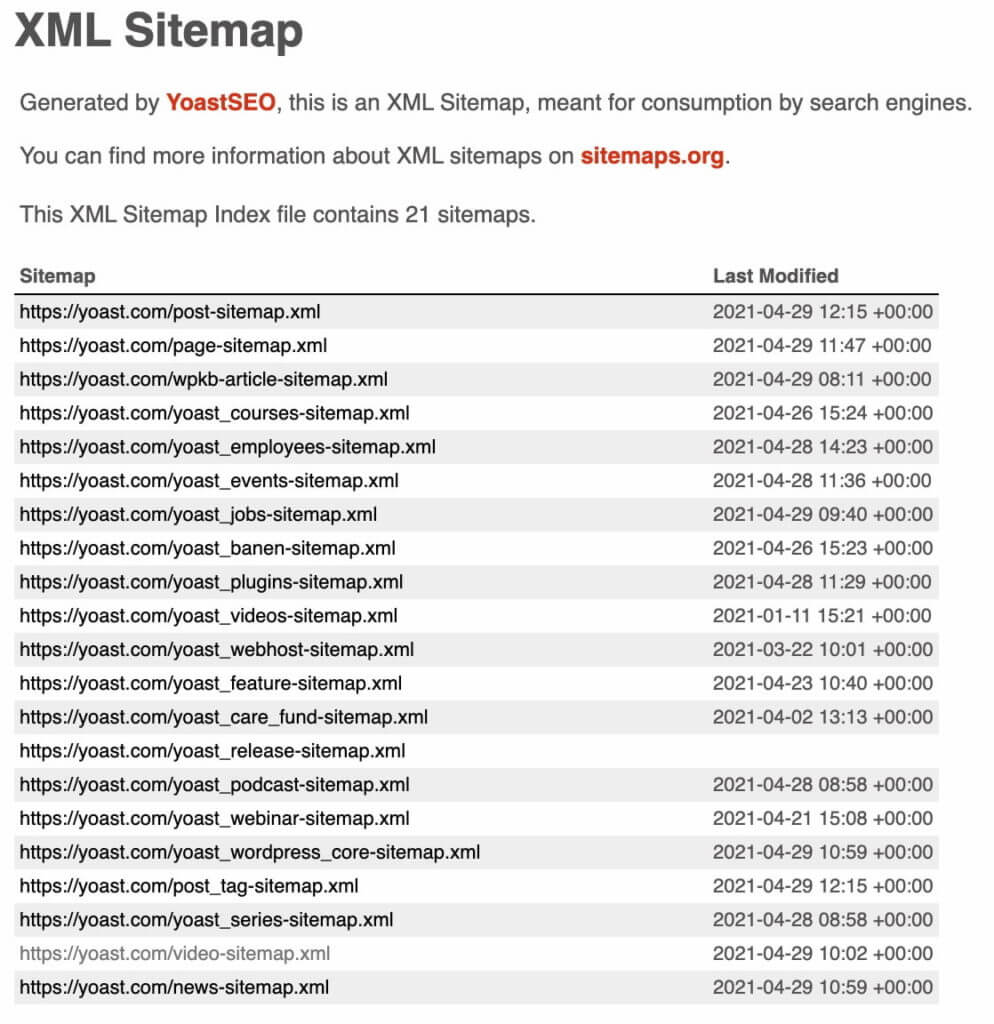

XML sitemaps list the most important pages on your site. They may seem outdated next to newer ranking factors like mobile responsiveness, but Google still considers them important.

XML sitemaps can tell search engines:

- When a page was last modified

- How frequently pages are updated

- What priority pages have on your site
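If your CMS or SEO plugin doesn’t generate a sitemap for you, the format is simple enough to build yourself. This sketch uses Python’s built-in `xml.etree.ElementTree` to write a sitemap in the sitemaps.org format; the example.com URLs and dates are placeholders. Note that Google’s sitemap documentation says it ignores `changefreq` and `priority` and only uses `lastmod` when it’s consistently accurate, so an accurate `lastmod` is the field most worth maintaining.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
FIELDS = ("loc", "lastmod", "changefreq", "priority")

def build_sitemap(pages):
    """pages: list of dicts with a required 'loc' and optional lastmod/changefreq/priority."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        for field in FIELDS:
            if field in page:  # only write the fields this page actually has
                ET.SubElement(url, field).text = str(page[field])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages; lastmod uses the W3C date format (YYYY-MM-DD).
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-05-01"},
    {"loc": "https://www.example.com/blog/technical-seo/", "lastmod": "2024-04-18"},
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```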

Within Google Search Console, you can check the XML sitemap the search engine sees when crawling your site. Here’s Yoast.com’s XML sitemap:

Use the following tools to regularly check for crawl errors and to see if web pages are indexed by search engine spiders:

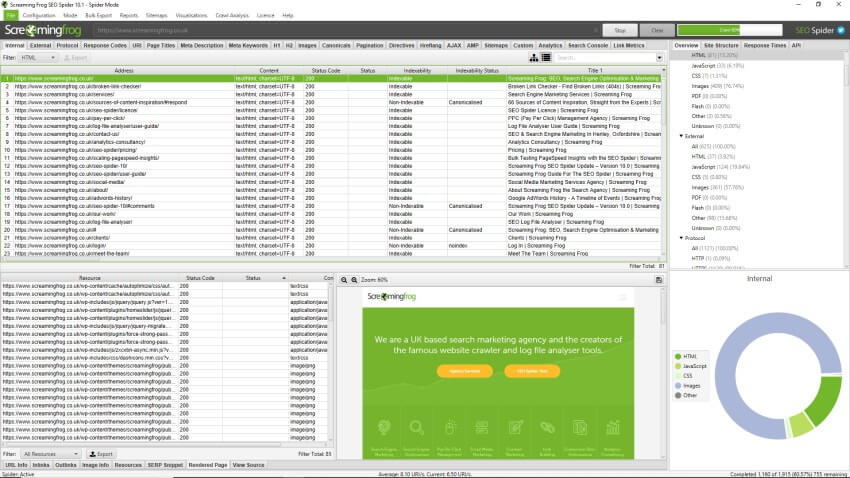

Screaming Frog

Screaming Frog is the industry gold standard for identifying indexing and crawling issues:

When you run it, it crawls your site, extracts the data, and audits it for common issues, like broken redirects and URLs. With these insights, you can make quick changes, like fixing a broken link that returns a 404 error, or plan for longer-term fixes, like updating meta descriptions site-wide.
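Under the hood, a crawler like Screaming Frog follows links page by page and records what each URL returns. The heavily simplified Python sketch below does the same for a single issue: internal links that point to 404 pages. To keep it self-contained, the `site` dict is a hypothetical in-memory stand-in for real HTTP responses; an actual crawler would also fetch pages over the network, respect robots.txt, and follow redirects.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag on a page."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.hrefs.append(href)

def find_broken_links(site, start):
    """site: hypothetical dict of URL -> (status_code, html), standing in for real fetches.
    Crawls internal links from start; returns (source_page, broken_url) pairs for 404s."""
    host = urlparse(start).netloc
    seen, queue, broken = {start}, deque([start]), []
    while queue:
        page = queue.popleft()
        status, html = site.get(page, (404, ""))
        if status != 200:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.hrefs:
            target = urldefrag(urljoin(page, href)).url  # resolve relative links, drop #fragments
            if urlparse(target).netloc != host:
                continue  # only audit internal links
            if site.get(target, (404, ""))[0] == 404:
                broken.append((page, target))
            elif target not in seen:
                seen.add(target)
                queue.append(target)
    return broken

site = {
    "https://example.com/": (200, '<a href="/about/">About</a> <a href="/old-page/">Old offer</a>'),
    "https://example.com/about/": (200, '<a href="/">Home</a> <a href="https://partner.example.org/">Partner</a>'),
}
print(find_broken_links(site, "https://example.com/"))
# [('https://example.com/', 'https://example.com/old-page/')]
```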

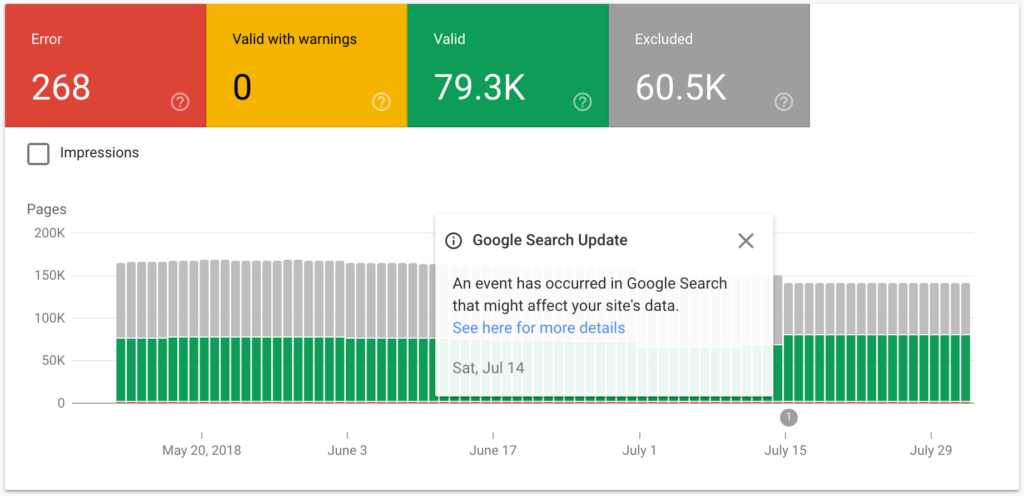

Coverage report and indexing checks in Google Search Console

Google Search Console has a helpful Coverage report that tells you if Google is unable to fully index or render pages that you need to be discovered:

Sometimes web pages are indexed by Google but rendered incorrectly. That means that although Google can index the page, it isn’t seeing 100% of the content.

2. Site structure

Your site structure is the foundation of all other technical elements. It will influence your URL structure and robots.txt, which allows you to decide which pages search engines can crawl and which to ignore.

Many crawling and indexing issues are the result of poorly thought-out website structures. If you build a clear site structure that both Google and searchers can easily navigate, you’re far less likely to run into crawling and indexing issues later on.

In short, simple website structures help you with other technical SEO tasks.

A neat site structure logically links web pages so neither humans nor search engines are confused about the pages’ order.

Orphan pages are pages that no other page on your site links to. Site managers should avoid them because search engines may never crawl or index them.
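One way to spot orphan pages is to compare every URL you know exists (for example, from your XML sitemap or a CMS export) with the set of URLs that receive at least one internal link. Here’s a minimal Python sketch of that comparison; the URLs and link data are hypothetical.

```python
def find_orphans(known_urls, links, home="/"):
    """known_urls: every URL you expect to exist (e.g. from your sitemap).
    links: dict of page -> list of internal URLs that page links to.
    Returns known pages that receive no internal links (the homepage is exempt)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't rescue it
    }
    return sorted(set(known_urls) - linked_to - {home})

# Hypothetical data: URLs from the sitemap and links found by a crawl.
sitemap_urls = ["/", "/pricing/", "/blog/", "/old-landing-page/"]
internal_links = {
    "/": ["/pricing/", "/blog/"],
    "/blog/": ["/"],
    "/old-landing-page/": ["/pricing/"],
}

print(find_orphans(sitemap_urls, internal_links))  # ['/old-landing-page/']
```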

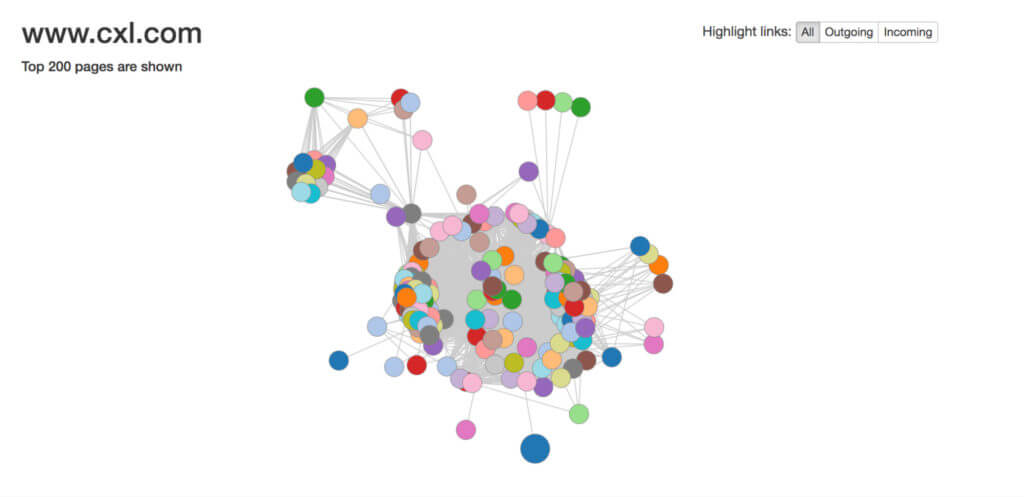

Tools like Visual Site Mapper can help you see your site structure clearly and assess how well pages are linked to each other. A solid internal linking structure demonstrates the relationship between your content for Google and users alike:

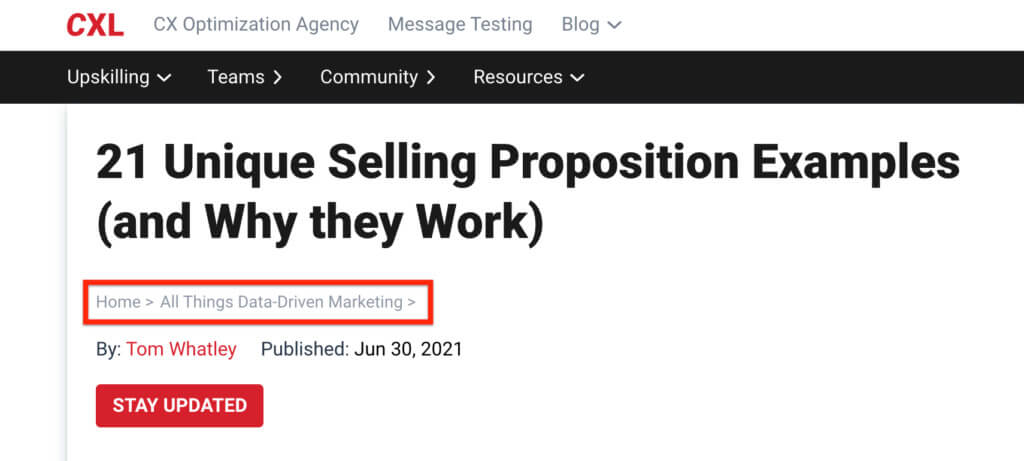

Breadcrumb navigation

Breadcrumb navigation is like leaving a trail of clues for search engines and users about the kind of content they can expect.

Breadcrumb navigation adds internal links from each page back up to its parent pages on your site:

This helps clarify your site’s architecture and makes it easy for humans and search engines to find what they’re looking for.
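You can also describe your breadcrumb trail to search engines with schema.org `BreadcrumbList` structured data. This Python sketch turns an ordered list of (name, URL) pairs into a JSON-LD script tag you could place in a page’s HTML; the trail itself is a hypothetical example.

```python
import json

def breadcrumb_jsonld(trail):
    """trail: ordered (name, url) pairs from the homepage down to the current page."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)  # positions start at 1
        ],
    }
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"

trail = [
    ("Home", "https://www.example.com/"),
    ("Dog beds", "https://www.example.com/dog-beds/"),
    ("Classic dog bed", "https://www.example.com/dog-beds/classic/"),
]
print(breadcrumb_jsonld(trail))
```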

Structured data

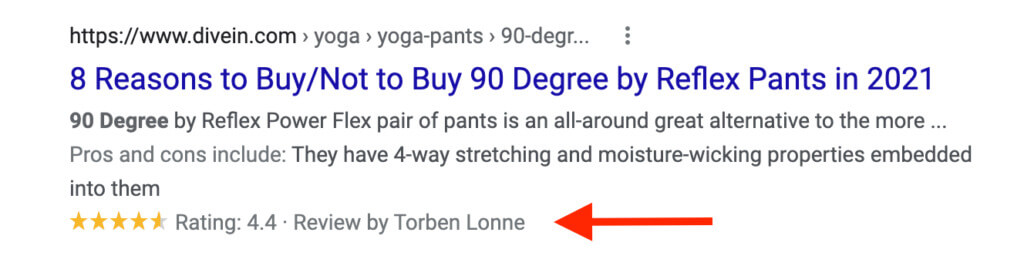

Using structured data markup can help search engines better understand your content. As a bonus, it can also make your pages eligible for rich snippets, which can encourage more searchers to click through to your site.

Here’s an example of a review rich snippet in Google:

You can use Google’s Structured Data Markup Helper to help you create new tags.
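Before you publish review markup, it helps to sanity-check it. This Python sketch pulls every JSON-LD block out of a page and flags `aggregateRating` objects that lack a `ratingValue` or a `ratingCount`/`reviewCount`, which Google’s review snippet documentation lists as required. It’s a rough pre-check under those assumptions, not a substitute for Google’s Rich Results Test, and the product markup in the example is made up.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collects and parses every <script type="application/ld+json"> block on a page."""
    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = None  # text of the JSON-LD script currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append(json.loads("".join(self._buffer)))
            self._buffer = None

def rating_problems(html):
    """Flag aggregateRating objects missing ratingValue or a rating/review count."""
    parser = JsonLdExtractor()
    parser.feed(html)
    problems = []
    for block in parser.blocks:
        for item in block if isinstance(block, list) else [block]:
            rating = item.get("aggregateRating")
            if rating is None:
                continue
            kind = item.get("@type", "item")
            if "ratingValue" not in rating:
                problems.append(f"{kind}: aggregateRating is missing ratingValue")
            if "ratingCount" not in rating and "reviewCount" not in rating:
                problems.append(f"{kind}: aggregateRating needs ratingCount or reviewCount")
    return problems

product_page = """<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Classic dog bed",
 "aggregateRating": {"@type": "AggregateRating", "ratingValue": "4.8"}}
</script>"""
print(rating_problems(product_page))
# ['Product: aggregateRating needs ratingCount or reviewCount']
```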

URL structure

Long, complicated URLs that feature seemingly random numbers, letters, and special characters confuse users and search engines.

URLs need to follow a simple and consistent pattern. That way, users and search engines can easily understand where they are on your site.
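A small helper can keep URLs simple and consistent by generating them from page titles. The Python sketch below lowercases the title, strips accents and punctuation, and joins the words with hyphens under a category folder; the function names and example titles are illustrative, not taken from any particular CMS.

```python
import re
import unicodedata

def slugify(title):
    """Turn a page title into a short, readable URL slug."""
    # Decompose accented characters (é -> e + accent), then drop anything non-ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower().replace("'", "")  # "beginner's" -> "beginners"
    text = re.sub(r"[^a-z0-9]+", "-", text)  # each run of other characters becomes one hyphen
    return text.strip("-")

def build_url(category, title):
    """Place the slug under a consistent category folder, e.g. /webinar/<slug>/."""
    return f"/{slugify(category)}/{slugify(title)}/"

print(slugify("Technical SEO: A Beginner's Guide (2024)!"))  # technical-seo-a-beginners-guide-2024
print(build_url("Webinar", "Café Growth Tactics"))  # /webinar/cafe-growth-tactics/
```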

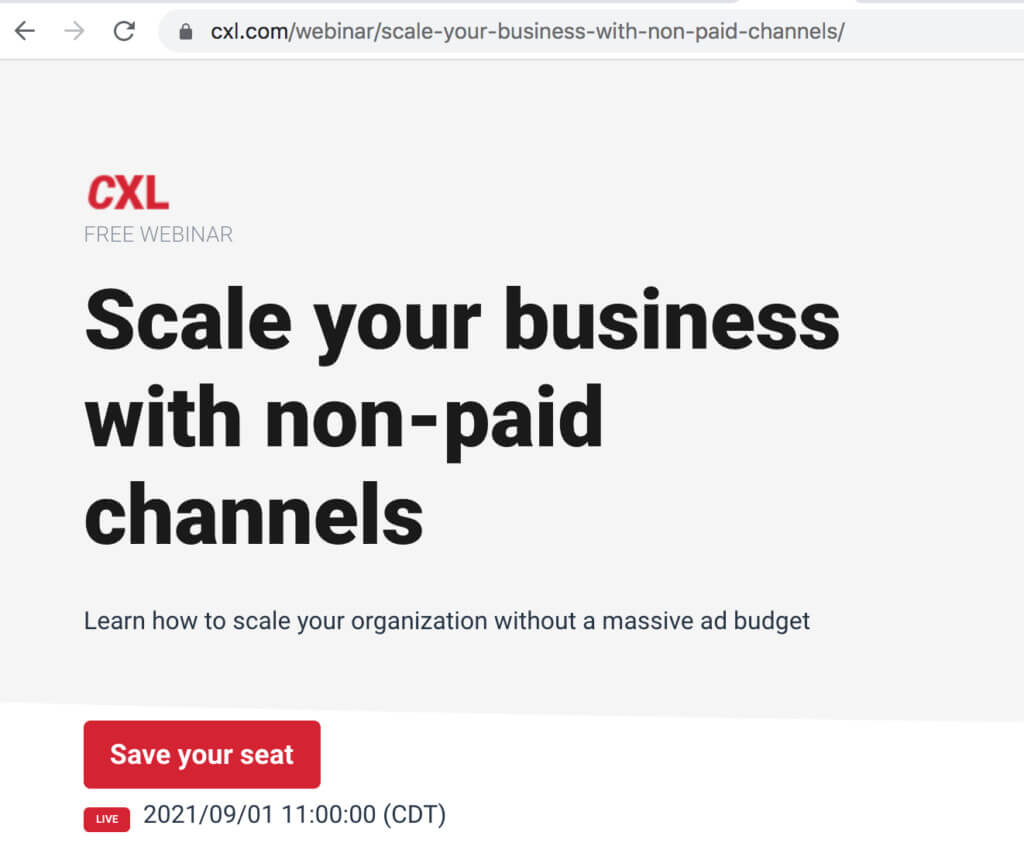

On CXL, all the pages on the webinar hub include the /webinar/ subfolder to help Google recognize that these pages fall under the “webinar” category:

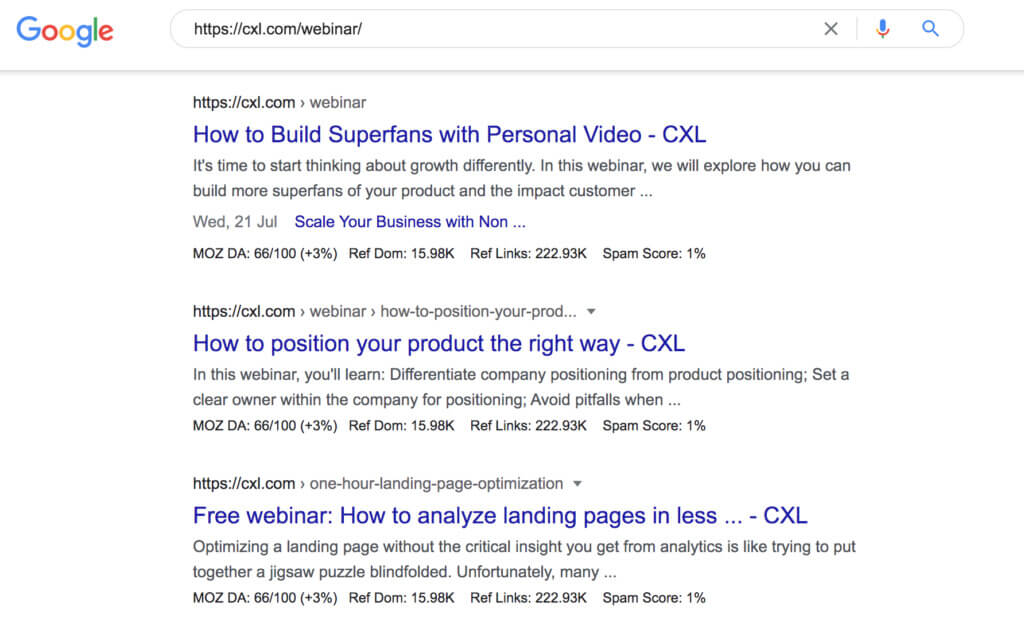

Google clearly recognizes it this way, too. Type https://cxl.com/webinar/ into the search bar, and Google pulls up relevant webinar results that fall under this folder:

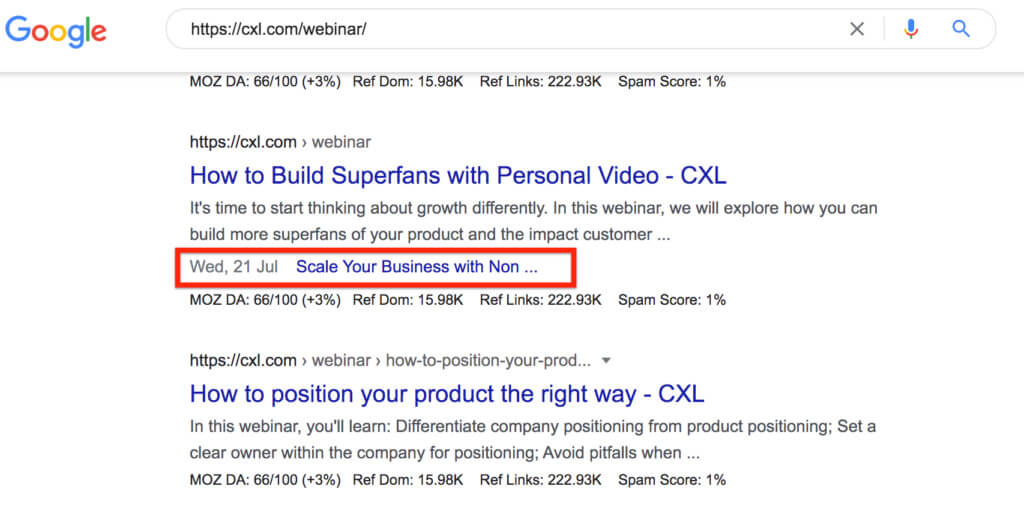

Site links within the results also show that Google recognizes the categorization:

3. Content quality

High-quality content is undoubtedly one of your site’s strongest assets. But there’s a technical side to content, too.

Duplicate content

Creating original, high-quality content is one way to avoid duplicate content issues. But duplicate content can be a problem even on sites where the content is generally high quality.

If a CMS creates multiple versions of the same page on different URLs, this counts as duplicate content. Almost every large site has duplicate content somewhere; the problem arises when that duplicate content gets indexed.
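To find exact duplicates like these, you can fingerprint each page’s main text and group URLs whose fingerprints match. The Python sketch below hashes lightly normalized text with SHA-256; the `pages` dict is hypothetical crawl data. It only catches identical content, so near-duplicates (say, product pages that differ by one sentence) would need a fuzzier comparison.

```python
import hashlib
import re
from collections import defaultdict

def normalize(text):
    """Lowercase and collapse whitespace so trivial formatting differences don't matter."""
    return re.sub(r"\s+", " ", text).strip().lower()

def duplicate_groups(pages):
    """pages: dict of URL -> extracted body text. Returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

# Hypothetical crawl data: the same page reachable with and without a session parameter.
pages = {
    "https://example.com/shoes": "Red running shoes",
    "https://example.com/shoes?sessionid=123": "Red  running shoes\n",
    "https://example.com/socks": "Wool socks",
}
print(duplicate_groups(pages))
# [['https://example.com/shoes', 'https://example.com/shoes?sessionid=123']]
```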

To keep duplicate content from harming your rankings, add a noindex tag to duplicate pages.
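A noindex directive can live in a robots meta tag in the page’s `<head>` (`<meta name="robots" content="noindex">`) or in an `X-Robots-Tag: noindex` HTTP header. This Python sketch checks both, so you can confirm the directive is actually on the page before asking Google to recrawl it. It’s simplified: it ignores crawler-specific header forms such as `googlebot: noindex`, and the example HTML is hypothetical.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""
    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            for part in (attrs.get("content") or "").split(","):
                self.directives.add(part.strip().lower())

def is_noindexed(html, headers=None):
    """True if the page carries noindex in a robots meta tag or an X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header = (headers or {}).get("X-Robots-Tag", "")
    header_directives = {part.strip().lower() for part in header.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives

print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(is_noindexed("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))  # True
```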

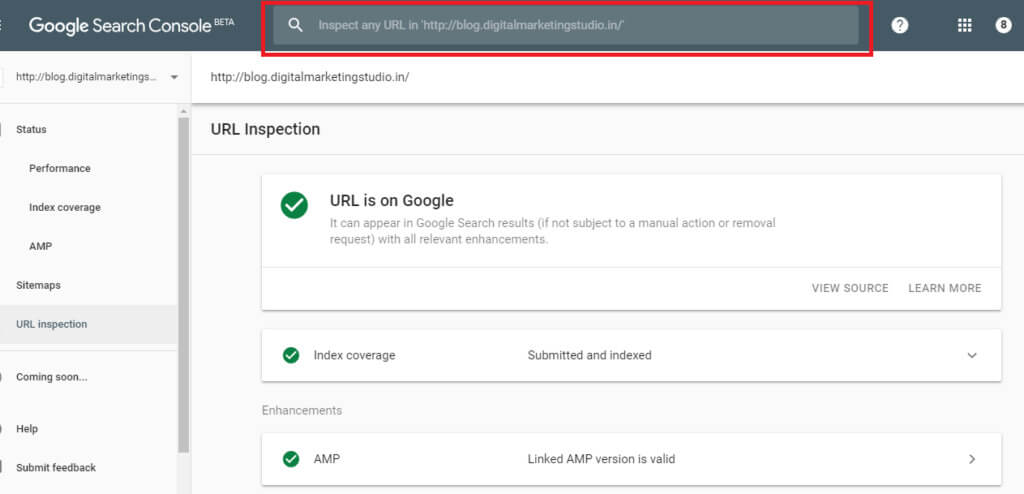

Within Google Search Console, you can check if your noindex tags are set up by using Inspect URL:

If Google is still indexing your page, you’ll see a “URL is available to Google” message. That would mean that your noindex tag hasn’t been set up correctly. An “Excluded by noindex tag” pop-up tells you the page is no longer indexed.

Google may take a few days or weeks to recrawl those pages you don’t want indexed.

Use robots.txt

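If you find examples of duplicate content on your site, you don’t have to delete them. Instead, you can block search engines from crawling them.

Create a robots.txt file to block search engine crawlers from pages you don’t want crawled, such as orphan pages or duplicate content. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, and Google can’t see a noindex tag on a page it isn’t allowed to crawl.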
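Python’s standard library ships a robots.txt parser, `urllib.robotparser`, which is handy for checking that your rules block what you expect before you deploy them. The rules and URLs below are hypothetical. One caveat: this parser implements the basic robots exclusion rules and doesn’t understand Google’s wildcard extensions (`*` and `$` inside paths), so don’t rely on it for rules that use those.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of printer-friendly duplicates and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
Disallow: /search
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/blog/technical-seo/", "/print/blog/technical-seo/", "/search?q=seo"):
    url = "https://www.example.com" + path
    print(path, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")
```

Running it should report the blog post as allowed and the print and search URLs as blocked.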

Canonical URLs

Most web pages with duplicate content should just get a noindex tag added to them. But what about those pages with very similar content on them? These are often product pages.

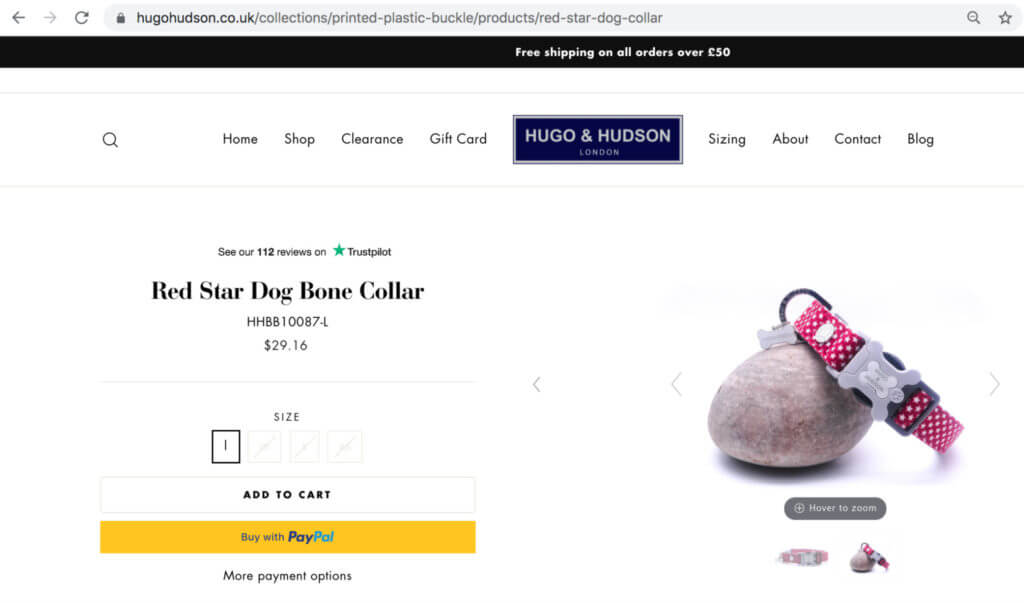

For example, Hugo & Hudson sells products for dogs and hosts similar pages with slight variations in size, color, and description:

Without canonical tags, each variant lives on its own URL, and search engines may treat those near-identical pages as duplicates competing with each other.

With canonical tags pointing to the primary product page, Google understands which URL is the main version and which are variations, and it consolidates ranking signals on the primary page instead of splitting them across variants.
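For variant URLs that differ only by query parameters, you can compute the canonical URL by stripping those parameters and emit a `<link rel="canonical">` tag pointing at the result. In this Python sketch, `VARIANT_PARAMS` (`size` and `color`) is a hypothetical list; which parameters only select a variant depends entirely on your store’s setup.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters that only select a variant of the same product.
VARIANT_PARAMS = {"size", "color"}

def canonical_url(url):
    """Remove variant-only query parameters so every variant maps to the primary product URL."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query) if key not in VARIANT_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(url):
    """Build the <link rel="canonical"> tag for a variant page's <head>."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}">'

print(canonical_tag("https://www.example.com/dog-collars/classic?color=red&size=m"))
# <link rel="canonical" href="https://www.example.com/dog-collars/classic">
```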

4. Site speed

Page speed can directly impact a page’s ranking. If you could only focus on one element of technical SEO, understanding what contributes to page speed should be top of the list.

Page speed and organic traffic

A fast-loading site isn’t the only thing you need for high-ranking pages, but Google does use it as a ranking factor.

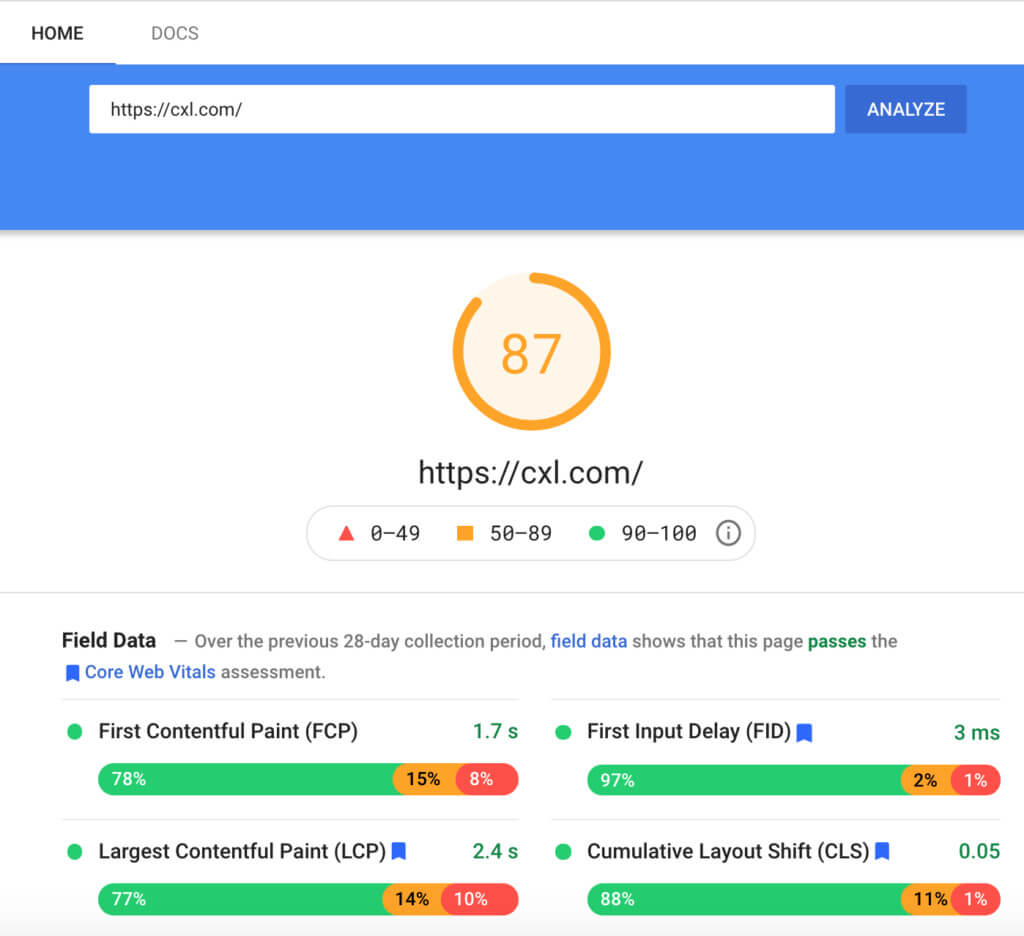

Using Google PageSpeed Insights, you can quickly assess your website’s loading time:
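PageSpeed Insights is also available as an API, which makes it easy to check scores for many URLs on a schedule. This Python sketch builds a request to the v5 `runPagespeed` endpoint and reads the Lighthouse performance score from the JSON response. The `check` function makes a live network call (heavier use may require an API key), so treat it as a starting point rather than a finished monitoring script.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"

def psi_request_url(page_url, strategy="mobile"):
    """Build a PageSpeed Insights API request URL; strategy is 'mobile' or 'desktop'."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})

def performance_score(response):
    """Read the Lighthouse performance score (0-1 in the API) and scale it to 0-100."""
    return round(response["lighthouseResult"]["categories"]["performance"]["score"] * 100)

def check(page_url):
    """Live network call: fetch a report for page_url and return its performance score."""
    with urlopen(psi_request_url(page_url), timeout=120) as resp:
        return performance_score(json.load(resp))
```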

CDNs and load time

CDNs (content delivery networks) store cached copies of your files on servers in many locations, so each searcher is served from a cache server close to them. Because the data has less distance to travel, the wait time decreases and the page loads faster.

But CDNs can be hit and miss. If they’re not set up properly, they can actually slow your website down. If you do set up a CDN, make sure to test your load times both with and without it using webpagetest.org.
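WebPageTest gives you full-page, real-browser measurements from multiple locations. If you just want a quick, rough comparison of how long a single file takes to download from your origin server versus through your CDN, a few lines of Python will do. The origin and CDN URLs you pass in are your own (any hostnames are assumptions about your setup), and because this times one file from one location, treat the result as a hint, not a verdict.

```python
import statistics
import time
from urllib.request import urlopen

def fetch(url):
    """Download a URL in full (a live network call)."""
    with urlopen(url, timeout=30) as resp:
        resp.read()

def median_load_time(url, runs=5, fetcher=fetch):
    """Median wall-clock seconds to fetch url over several runs (the median resists outliers)."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fetcher(url)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)

def compare(origin_url, cdn_url, runs=5, fetcher=fetch):
    """Time the same file served from your origin and through your CDN."""
    origin = median_load_time(origin_url, runs, fetcher)
    cdn = median_load_time(cdn_url, runs, fetcher)
    return {"origin_s": origin, "cdn_s": cdn, "cdn_faster": cdn < origin}
```

The `fetcher` parameter exists so you can swap in a different download function, for example one that adds request headers.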

Mobile responsiveness

Mobile-friendliness is now a key ranking factor. Google’s mobile-friendly test is a simple usability test to see whether your web pages work properly and provide a good UX on a smartphone.

The tool also highlights a few things site managers can do to improve their pages.

For example, Google recommends using AMP (Accelerated Mobile Pages), which is designed to deliver content much faster to mobile devices.

Redirects and page speed

Redirects can improve a site by sending visitors (and link equity) from old URLs to live pages instead of dead ends. But each redirect adds a delay, so lots of redirects will cause your website to load more slowly.

The more redirects, the more time searchers spend getting to the desired landing page.

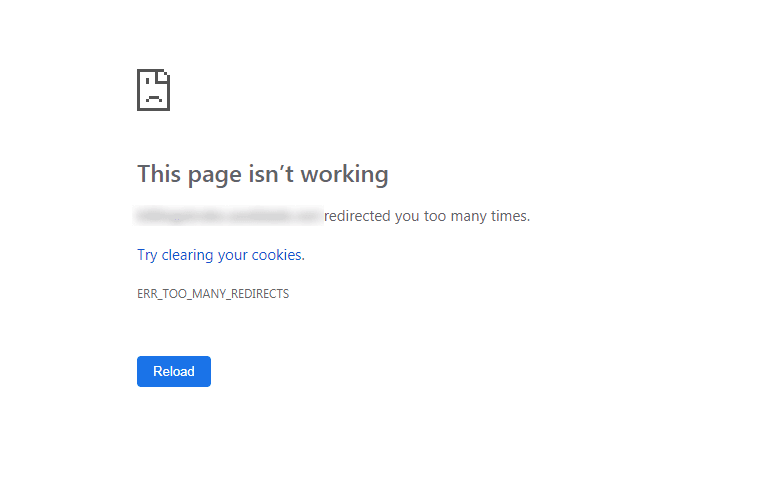

Since redirects slow down website loading times, it’s important to keep the number of page redirects low. Redirect loops (i.e., when redirects point back to each other so the chain never reaches a final page) also cause loading issues and error messages:
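Given a map of which URL redirects where (exported from a crawler, for example), you can trace every chain to its final destination and flag both long chains and loops. In this Python sketch, the `redirects` dict is hypothetical data; any URL that needs more than one hop is a candidate for redirecting straight to its final page.

```python
def follow_redirects(redirects, start):
    """redirects: hypothetical dict of URL -> the URL it redirects to.
    Returns (final_url, hops_followed, is_loop)."""
    visited = [start]
    url = start
    while url in redirects:
        url = redirects[url]
        if url in visited:
            return url, len(visited), True  # revisited a URL, so the chain never ends
        visited.append(url)
    return url, len(visited) - 1, False

def audit(redirects):
    """Flag loops and chains of more than one hop for every redirecting URL."""
    report = {}
    for url in redirects:
        final, hops, is_loop = follow_redirects(redirects, url)
        if is_loop:
            report[url] = "loop"
        elif hops > 1:
            report[url] = f"chain of {hops} hops; redirect straight to {final}"
    return report

redirects = {"/old": "/older", "/older": "/new", "/a": "/b", "/b": "/a", "/moved": "/new"}
print(audit(redirects))
# {'/old': 'chain of 2 hops; redirect straight to /new', '/a': 'loop', '/b': 'loop'}
```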

Large files and load time

Large file sizes like high-res photos and videos take longer to load. By compressing them, you can reduce load time and improve the overall user experience.

Common image formats like GIF, JPEG, and PNG all have plenty of compression solutions available.

To save time, try compressing your images in bulk with dedicated compression tools like tinypng.com and compressor.io.
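Before you run a bulk compression pass, it helps to know which files are worth compressing. This Python sketch walks a folder (for instance, a WordPress uploads directory) and lists image files above a size threshold, largest first. The 200 KB cut-off is an arbitrary assumption; pick one that suits your pages.

```python
import os

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}

def large_images(root, max_kb=200):
    """Walk a directory tree and return (path, size_in_kb) for images over max_kb, biggest first."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS:
                path = os.path.join(dirpath, name)
                size_kb = os.path.getsize(path) / 1024
                if size_kb > max_kb:
                    found.append((path, round(size_kb)))
    return sorted(found, key=lambda item: item[1], reverse=True)
```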

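Choose the format to suit the image: PNG tends to give the best quality-to-size ratio for graphics, logos, and images with transparency, while JPEG usually produces much smaller files for photographs.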

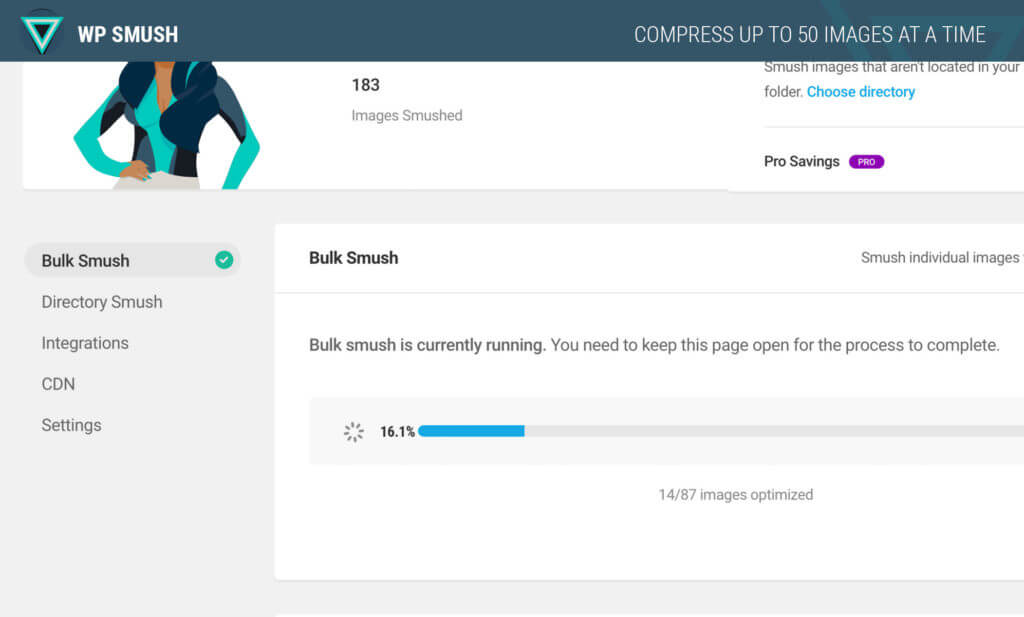

WordPress plugins like SEO Friendly Images and Smush can also guide you on how to compress your images:

When using image editing platforms, selecting the “Save for web” option can help ensure that images aren’t too big.

It’s a juggling act, though. Compressing large files is worthwhile, but if heavy compression leaves a page looking low quality and hurts user experience, you’d be better off compressing less aggressively, or not at all.

Cache plugins and storing resources

The browser cache stores resources on a visitor’s device the first time they visit a website. When they return, the browser loads those saved resources locally instead of downloading them again, so the page loads faster.
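How long a browser keeps reusing a cached file is controlled by HTTP caching headers such as `Cache-Control`, which your server or cache plugin sends with each response. The Python sketch below shows one common policy: cache static assets for a year and make browsers revalidate HTML before reusing it. It assumes your build adds a content hash to asset file names (for example `app.3f9a1c.css`); without that, a one-year cache would keep serving stale files after an update.

```python
import os

ONE_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds

# Assumed: these files are fingerprinted, so a changed file always gets a new URL.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg", ".woff2"}

def cache_control_for(path):
    """Pick a Cache-Control header value for a requested path."""
    ext = os.path.splitext(path)[1].lower()
    if ext in STATIC_EXTENSIONS:
        return f"public, max-age={ONE_YEAR}, immutable"
    # HTML and everything else: browsers may store it but must revalidate before reuse.
    return "no-cache"

print(cache_control_for("/assets/app.3f9a1c.css"))  # public, max-age=31536000, immutable
print(cache_control_for("/blog/technical-seo/"))  # no-cache
```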

Combining W3 Total Cache’s caching and Autoptimize’s compression can help you improve your cache performance and load time.

Plugins

It’s easy to add multiple plugins to your site—but take care not to overload it as they can add seconds to your pages’ load time.

Removing plugins that don’t serve an important purpose will help improve your pages’ load time. Keep only the plugins that add real value.

You can check if plugins are valuable or redundant through Google Analytics. In a test environment, manually turn plugins off (one at a time) and test load speed.

You can also run live tests to see if disabling certain plugins affects conversions. For example, turning off an opt-in box plugin and measuring if that affects opt-in rate.

Alternatively, use a tool like Pingdom Tools to measure whether plugins are affecting loading speeds.

Third-party scripts

On average, each third-party script adds an additional 34 milliseconds to page load time.

While some scripts like Google Analytics are necessary, your site may have accumulated third-party scripts that could easily be removed.
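To audit which third-party scripts a page still loads, you can list every `<script src>` on it and group the scripts by the host that serves them. This Python sketch does that for a page’s HTML; the example markup and hosts are hypothetical. It only sees scripts written into the HTML, so anything injected later (by a tag manager, say) won’t show up, and it will also flag subdomains you own, such as a separate static-files host.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class ScriptCollector(HTMLParser):
    """Collects the src of every <script> tag on a page."""
    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        src = dict(attrs).get("src")
        if tag == "script" and src:
            self.sources.append(src)

def third_party_scripts(html, page_url):
    """Group external <script src> URLs by the host that serves them."""
    parser = ScriptCollector()
    parser.feed(html)
    own_host = urlparse(page_url).netloc
    by_host = {}
    for src in parser.sources:
        host = urlparse(urljoin(page_url, src)).netloc  # resolves relative and //host URLs
        if host != own_host:
            by_host.setdefault(host, []).append(src)
    return by_host

page_html = (
    '<script src="/js/app.js"></script>'
    '<script src="https://analytics.example.net/tag.js"></script>'
    '<script src="//cdn.example-widget.com/chat.js"></script>'
)
print(third_party_scripts(page_html, "https://www.example.com/"))
```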

This often happens when you update your site and don’t remove defunct assets. Artifacts from old iterations waste resources and affect speed and UX. You can use a tool like PurifyCSS to remove unnecessary CSS, or make sure your developers check for and purge unused code regularly.

Conclusion

Optimizing technical SEO isn’t a one-size-fits-all process. There are elements that make sense for some websites but not all, and this will vary based on your audience, expected user experience, customer journey, goals, and the like.

By learning the fundamentals behind technical SEO, you’ll be more likely to create and optimize web pages for Google and visitors alike. You’ll also be able to perform technical SEO audits or understand a professional’s methods.

Ready to outrank the competition with technical SEO? Sign up for the CXL technical SEO course today.