What a rush—24 speakers over three days at a picturesque resort. Add to that 400 old and new faces, plenty of conversations, live music, and more than a few beers. CXL Live is an experience.

There was this, too: Our opening video that gave away all the secrets of growing…growth:

If you’re wondering what you missed, here are highlights from each of the sessions at CXL Live this year.

Els Aerts: “The Lost Art of Asking Questions”

- Surveys don’t suck, but most surveys suck. They can work if:

- You get your research questions right.

- You stop focusing on presenting “numbers” and present information.

- How to ask the right questions:

- Don’t ask about the future (e.g. “If we added Feature X, how likely would you be to buy Y?”); your users are not psychics.

- Don’t ask about things that are too far in the past—human memory is extremely unreliable.

- Don’t ask leading questions. (e.g. Don’t ask how “good” something was; ask how “good or bad,” or simply “how” it was.)

- Biasing questions toward the negative can deliver more feedback (e.g. “How hard was it to…”).

- Where and when you deliver a survey can impact results—if it’s a site section where the user is experiencing pain, it will bias responses.

- Confirmation and thank-you pages are great opportunities to ask people questions. (e.g. Did you consider a competitor? If so, what characteristics do we have that made you choose us over them?)

- When setting up interviews, call them “chats,” not interviews—and treat them like chats; anticipate where the conversation could go and listen, listen, listen.

Joanna Wiebe: “Writing Mirrors: How to Use the Voice of the Customer to Write High-Converting Copy”

- 90% of copywriting is listening.

- The goal is to write copy that people see themselves in—their current self and immediate next self.

- Validation for copy: Could this be a breakthrough or bust? Is it pushing things enough to be a total breakthrough? Or absolutely awful?

- Go beyond standard sources of voice of customer data; better options are:

- Interview founders (the original “customers”);

- Thank-you page surveys;

- Usertesting.com;

- Mine sales calls;

- Mine support tickets;

- Mine Facebook comments;

- Mine online reviews.

- Founder interviews can find the story, the value proposition, and the big idea.

- Have the interview on video and record it (with permission).

- Transcribe the interview (rev.com).

- Print and read the transcript with a highlighter: What stands out? What’s different?

- Sales calls and demo recordings can plot communication sequences, hierarchies, and help make copy sticky.

- Gain insight into the flow of how your prospect is actually thinking.

- Watch their expressions as they see the demo.

- Skip to the parts where the prospect is talking.

- Watch for “documentary-style” moments. (e.g. If you give someone the transcript, can they act it out? That’s a good moment.)

- Watch for phrases like “I’m worried about…” and “Can you show me…”

- Tag what you find so you can use it in your copy (#objection #late-stage, etc.).

Carrie Bolton: “Get Real with Your Customers and Your Executives—How to Really Improve the Customer Experience”

- The customer experience is the customer’s perception of their interaction with your business.

- Vanguard decided that focusing on customer experience would help them differentiate from their competitors:

- Set up experimentation around customizing and personalizing the customer experience.

- Ex. Customers go online to avoid calling, so Vanguard rebuilt a page to make it more experiment-friendly and targeted a reduction in customer call rates.

- How to make the case externally:

- Get competitive intelligence from your industry or best-in-class companies (e.g. USAA, CIGNA);

- Forrester Research;

- Customer experience blogs.

- How to make the case internally:

- Quantitative or qualitative research from your customers (e.g. digital analytics, market research).

- “Talk to the people who talk to the people.”

- Talk to your finance people: What performance indicators get attention?

- Tell Don’t Sell.

- When you “sell,” people see right through it.

- Customer surveys and feedback can reveal the honest, needed input that helps you know what to “tell” them.

Judah Phillips: “How I Learned to Stop Worrying about Machine Learning and Love AI”

- Artificial intelligence gives analysts the ability to look forward when making decisions—instead of in the rear-view mirror.

- We’re currently at the onset of Pragmatic AI (e.g. Siri, Alexa).

- AI is also entering the workplace (e.g. recommendation engines, chatbots, automated suggestions for collaboration).

- AI is anything that takes historical data (training data) and learns from the past performance of that data; typically, this is supervised machine learning.

- Deep Learning is machine learning built on multi-layered neural networks. It’s an area of over-inflated expectations.

- Convolutional neural networks (CNNs) are used for image and video recognition.

- Recurrent Neural Networks are good for time-series data.

- Generative Adversarial Networks (GANs) are good at creating fake data and images from the data and pictures you train them on.

- What to do with AI:

- Predict churn;

- Identify which offers to send to an individual;

- Accelerate innovation;

- Personalize content;

- Account-based marketing;

- Algorithmic attribution;

- Forecast future lift;

- Predict blame.

- Understanding what the models do (not necessarily the underlying algorithms) and knowing when to apply them and how to interpret results are the skills analysts will need.

- Automated machine learning will help us solve the issue of having too much data and not enough time:

- Automated machine learning predicts in minutes with high accuracy.

- Historically that’s been expensive. Not anymore. Codeless AI will allow you to do that today.

Ton Wesseling: “Validation in Every Organization”

- Why our CRO jobs will die: Teams operate at different paces.

- Conversion teams: 6–8 week experiment cycles;

- Marketing teams: Prepare, campaign, prepare, campaign;

- Product teams: 2-week sprints.

- Conversion/Optimization teams might become a nightmare for marketing and product teams.

- Optimization teams have a lot of (too much) pride:

- They tell other teams what they are doing wrong.

- Can be self-righteous and overly critical.

- Optimization teams should have more humility.

- Why it might actually be a good idea to kill optimization teams:

- Are we optimizing leaky buckets? Product and marketing teams should be involved.

- The term “conversion rate optimization” doesn’t really describe what we do—we help clients achieve their business goals.

- Why are we always focusing on the web? Optimizing email, social, etc., is also optimization.

- Optimization is the KPI you’re trying to impact.

- This sometimes includes clicks, behaviors, transactions per user, etc.

- You should optimize for potential lifetime value; there should be universal KPIs that all teams optimize for.

- Optimization is all about effects—getting more results.

- How do we do this? All departments should work together to create a “validation center of excellence.”

- Enable evidence-based growth at the heart of the company—democratize research so that product teams don’t have to worry about statistics.

- Prioritization for implementation = Quality of Evidence x Potential Effect on Shared Goals.

- Don’t be a pusher; be an enabler.
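
To make the prioritization formula concrete, here is a minimal sketch. The 0-to-1 scoring scale, the `Idea` class, and the backlog entries are all assumptions made up for illustration; the talk only gave the formula itself.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    evidence_quality: float   # hypothetical 0-1 scale: how strong is the evidence?
    potential_effect: float   # hypothetical 0-1 scale: expected effect on the shared goal


def priority(idea: Idea) -> float:
    """Priority = quality of evidence x potential effect on shared goals."""
    return idea.evidence_quality * idea.potential_effect


def rank(ideas: list[Idea]) -> list[Idea]:
    """Return ideas sorted from highest to lowest priority."""
    return sorted(ideas, key=priority, reverse=True)


# Illustrative backlog; the names and scores are invented.
backlog = [
    Idea("Shorter checkout form", evidence_quality=0.9, potential_effect=0.4),
    Idea("New homepage hero", evidence_quality=0.3, potential_effect=0.8),
    Idea("Clearer pricing table", evidence_quality=0.7, potential_effect=0.7),
]

for idea in rank(backlog):
    print(f"{idea.name}: {priority(idea):.2f}")
```

Because the two scores are multiplied, an idea with a big potential effect but weak evidence can still rank below a modest idea that is well supported.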

Tammy Duggan-Herd: “Pitfalls of the Unaware: How the Misapplication of Psychology is Damaging Your Conversion Rates”

- Understanding human behavior is complicated, acting on it even more so.

- You can damage conversions, marketing, and your brand if you follow the wrong principles.

- The root of the problem is how research gets to the public:

- Starts with a researcher who’s under pressure to produce stuff that gets traction in media/academic journals.

- Scientific journals have a 70% rejection rate—very little makes it out.

- When something reaches publication, press releases focus on promotion, not accuracy.

- Media continues to stretch claims; bloggers make the problem worse.

- Ultimately, we consume on Twitter—20 pages reduced to 160 characters.

- It’s a game of telephone—best-case scenario is that the distorted information has no effect; worst-case scenario is that it generates the opposite effect.

- Pitfalls of the unaware practitioner:

- Oversimplification. Media simplifies results because they need to be concise, catchy; qualifiers and nuance get dropped.

- Overestimating the size of effects. Statistical significance does not equal practical significance—the magnitude of the effect.

- Overgeneralizing. We often disregard study limitations, which matter because most studies are conducted with undergraduates in a laboratory (not representative).

- Isolating findings. Media treats single findings as definitive; no single study can say much on its own; additional variables could negate/reverse effect.

- You need to know how to avoid the pitfalls:

- Read the original study. What was actually found? What was the size of the effect? How was it conducted?

- Don’t get caught up in hacks.

- Test it for yourself. Be aware of how it could go wrong/backfire.

Brian Cugelman: “Consumer Psychology, Dopamine, and Conversion Design”

- Dopamine mythology claims that:

- Dopamine is the pleasure or happiness neurotransmitter.

- Variable rewards are so powerful that users can’t resist them.

- Companies like Facebook manipulate people with dopamine.

- If these claims were true:

- Social media would be pure pleasure.

- We’d all be addicts, hooked by evil manipulators.

- Most of humanity would lack any self-control.

- In reality, dopamine makes people feel energized and curious.

- It provides an emotional reward that fades fast, leaving people dissatisfied.

- People habituate to triggers, which stop triggering dopamine.

- Dopamine rewards reinforce behaviors.

- Too little dopamine is associated with motor impairment.

- How do we trigger our audience’s dopamine? Provide a digital promise or surprise:

- Virtual welcome gift;

- Get-rich-fast offers;

- Mystery boxes;

- Auctions;

- Lucky draw;

- Ads: “What these child stars look like today”;

- BuzzFeed surveys/quizzes, e.g. Which dog are you?

- How do we use this in digital marketing?

- Visual hints of gifts and rewards;

- Mystery prizes;

- Editorial hooks;

- Value propositions;

- Benefit statements;

- Any hints of rewards.

- The brain habituates to old rewards (e.g. banner blindness).

- How do you overcome habituation?

- Offer more, better, bigger.

- Use novelty.

- Include surprises.

- Hold back the full story.

- Reduce outreach frequency.

- Add random gifts.

- Repackage today’s materials.

- Add innovations.

- Use variable rewards.

- Use uncertainty to your advantage:

- If you’re giving out something with shipping, use a random reward with boosted anticipation.

- Use expectation management, be straightforward, deliver on your promises, and you’ll have dopamine that’s well deserved.

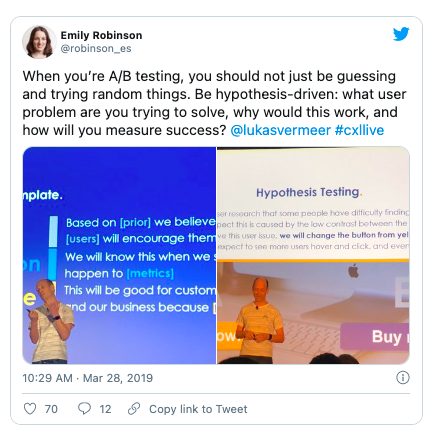

Lukas Vermeer: “Democratizing Online Experiments at Booking.com”

- Whenever someone shows you data, your first question should be “Where and how was this data acquired/collected?”

- Some people misunderstand data-based validation as a limitation to creative freedom.

- At Booking, there is trust in the validity of the data, and decisions are visible to everyone. This enables a continuous, individual decision-making process.

- “The plural of anecdote is not data”—we need evidence to make decisions.

- Avoid guessing games (e.g. “Which one of the two button colors is better?”):

- We should be doing hypothesis testing instead, which includes a much more detailed description of the thinking behind the experiment.

- It doesn’t mean you can’t test button colors but that you have a much better understanding of why you’re doing it and which variations you should test.

- Hypothesis template: Theory, Validation, Objective.

- Challenging your own understanding of the product via experimentation is critical:

- It flips the “all tests should win” thinking upside down—“9/10 tests fail” (VWO), but learning is never a failure.

- Find the smallest steps to test the riskiest assumptions quickly.

Ryan Thomas: “Optimizing for Email Signups”

- Email capture can sometimes work against your primary goals

- Ex. Optimizing a contest pop-up resulted in over 300% lift in email signups, but e-commerce conversion rate and AOV dropped

- The fix: replace the contest with an offer that encourages the sale now (combination of welcome and exit offer of a small discount)

- Similar uplift in email signups plus a boost in transaction conversion rate and revenue

- Why focus on email signups?

- Look at the data: email traffic often performs best; Time Lag and Path Length example—two-thirds convert same day but less than half on first touch point

- Build a relationship with your customer

- Testing Strategy: Independent KPI (unlikely to conflict with other tests); Low Traffic: may be able to test here when you don’t have enough macro-conversions; Testing as learning: Try out messaging and motivation

- ResearchXL process

- Heuristic Analysis

- Mouse Tracking

- Web Analytics

- User Testing

- Qualitative Surveys

- Technical Analysis

- Customer surveys

- Open-ended, non-leading questions

- Find out about motivations, decision-making process, hesitations, frustrations

- Insights can come from anywhere

- Correlate data points to prioritize your roadmap (PXL)

- More examples:

- Optimizing a contest popup for a frequent purchase product resulted in a lift in email signups without affecting ecommerce metrics

- Adding a welcome offer where none existed before increased email signups 95% and created a small lift in transaction conversion rate

- Takeaways:

- Align your strategy with what’s important to the business—no vanity metrics.

- Try out different tactics, tools, offers, and designs.

- Do your research!

Nina Bayatti: “Was It Really a Winner? The Down-Funnel Data You Should Be Tracking”

- There are tons of metrics you can monitor:

- Conversion rate;

- Bounce rate;

- Click-through rate;

- Pageviews;

- Lead captures;

- Purchase conversion rate.

- But they don’t tell the whole story.

- To reach confident conclusions, you need to analyze down-funnel data.

- At ClassPass, they see referrals as important for bringing in new clients, so it makes sense to incentivize referrals.

- They tested offering 10 free credits for working out with a buddy.

- Invites went up 50%; 35% lift in referral acquisition.

- Then they noticed that they’d been cannibalizing other channels—people converting as referrals were leads already acquired from other channels.

- Setting up your experiments for success:

- Define success metrics.

- Consider all funnel steps when determining sample size (i.e. make it large enough for bottom-of-funnel analysis, too).

- Identify and iterate down-funnel levers.

- Incentives work, but they might work too well and convert people who are not really into the service/product or cannibalize other channels.

- Always consider the impact of your winning tests on your growth and cost.

Eric Allen: “Losing Tests Can Be Winners, Too. How to Value and Learn from a Losing Experiment.”

- The cost of experimentation—you hope the upside outweighs the cost.

- Why does it hurt to lose? Losses are bigger in our mind than gains.

- Learning over knowledge: Knowledge is finite; design every experiment in a way that you can derive learnings, even from losses.

- Ancestry.com redesign test failures:

- First test: Learned we changed too much, needed to isolate variables.

- Second test: Consumers aren’t understanding the difference between packages and are just selecting the lowest price.

- Third test: The offer pages are too complex and consumers are spending too much time on the page.

- Fourth test: Too many people are taking a short-term package now.

- Fifth test: This just isn’t working. Revert to original.

- Learn to reframe loss: “A/B tests are our cost of tuition. It costs money to learn.”

- Impact of Testing:

- Baseline run rate: $100 million per year;

- Test lift: 10%;

- Test duration: 90 days (25%);

- Negative impact: $2.5 million;

- Total revenue: $97.5 million.

- Impact of Implementation without testing:

- Baseline run rate: $100 million per year;

- Test lift: 10%;

- Test duration: 12 months;

- Negative impact: $10 million;

- Total revenue: $90 million.

- Total savings with testing: $7.5 million.

- Learning from a series of tests can turn losses into a win.

- Takeaways:

- James Lind: There is a cost, but also an upside.

- Jeff Bezos: Keep running experiments.

- Jay-Z: Losses are lessons.
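
The testing-cost arithmetic from this session can be reproduced in a few lines. This is a sketch under stated assumptions: it reads the 10% "test lift" as a 10% revenue decrease (which is what the negative-impact figures imply), and the function and variable names are made up for illustration.

```python
def revenue_with_change(baseline: float, effect: float, exposure_fraction: float) -> float:
    """Annual revenue when a change with relative `effect` is live for
    `exposure_fraction` of the year (0.25 = 90 days, 1.0 = 12 months)."""
    return baseline * (1 + effect * exposure_fraction)


BASELINE = 100_000_000   # baseline run rate, dollars per year
EFFECT = -0.10           # assumption: the losing variation costs 10% of revenue

tested = revenue_with_change(BASELINE, EFFECT, 0.25)    # loss caught by a 90-day test
untested = revenue_with_change(BASELINE, EFFECT, 1.0)   # change shipped without testing

print(f"Revenue with testing:    ${tested:,.0f}")
print(f"Revenue without testing: ${untested:,.0f}")
print(f"Savings from testing:    ${tested - untested:,.0f}")
```

Like the figures above, this assumes the whole audience sees the losing variation while the test runs. With a 50/50 split, only half the traffic would be exposed, so the cost of testing would be lower still.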

Stefanie Lambert: “Real Talk: Tough Lessons Learned When Building an Optimization Program”

- Sensitivity to organizational culture will make everyone’s life easier.

- If a company does things in a different way, it might mean you have to fit in.

- The devil is in the details.

- We needed to relaunch a test several times because of moving too fast.

- For a simple test, connecting Google Analytics with Google Optimize would’ve taken a couple seconds, but because we didn’t, we wasted two weeks.

- If it’s not backed by data, it probably won’t work.

- Your testing queue should be mostly data-backed tests.

- A clothing line really wanted to show the quality of their clothing and make their images larger. But the idea didn’t come from data.

- When we showed larger images, there were fewer products on the page, which lowered CTR.

- Quantitative and qualitative data are necessary for exceptional results.

- After rolling out a test, we were disappointed by a 20% decrease in form initiations.

- The new form was better-looking and had done well elsewhere on the site.

- We filtered session recordings for use cases of the control and the variation.

- In the new variation, the form distinguished itself enough to make the visitors recognize it as a form, so more users left, decreasing form starts.

- Curiosity didn’t kill the cat.

- Stand out by caring enough to ask hard questions. (e.g. “This is really good, but could it be better?”)

- When I started out, I felt uncomfortable basing large business decisions on data from a tool.

- I had to learn statistics to trust the data.

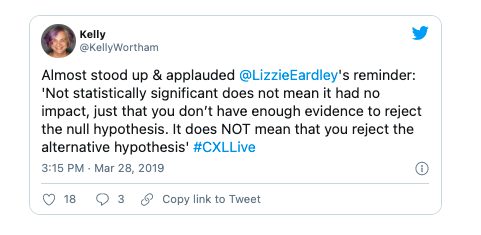

Lizzie Eardley: “Chasing Statistical Ghosts in Experimentation”

- Statistical ghost: When you think your test has impacted your metric, but in reality there’s no impact. You’re being fooled by the data.

- Ran 100,000 A/A tests: 60% of the A/A tests measured a difference of at least 1%.

- “Not statistically significant” does not mean it had no impact, just that you don’t have enough evidence to reject the null hypothesis.

- Four causes of statistical ghosts:

- Multiple comparisons;

- Peeking;

- Bad metric;

- Almost significant.

- Multiple comparisons:

- Need to account for this by adjusting the p-value.

- The false positive chance applies to each comparison.

- Ex. 1 comparison: 5% chance of false positive; 8 comparisons, 34% chance of false positive!

- Base hypothesis on one key metric, then choose secondary metrics.

- Peeking:

- Looking at the data and taking actions before the pre-determined end of the experiment.

- This can have a huge effect on false positive rate.

- Good reasons to peek: Check for bugs, stop disasters, efficiency.

- Bad metric:

- A good metric is meaningful, interpretable, sensitive, fit for the test.

- Meaningful: Captures what you intended to change.

- Interpretable: Easy to tell how a change altered user behavior.

- Sensitive: Can detect smaller changes faster.

- Fit for the test: Normal tests assume independence and that error is normally distributed.

- Almost significant:

- The ghost of temptation. People want to believe what they are hoping for.

- There’s no such thing as “almost” significant!
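
The multiple-comparisons numbers are easy to check yourself. The sketch below assumes the comparisons are independent and uses a Bonferroni correction as one common way to adjust the threshold; the talk did not say which adjustment to use, and the function names are made up for illustration.

```python
def familywise_false_positive_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison threshold that keeps the overall rate at or below alpha."""
    return alpha / comparisons


for k in (1, 8):
    rate = familywise_false_positive_rate(0.05, k)
    print(f"{k} comparison(s): {rate:.0%} chance of a false positive")

adjusted = bonferroni_alpha(0.05, 8)
print(f"Bonferroni threshold for 8 comparisons: {adjusted:.5f}")
print(f"Resulting overall rate: {familywise_false_positive_rate(adjusted, 8):.1%}")
```

The stricter per-comparison threshold brings the overall false positive chance back under 5%, at the price of needing more data to detect a real effect on any single metric.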

Emily Robinson: “6 Guidelines for A/B Testing”

- Steps of the experimentation process:

- Process. You can’t do everything, and the things you’re not doing are still important.

- Creating.

- Analyzing.

- Decision Making. How to decide what to do next?

- The less data, the stronger the opinions. Our opinions are often wrong. Don’t let the HiPPOs (Highest Paid Person’s Opinion) kill your ideas; experiment instead.

- Start with historical data: What’s the population of your test idea? What’s your current conversion rate and estimated increase?

- Run power analysis! Important for determining the stopping point and avoiding a false negative. (Eighty percent power means there’s an 80% chance you will detect the change if it’s there.)

- If you try multiple changes at once, it will be impossible to figure out what didn’t work; work on smaller incremental tests instead.

- Etsy started by releasing all changes after tests concluded.

- Moved to a more in-depth process for implementing A/B testing changes with smaller cycles.

- Prototype ideas before releasing them.

- Have a scientist involved who can make sure that you’re tracking the right metrics. They can also help you with power calculations and iterate ideas.

- Decision making:

- What is the technical complexity and debt you’re adding?

- Is it a foundational feature?

- Could there be a negative impact that’s too small to detect?

- Be careful of launching on neutral—not having a solid strategy or enough data to support decision-making.
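
For the power analysis step, here is a rough sketch of how the current conversion rate and estimated increase turn into a sample size. It uses the standard normal-approximation formula for a two-sided two-proportion test, which is an assumption on our part (the talk did not specify a method), and the example rates are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in each variant for a two-sided
    two-proportion z-test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # significance threshold
    z_power = NormalDist().inv_cdf(power)           # desired power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical numbers: 10% current conversion rate, hoping for 12%.
n = sample_size_per_variant(0.10, 0.12)
print(f"Visitors needed per variant: {n}")
```

Smaller expected increases or higher power push the required sample size up quickly, which is why the estimate belongs at the start of the process rather than after the test is running.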

Valerie Kroll: “How to Present Test Results to Inspire Action”

- When presenting data, the key question is “What do you want your audience to walk away with?”

- Template formula:

- Why we tested;

- What we tested;

- Outcomes;

- Learnings;

- What’s next.

- Your slides are not your presentation. You are the presentation.

- State your business case:

- Where are you testing this?

- Who’s the audience?

- What will you measure?

- State the thesis. (e.g. “Will a value proposition chat prompt increase lead capture?”)

- Define how the experiment was measured:

- Hypothesis statement;

- Primary KPI;

- Secondary KPIs.

- Make your presentations interactive: Run a poll—ask people what they think would win.

- Presenting results:

- Impact on the primary KPI;

- One visualization of primary KPI; people will understand your results better.

- Segmentation to show what else you found.

- Keep learnings and actions side-by-side. (This should take 40–50% of your presentation time.)

- If something isn’t adding to your presentation, it takes away from it (e.g. statistics, tech information, etc.).

- Have a predictable template. People know what to expect. Makes your work faster.

André Morys: “We’re All Gonna Die: Why ‘Optimization’ Is The Acceleration Of Evolution”

- Thatcher Effect (1980, live experiment): It’s difficult to recognize changes to an upside-down photo of a face (Margaret Thatcher).

- If you don’t know the pattern, you might not recognize it—same holds for CRO.

- You need to change the perspective to see the truth behind things.

- Ex. Why is Commerzbank dying?

- Frankly, the experience sucks.

- But why? They have designers, CROs, analysts.

- Ignorant HiPPOs—management does not feel the pain.

- “Truth—more precisely, an accurate understanding of reality—is the essential foundation for producing good outcomes.”

- Do not talk about disruptive business models; the ones who disrupt don’t talk about it. They are too busy disrupting.

- Digital growth does not come from technology; it’s based on great customer experience.

- If there is no intention to test the customer experience, you will not see results.

- Optimization is by nature agile: CRO yields new data for the team to prove that whatever the organization did was good or bad.

- Good optimizers generate ideas that are customer-centric.

- The Amazon advantage is they are continuously generating new insights.

- It’s a wave—the agile Tsunami. (You can’t see it.)

- Infinity Optimization Process: Analyze, Prioritize, Validate.

- Change the management mindset.

- Management doesn’t care about what changed on the website.

- Present the ROI of the experimentation program to management.

- Tip: Become friends with the CFO.

- C-Suite roundtable meetings—allow the management to discuss their problems and then be aspirational (i.e. sell the program).

- Management wants big things! However, they forget that smaller changes yield larger results (compound effect).

John Ekman: “What’s Broken in Digital Transformation (and How to Fix It)”

- “Digital transformation” is not a good goal:

- Goals are to “get the product to market fast” or “good customer service.”

- We (wrongly) set the goal of “digital transformation” when the goals shouldn’t change, only the tools to achieve them.

- The five ways of digital transformation:

- Digitize the product;

- Wrap a digital service layer around product;

- Digitize processes “behind the scenes”;

- Digitize marketing, sales, and retention efforts;

- Innovate new digital products.

- We must select and prioritize among the five ways of digital transformation; even digital leaders may excel in one or two ways but not others.

- Leadership thinks it’s spending tons of money; practitioners feel like they have no resources.

- The reality is that it’s hockey stick growth—you have to spend tons of money before you see any results (and then the results are exponential).

- Allocate budget to new initiatives before allocating to ongoing initiatives.

- Goals and evaluation misaligned:

- Small projects aren’t organized within the big picture.

- With digital transformation, we don’t know the scale of the return or necessary investment; looking only at ROI doesn’t propel you into the future.

- Solutions: OKR (Google), innovation accounting (Eric Ries), metered funding (VCs).

- Three digital superpowers:

- Ability to (1) listen to customers;

- When you listen, you can (2) act; otherwise, you act on the wrong info.

- If you have both, you can (3) scale; otherwise, you scale the wrong thing.

Will Critchlow: “What If Your Winning CRO Tests Are Screwing Up Your Search Traffic? Or Your SEO Changes Are Wrecking Your Conversion Rate?”

- The general worry is that SEO is going to screw up CRO, not vice versa.

- CRO deals with bottom of the funnel (more people converting to sales).

- SEO deals with top of the funnel (adding more people to the funnel).

- Lots of CRO pages aren’t even indexed, but many are, and CRO tests can hurt organic traffic. (We’ve seen it happen.)

- Experiment: SEOs and non-digital marketers were asked to evaluate which of two pages might rank higher:

- No one managed to reach 50% accuracy in the prediction.

- SEOs were only marginally better than non-SEOs.

- So how do we make better predictions? SEO testing (DistilledODN).

- Changes that work for one niche may not work for another—we have to test.

- SEO “best practices” are site/industry-specific.

- UX is a ranking factor (maybe):

- Google trains machine-learning models to like the same things that people like.

- But Google isn’t perfect: not every algorithm change achieves that goal, but that’s what they’re trying to do.

- Thus, we should be building SEO hypotheses from UX fundamentals.

- Ultimately we need to—and will benefit from—testing the impact of SEO and CRO at the same time. We’re on the same team.

Brennan Dunn: “How To Deliver Fully Personalized Experiences At Scale”

- Many people “think” they personalize, but there’s a lot of non-useful personalization out there.

- Things I actually want to be personalized on: my intent, my actions, my knowledge level.

- Two primary jobs for segmentation:

- Making your message more relevant, more specific;

- Using segmentation to improve reporting.

- In segmentation, the two things I care about are the “who” and the “what”: “I am a [blank] and I need your help with [blank].”

- How to automatically segment people:

- Intent/Behavior

- What are the last 10–20 articles someone read on our site?

- Original landing pages.

- Ad they clicked (especially true for Facebook Ads).

- Referrals.

- Actions

- Purchases.

- Lead magnets.

- Webinars.

- Surveys

- Trigger links: “What are you most focused on right now?”

- Surveys. “What are you looking to do on our site today?”

- Hosted surveys.

- Clearbit

- Intent/Behavior

- What if you don’t know how you should segment?

- When someone joins an email course or downloads a lead magnet, ask them point blank. (e.g. “What do you need to get from this email course?”)

- With personalization, we can use niche messaging without actually being a niche business.

- Less thinking, more engagement = more conversions.

- At the end of the day, personalization is relevance.

Chad Sanderson: “Aligning Experimentation Across Product Development and Marketing”

- Sometimes marketing and engineering departments want to do parallel experiments and run into conflicts.

- People closer to the product have more pull in the business.

- Different types of companies: Tech First (Bing, LinkedIn), Tech Second (Booking.com, Grubhub), Tech Third (Sephora, Target).

- Depending on the business type, you have differences:

- Optimization. The feature would not exist without an experimentation tool; experiment design can occur outside the sprint cycle.

- Validation. The feature would exist regardless of experimentation; experiment design is part of the development cycle.

- Snapchat’s big redesign was bashed in 83% of user reviews, an example of a catastrophe that a validation-based process could’ve avoided.

- Page Speed Kills. Is optimization killing conversion rates by 5% or more? Client-side experimentation technologies add at least 1,000ms of latency, and every 100ms of latency causes a 0.5% decrease in revenue per visitor (RPV). At that rate, 1,000ms of added latency costs about 5%.

- Steps to success:

- Understand your current structure: optimization or validation.

- Figure out where your coverage is lacking. Is ROI tracked? Is product shipped with experiments?

- Bridge the gap across people, departments—get mutual goals for program metrics.

- Establish a forum for sharing results and working on joint projects (results at a global program level, not individual tests).

- Monthly meetings to review metrics consistently and solve opposing forces.

Natasha Wahid: “How to Get Your Entire Organization Psyched About Experimentation”

- Culture is a factor in successful experimentation. Take this scenario:

- A one-woman optimization champion starts sourcing ideas from everyone for experimentation.

- She gets tons of ideas, but because she’s a one-woman show, she gets snowed under.

- After a time, it becomes a joke—a place where ideas go to die.

- How do we do better?

- Inspire—the spark. Get people motivated to act.

- Educate—training. Formal or informal.

- Inform—communicating knowledge, action.

- The core team owns the program. They’re focused on getting executive buy-in and building momentum.

- Ex. Envoy

- Michelle highlighted what the company was missing by not optimizing their core funnels.

- Recruited a lead engineer and a designer. They also brought in an enabling partner from an outside agency.

- Everyone could see the impact of the experiment. The engineer hardcoded the winning variation right away.

- Ex. Square

- One of the highest visibility product teams had gone through a redesign that tanked.

- Hosted workshops that focused on changing people’s mindsets around experimentation.

- Focused on fostering collaboration, asking teams if there were insights from other experiments that might be relevant for the current team.

- RACI model:

- R – responsible – doing the actual work;

- A – accountable – owner of the project;

- C – consulted – providing information on managing the process;

- I – informed – people who just need to be kept informed.

- Example for RACI communication:

- Owner: Experimentation champion;

- Message: Experiment X has been launched;

- Channel: Slack notification;

- Audience: Engineering team;

- Timing: Automatically when experiment is launched in tool.

Conclusion

This post is 21 half-hour sessions condensed into less than 5,000 words. If you want the full experience—inside and outside the conference sessions—you simply have to be there.

The good news? You don’t need to wait a full year. Join us at Digital Elite Camp in Estonia on June 13–15.

List segmentation has to be one of the ways that I’ve found the majority of companies go wrong in their approach.

It’s important to not just follow up differently for different leads or customers, but to also follow up in a variety of ways for each of those different leads and customers.

If a lead sees sales page A and doesn’t convert, they shouldn’t see sales page A more than maybe two or three more times. If they still don’t convert, a specially designed sales page B, written from a different angle and customized to their experience, should be shown to those who didn’t buy through sales page A.

There is so much more than what meets the eye into how to raise conversion effectively.

Businesses and large companies simply need to dig deeper and create the best personalized customer experiences possible. Do that, and they’ll quite quickly develop an ever-expanding brand that is loved.