At a certain point, the results from your A/B testing will likely slow down. Even after dozens of small iterations, the needle just won’t move.

Reaching diminishing returns is never fun. But what exactly does it mean? In most cases, you've probably hit a local maximum.

So the question is, what do you do now?

If you've hit a local maximum, it doesn't mean you should just give up. You can absolutely still get better conversion rates. While it's tough to do, it's not impossible. But first, you must understand how the local maximum affects your efforts moving forward.


What is a local maximum?

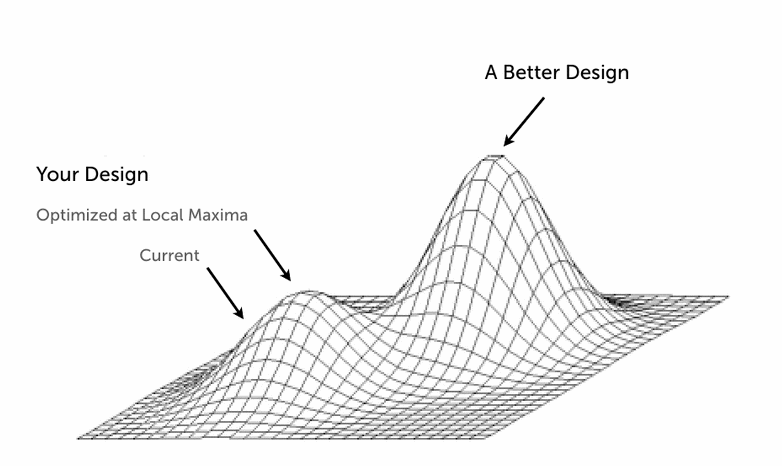

A local maximum is a point where a mathematical function reaches its maximum value within a given range.

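This point is the local maximum of the function, although it may not be the maximum of the whole function (the global maximum). For a smooth function, you can find it with the derivative: a local maximum typically sits where the derivative equals zero and changes sign from positive to negative.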
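To make the derivative idea concrete, here is a minimal sketch in Python. The function `f` is a made-up example with two peaks, and the helper `local_maxima` is hypothetical: it scans an interval for places where the derivative flips from positive to negative, then narrows each one down by bisection. It illustrates the math only; it is not how any testing tool works.

```python
def f(x: float) -> float:
    """Hypothetical function with two peaks: a lower one near x = -1.35, a higher one near x = 1.47."""
    return -x**4 + 4 * x**2 + x


def df(x: float) -> float:
    """Analytic derivative of f."""
    return -4 * x**3 + 8 * x + 1


def local_maxima(f, df, lo, hi, steps=10_000):
    """Scan [lo, hi] for points where df changes sign from + to -, then refine each by bisection."""
    maxima = []
    h = (hi - lo) / steps
    prev_x, prev_d = lo, df(lo)
    for i in range(1, steps + 1):
        x = lo + i * h
        d = df(x)
        if prev_d > 0 >= d:  # rising, then flat or falling: a peak lies in [prev_x, x]
            a, b = prev_x, x
            for _ in range(60):  # bisection keeps df(a) > 0 and df(b) <= 0
                mid = (a + b) / 2
                if df(mid) > 0:
                    a = mid
                else:
                    b = mid
            maxima.append((a + b) / 2)
        prev_x, prev_d = x, d
    return maxima


peaks = local_maxima(f, df, lo=-3.0, hi=3.0)
for x in peaks:
    print(f"local maximum at x = {x:.3f}, f(x) = {f(x):.3f}")
print(f"highest peak: x = {max(peaks, key=f):.3f}")
```

Both points are local maxima, but only the one near x = 1.473 is the highest in the range. Standing on the peak near x = -1.347, the derivative is zero just the same, so "nothing nearby is better" says nothing about whether a taller peak exists elsewhere.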

Now, let's apply this definition to a local maximum in A/B testing. Despite your evolutionary design approach, with many small changes and tests, there comes a time when your gains become virtually non-existent.

That point is called the local maximum. If you've got a computer science background, you'll recognize the idea from the hill climbing algorithm.

In essence, this is what a local maximum means in testing: you've reached the peak that small changes can get you to. At this point things can't get much better; even if you make a thousand small tweaks, you can only improve so much.

Eric Ries summed up the problem well:

“It goes like this: whenever you’re not sure what to do, try something small, at random, and see if that makes things a little bit better. If it does, keep doing more of that, and if it doesn’t, try something else random and start over. Imagine climbing a hill this way; it’d work with your eyes closed. Just keep seeking higher and higher terrain, and rotate a bit whenever you feel yourself going down. But what if you’re climbing a hill that is in front of a mountain? When you get to the top of the hill, there’s no small step you can take that will get you on the right path up the mountain. That’s the local maximum. All optimization techniques get stuck in this position.”
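The procedure Ries describes is stochastic hill climbing, and a few lines of Python are enough to watch it get stuck. This is a sketch under loud assumptions: `conversion_landscape` is a made-up, noise-free function standing in for "how well the site converts", and the step size and iteration count are arbitrary. Real A/B test results are noisy, which only makes things harder.

```python
import random


def conversion_landscape(x: float) -> float:
    """Hypothetical 'hill in front of a mountain': a low peak near x = -1.35, a taller one near x = 1.47."""
    return -x**4 + 4 * x**2 + x


def hill_climb(f, start, step=0.05, iterations=5_000, seed=0):
    """Stochastic hill climbing: try a small random change, keep it only if it scores higher."""
    rng = random.Random(seed)
    x, best = start, f(start)
    for _ in range(iterations):
        candidate = x + rng.uniform(-step, step)  # something small, at random
        score = f(candidate)
        if score > best:  # it made things a little better, so keep it
            x, best = candidate, score
        # otherwise discard it and try another random tweak from the same spot
    return x, best


for start in (-2.0, 2.0):
    x, score = hill_climb(conversion_landscape, start)
    print(f"start {start:+.1f} -> stopped at x = {x:.2f}, score = {score:.2f}")
```

The run that starts at -2.0 stalls on the lower peak near x = -1.35, and no number of extra iterations helps: every small step from there goes downhill and is rejected. The run that starts at 2.0 happens to be on the taller hill and climbs it. Which peak you reach depends on where you started, not on how hard you tried.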

It's tough to tell if you've hit a local maximum, because you can't necessarily see the global maximum. In fact, the problem has been described as "climbing Mount Everest in a thick fog with amnesia."

So what do you do?

Why does a local maximum occur?

You can reach the local maximum for any number of reasons. It could be that your overall website design is a constraint (it looks like Grandma designed it). Or it could be that you're relying too much on iterative A/B testing, as Joshua Porter mentioned in a 52 Weeks of UX post:

“The local maximum occurs frequently when UX practitioners rely too much on A/B testing or other testing approaches to make improvements. This type of design is typified by Google and Amazon…they do lots and lots of testing, but rarely make large changes.”

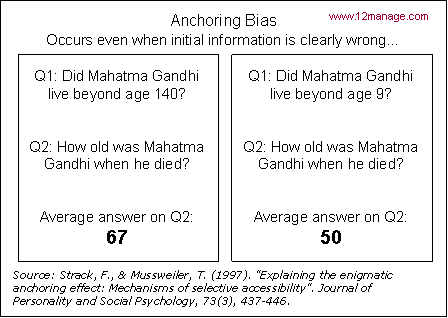

A psychological underpinning of the local maximum problem is anchoring: the human tendency to rely too heavily on the first piece of information offered (the 'anchor') when making decisions.

Anchoring limits

During decision making, anchoring (also referred to as focalism) occurs when individuals use an initial piece of information to make subsequent judgments.

You might be obsessed with testing static images against sliders on the home page, or testing 13 different call-to-action button colors, thinking that's where the problem is because it came up in user testing.

Sometimes you can optimize locally (get the button right), but if you’re near a local maximum, you need to push larger changes. If you’re anchored, you are limited by that initial piece of data and might never try a radical redesign or innovative testing.

How to move past the local maximum

If your small changes are failing to move the needle, then you need to focus on making big changes in the right places. To make larger gains, you have to try more radical experiments. Generally, there are two ways of pushing past the local maximum, both of which are predicated on running larger, more experimental tests than iterative ones:

- Innovative Testing;

- Radical Redesign;
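Both approaches amount to taking bigger steps than iterative testing does. As a loose analogy (not a model of real experimentation), here is the hill climber from Ries' description with one change: now and then it proposes a big jump to a random point anywhere in the space, similar in spirit to random-restart hill climbing. The landscape `conversion_landscape`, the range, and `jump_prob` are all made-up assumptions, and like before, a change is kept only if it scores higher.

```python
import random


def conversion_landscape(x: float) -> float:
    """Hypothetical two-peak landscape: a low peak near x = -1.35, a taller one near x = 1.47."""
    return -x**4 + 4 * x**2 + x


def climb_with_big_jumps(f, start, lo=-3.0, hi=3.0, step=0.05,
                         jump_prob=0.05, iterations=5_000, seed=0):
    """Mostly small random tweaks, occasionally a jump anywhere in [lo, hi]; keep only improvements."""
    rng = random.Random(seed)
    x, best = start, f(start)
    for _ in range(iterations):
        if rng.random() < jump_prob:
            candidate = rng.uniform(lo, hi)  # a big, "innovative" change
        else:
            candidate = x + rng.uniform(-step, step)  # a small, "iterative" tweak
        score = f(candidate)
        if score > best:  # still only keep changes that win
            x, best = candidate, score
    return x, best


# Start exactly where plain hill climbing gets stuck: on the lower peak.
x, score = climb_with_big_jumps(conversion_landscape, start=-1.347)
print(f"ended at x = {x:.2f}, score = {score:.2f}")
```

Most big jumps land somewhere worse and are thrown away, which mirrors the risk of radical changes. But a jump only has to land on the taller hill once; after that, the small tweaks take over and climb it, which is why innovative changes and iterative optimization work best together.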

Iterative vs innovative testing

CRO agency PRWD groups A/B testing into two types: innovative and iterative testing. Innovative testing is a sort of middle ground between the extremes of radical redesign and the conservatism of iterative testing. So instead of a radical redesign, you can run innovative tests.

Iterative testing

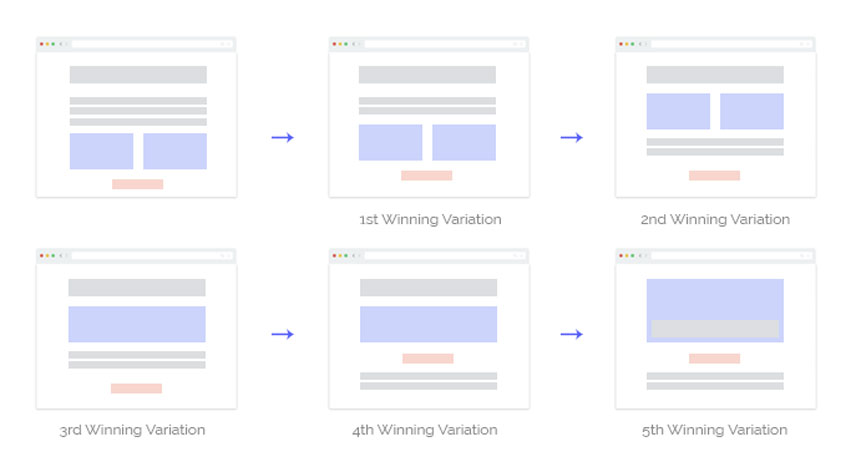

Iterative testing is the kind you're familiar with; most tests run today fall into this category. Iterative testing changes small things, like headlines, copy, images, and CTAs, in an evolutionary way.

Of course, iterative testing can produce admirable results. It’s all about continuous iteration and improvement, small changes that lead to compound growth. Here’s a visualization of iterative testing from Experiment Engine:

The benefits of research-driven iterative testing are many:

- Quick wins and low hanging fruit;

- Get buy-in from your organization by demonstrating ROI;

- Develop a culture of testing and optimization;

- Learn to use testing tools, and learn about the optimization process in general;

- Build momentum toward larger changes.

In fact, one of the biggest benefits is organizational. As Matt Lacey wrote, iterative testing allows you to “iron out your process and get some early wins (and support) while you build up to larger, more innovative testing.”

On the other hand, innovative tests have both more potential impact and more risk, “which is why it’s advisable to start by running iterative tests,” according to the PRWD article. “This will allow you to perfect your process and begin gathering learnings and insights about your visitors, which can then feed into future innovative tests.”

Innovative testing

Innovative testing is an essential part of a holistic growth process. Once you've built a culture of testing with iterative testing, it might be time to shift up into more innovative tests. As Paul Rouke from PRWD said, "intelligent, innovative testing is essential in exploiting your growth potential."

If iterative testing involves making small changes, innovative testing changes the big shit. These are things like:

- Full homepage redesigns;

- Full landing page redesigns;

- Changing a business proposition;

- Reducing steps within a flow.

Innovative testing (or radical testing) is often predicated on more qualitative data, as well as validated learnings from your iterative testing. It's used to create huge business impact, not just to fine-tune your website's performance with small tweaks.

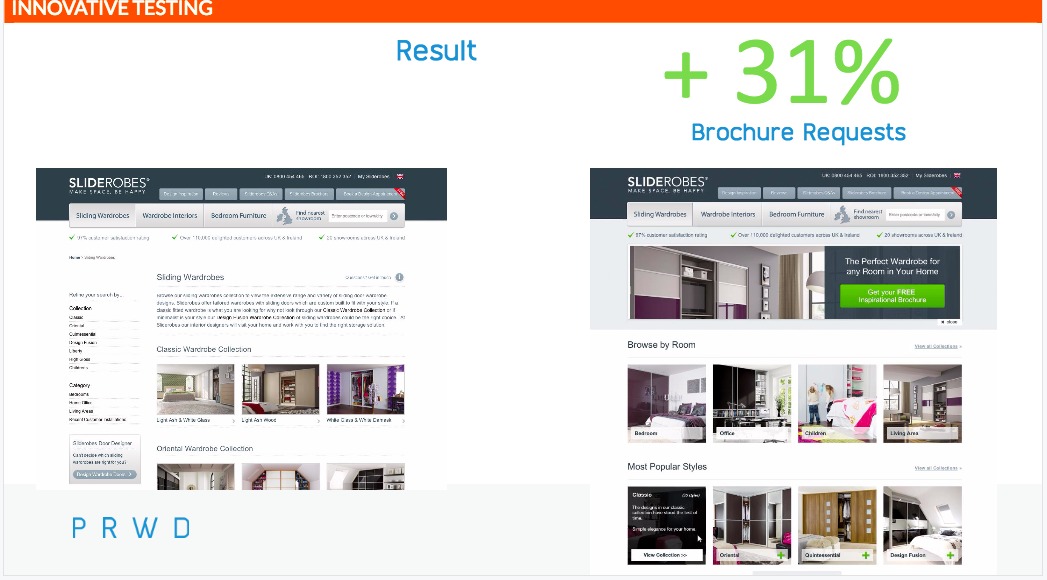

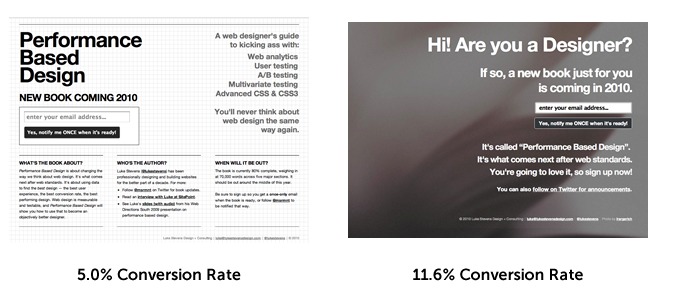

Here’s an example of innovative testing from PRWD:

Joshua Porter also gives the example of a page-level design test in a HubSpot article:

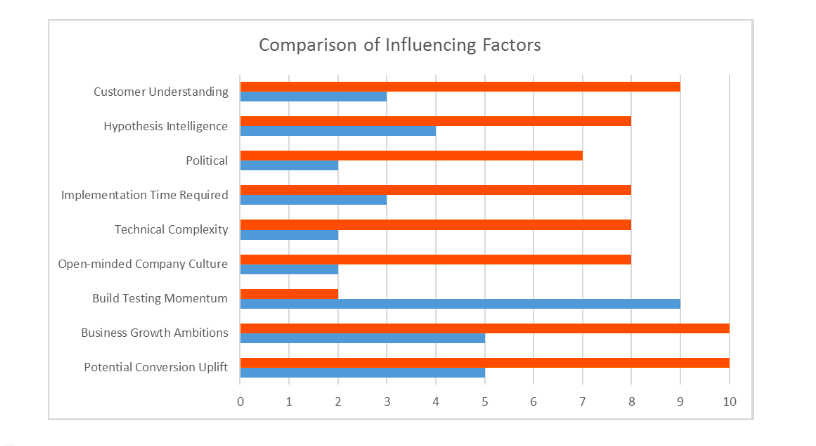

As you’ll see from the chart below (put together by PRWD), innovative testing (represented by orange) relies on a different set of influencing factors than iterative testing.

First, the resources required are greater. That includes factors like implementation time and technical complexity, but it also deals with politics and hypothesis intelligence (contingent on a good bit of research and customer understanding). The design and development costs of innovative testing also run higher.

You'll also note that the business growth ambitions and potential conversion uplift of innovative testing are much larger than those of iterative testing:

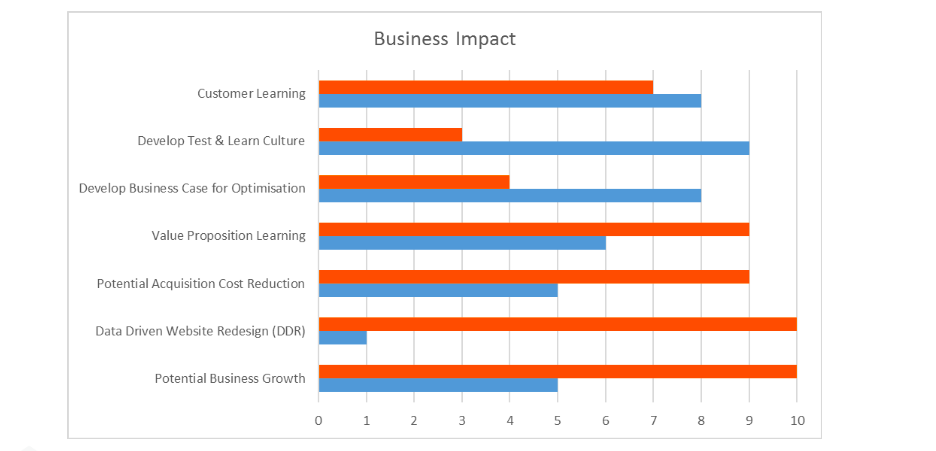

Innovative testing can lead to significant lifts. Once you've reached a local maximum, you can use it to shift user behavior dramatically, as well as quantify the major changes you've made.

It's also fantastic for evaluating your business proposition and learning about your value proposition, with the potential to reduce customer acquisition costs.

Specifically in the case of startups, Eric Ries is a proponent of innovative testing. Though you can learn a ton from intelligent, hypothesis-driven iterative testing, Ries believes you can learn more from more radical tests:

The right split-tests to run are ones that put big ideas to the test. For example, we could split-test what color to make the “Register Now” button. But how much do we learn from that? Let’s say that customers prefer one color over another? Then what? Instead, how about a test where we completely change the value proposition on the landing page?

Eric Ries

The caveat is that innovative testing requires a bold and open-minded organization. This also raises a difficult question: how do you generate the kind of bold hypotheses innovative testing requires?

Blending data and bold design decisions

Innovative testing requires bold ideas and daring decisions, and we can't rely exclusively on data to drive our innovative tests. A 52 Weeks of UX article summed up the balancing act well:

“In order to design through the local maximum we need a balance between the science-minded testing methodology and the intuitive sense designers use when making big changes. We need to intelligently alternate between innovation and optimization, as both are required to design great user experiences.”

An article on 90 Percent of Everything agrees, saying that the analysis that gets you out of a rut tends not to be data-driven, and that a creative leap is necessary to reach new heights.

UI Patterns also echoes this, saying that while optimization is done with a data-driven approach, innovation is done with intuition. Whatever the case, when the time comes to make bigger changes—when you decide to jump from your local maximum to another possibility—make the decision with conviction.

On the other hand, jumping blindly into innovative changes without relying on user understanding or previous data would be stupid. After all, if you’ve run enough iterative tests to hit a local maximum, you’ve surely learned some customer insights along the way.

Paul Rouke mentioned at Elite Camp 2015 that the 2 biggest influencers of innovative tests are:

- In-depth user understanding;

- Meaningful insights from prior tests.

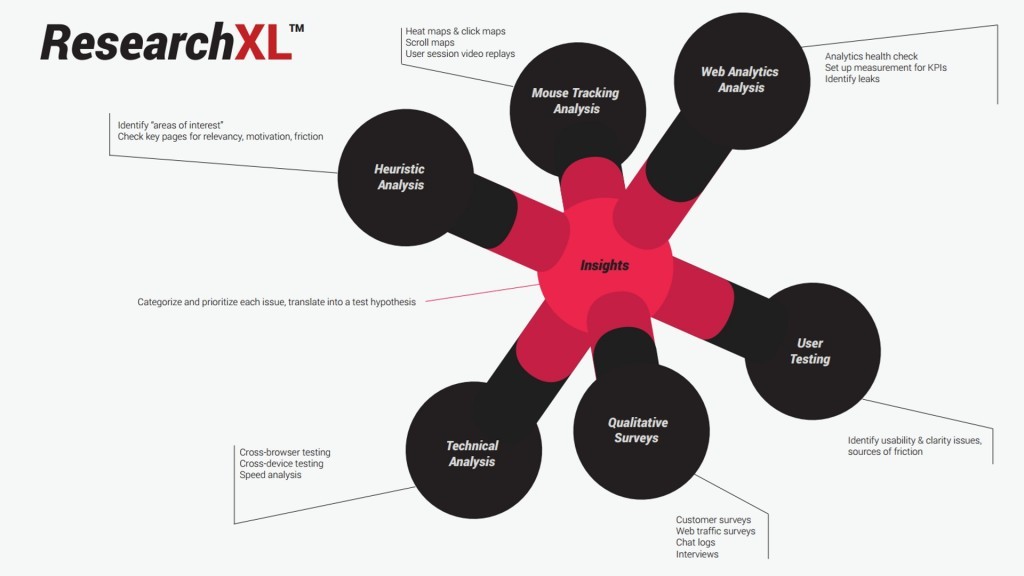

This means that you don't have to rely solely on a designer's intuition. In fact, in-depth user understanding comes from a variety of measures, qualitative and quantitative (check our article on the ResearchXL model), and your prior tests should be taken into account. Andrew Chen also explained that quantitative data has limitations when it comes to reaching a higher local maximum; qualitative data can sometimes give a clearer picture of the innovative tests needed to reach the next level.

All of that qualitative data may give you insights and ideas, but it's still up to you and your team to come up with creative innovative tests.

You end up doing lots of user interviews, conducting ethnographic studies, and other methodologies that generate lots of data, but it’s still up to you as the entrepreneur to figure it out. Not easy!

Andrew Chen

Now, I mentioned above there was another way to push past a local maximum: radical redesign.

What about radical redesign?

Radical redesign is essentially another type of innovative test. It’s a redesign of your entire site, and it can be quite risky.

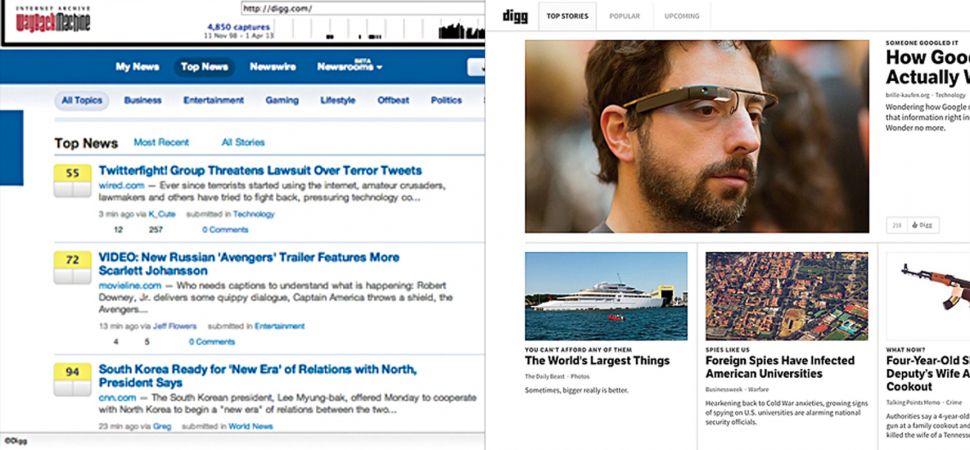

There are, of course, documented horror stories of radical redesigns, like Digg losing 26% of its traffic. Or when Marks & Spencer spent 2 years and £150m designing a new site, only to see its online sales plunge by 8.1% in the first quarter following the launch of its new website.

So it's something to be careful about. NNGroup offers a reasonable guide for when to choose radical redesign or incremental change. They, too, say that you should tread carefully, but they list a few specific scenarios in which a redesign would be appropriate:

- The gains from making incremental changes are minuscule or nonexistent (i.e., a local maximum).

- The technology is severely outdated, making critical changes impossible.

- Architecturally, the site is a tangled mess.

- Severely low conversion rates site-wide.

- Benchmarking research reveals your site is far inferior to the competition.

So if a site redesign is what you need, there are ways to do it correctly.

How to do radical redesign correctly

Since you’re changing everything at once, it’s inevitable that some things will get better and some things will get worse—you can’t be totally sure how it will work out. You could hit the jackpot, or you could spend a bunch of money and end up with lower conversion rates.

Don't be misled: in most cases, radical redesigns should be avoided. Not only are the odds against you, but redesigns can be a resource black hole. Design and development take much longer, and therefore cost much more.

Conduct full conversion research

Conducting full conversion research will tell you what's working well and what's not, and you can redesign from there. Keep the parts that are working (just polish them up and improve the look and feel). Completely rethink the things that suck (e.g., a product page with an add-to-cart rate below 2%).
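The "keep or rethink" split can be sketched as a simple triage over per-page metrics. This is a hypothetical example: the `PageStats` fields, the URLs, and the numbers are made up, and using the 2% add-to-cart rate from the example above as a hard cut-off for every page is an assumption, not a rule. In practice, the quantitative split is only a starting point for the "why" questions that follow.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    sessions: int
    add_to_cart: int

    @property
    def add_to_cart_rate(self) -> float:
        return self.add_to_cart / self.sessions


def triage(pages, rethink_below=0.02):
    """Split pages into 'polish' (working) and 'rethink' (add-to-cart rate below the threshold)."""
    polish, rethink = [], []
    for page in pages:
        if page.sessions == 0:
            continue  # no traffic, no evidence either way
        if page.add_to_cart_rate < rethink_below:
            rethink.append(page.url)
        else:
            polish.append(page.url)
    return polish, rethink


# Hypothetical analytics export; the URLs and numbers are made up for illustration.
pages = [
    PageStats("/product/a", sessions=12_000, add_to_cart=540),  # 4.5%
    PageStats("/product/b", sessions=8_000, add_to_cart=120),   # 1.5%
    PageStats("/product/c", sessions=3_000, add_to_cart=75),    # 2.5%
]
polish, rethink = triage(pages)
print("polish:", polish)    # polish: ['/product/a', '/product/c']
print("rethink:", rethink)  # rethink: ['/product/b']
```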

Also, figure out the ‘why.’ Try to answer these questions:

- How are people using the site?

- What are people trying to accomplish?

- What are their higher-level goals?

- What aren’t people doing that we want them to?

- What causes friction?

We’ve written a lot on the research process before, so I won’t repeat that here. But check out our ResearchXL framework if you’re interested in how we do it.

Radical redesigns require daring design decisions

Redesigns are not just about data. As mentioned above in reference to innovative tests, it's next to impossible to create new designs based solely on data. Redesigns bring changes that are far larger than any single design element you can effectively test, so committing to them requires a daring design decision.

Optimizers and designers have to work together to take a chance based on the data available plus their intuition: what they think will work, given what they know about the business and their customers.

Eric Ries hammered on that point in his article as well. The key, he says, is to get new designers comfortable with data-driven decisions and testing as soon as possible.

It’s a good deal: by testing to make sure (I often say “double check”) each design actually improves customers lives, startups can free designers to take much bigger risks. Want to try out a wacky, radical, highly simplified design? In a non-data-driven environment, this is usually impossible. There’s always that engineer in the back of the room with all the corner cases: “but how will customers find Feature X? What happens if we don’t explain in graphic detail how to use Feature Y?” Now these questions have an easy answer: we’ll measure and see.
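"We'll measure and see" usually means running the new design against the old one and checking whether the difference in conversion rate is bigger than chance would explain. The sketch below uses a pooled two-proportion z-test, one common textbook choice for a fixed-sample A/B test; the article doesn't prescribe a method, many testing tools use sequential or Bayesian approaches instead, and the visitor and conversion counts here are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variant (b); return relative lift, z, two-sided p-value."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate assuming no real difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b / p_a - 1, z, p_value


# Hypothetical result: 4.0% control vs 4.8% for the radical design, 10,000 visitors each.
lift, z, p = two_proportion_z_test(conv_a=400, n_a=10_000, conv_b=480, n_b=10_000)
print(f"lift = {lift:.1%}, z = {z:.2f}, p = {p:.4f}")
```

Note what this kind of test can and can't tell you about a radical change: it measures whether the whole new design beat the old one, not which of its many changes did the work.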

Conclusion

If you’ve hit a point of diminishing returns and can’t seem to move the needle no matter what, it’s not a given, but it’s possible you’ve hit a local maximum. In that case, you’ll need to get bold, make some daring decisions, and blend innovation with optimization to climb a taller hill.

As for when to choose radical redesign vs. innovative testing, it’s not always clear. It’s not a this vs. that thing as much as it is a calculated decision based on your specific circumstances. In general, radical redesigns are riskier and you should tread carefully.

No need to tread blindly, however—you can use past insights, qualitative data, and customer research to form hypotheses. Finally, just because you’ve taken some larger leaps with innovative testing or a radical redesign doesn’t mean that iterative testing is over. Blending innovation with optimization is best.

Alex, thanks for an excellent, thought provoking article. Thanks also for referencing some of PRWD’s slides and thoughts on this crucial subject for businesses who actually want to grow through optimisation.

Three additional insights from me on this:

> Almost all businesses stay in the safe zone and do simple, iterative testing the vast majority of the time (and a lot of these tests lack a reason why)

> Businesses who try to do bolder tests don’t support the hypothesis with a combination of rich user insights and prior testing insights

> Businesses who are exploring what we call “the full spectrum of testing”, but working up to it rather than diving straight in to big bold tests with weak hypotheses, are in the very elite of businesses out there, and they have the biggest growth potential

The examples you shared are from one of our clients Sliderobes – the result of exploring the full spectrum of testing with them? Primary conversion metric (brochure requests) increased by 108%, and even more significantly based on their huge PPC and ad spend each month, acquisition costs decreased by 48%.

We have a 3 minutes video case study here – http://bit.ly/sliderobes

Hopefully Alex your article encourages more businesses to spread their wings and exploit all the opportunities that testing creates!

Thank you and PRWD for providing great resources on getting over local maxima! Case study was fantastic.