User experience is a nebulous term. What distinguishes a “good” UX from a “bad” one, and what do the gradations between the two poles look like?

The challenge comes in testing and measuring UX. Can you do it in a rigorous way, and are there tools that can help you?

Yes, you can, and yes, there are. That’s what this article is about: measuring and testing UX. If you’re an experienced UX or conversion optimization practitioner, this might be basic to you, but it will be a good overview for those new to the field.


What is UX testing?

User experience testing is the process of evaluating the usability and functionality of a website or app, along with every element that affects the user experience.

UX testing involves the aggregate and subjective experience of using a product such as a website or app. It’s more than just ensuring good visual design or strong usability metrics.

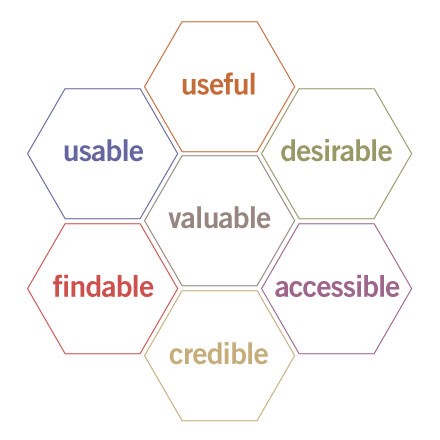

In fact, Peter Morville developed the user experience honeycomb to describe all the facets of UX:

Here’s what it all means:

- Useful. Is your product or website useful in any way? The more useful, the better the experience.

- Usable. Ease of use. If it’s too complicated or confusing to use, you’ve already lost. Usability is necessary (but not sufficient).

- Desirable. Our quest for efficiency must be tempered by an appreciation for the power and value of image, identity, brand, and other elements of emotional design.

- Findable. We must strive to design navigable websites and locatable objects, so users can find what they need.

- Accessible. Just as our buildings have elevators and ramps, our websites should be accessible to people with disabilities (close to 20% of the population). Today, it’s good business and the ethical thing to do. Eventually, it will become the law.

- Credible. Websites need to be credible, so you need to know which design elements influence whether users trust and believe what we tell them.

- Valuable. Our sites must deliver value to our end-users. For non-profits, the user experience must advance the mission. With for-profits, it must contribute to the bottom line and improve customer satisfaction.

There are user experience testing methods designed to address each of these facets.

11 methods to conduct user experience (UX) testing

Here’s a short list of common user experience testing methods and their characteristics. All of these fall broadly under the term “user testing,” though that term has taken on a more specific connotation as remote user testing software has proliferated.

Moderated usability testing

User testing is basically testing a product or experience with real users. Users are traditionally given a series of tasks to complete and measured on how effectively they complete them.

There are two main types:

- Moderated;

- Unmoderated.

Moderated user testing is when the user is directly observed by a researcher in real time. The session can be in person or remote, but either way the researcher follows the user as they go through the tasks.

In a moderated user test, you can guide users through tasks, answer questions, and hear their feedback in real time. A moderated user test has the benefit of increased flexibility and connection, but it has the downside of possibly being influenced by bias.

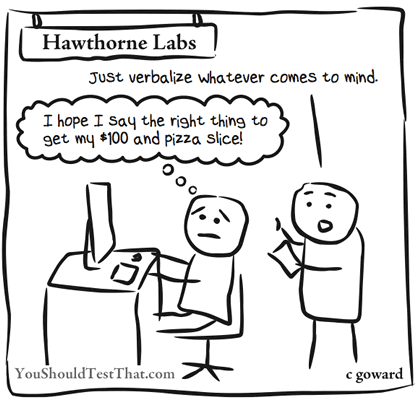

One main source of bias here is the Hawthorne Effect.

Chris Goward of WiderFunnel describes how the Hawthorne Effect can affect user testing:

Chris Goward:

“The act of observing a thing changes that thing. When people know they’re being observed, they may be more motivated to complete the action.

For example, knowing that you’re looking for usability problems in a user testing scenario, they may be more motivated to find problems whether or not they’re actually important.”

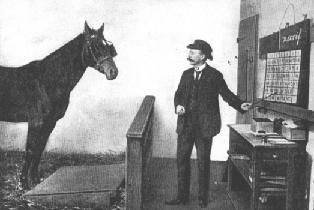

And if you’ve read our article on bias in qualitative research, you know about Clever Hans and the Observer-Expectancy Effect. Basically, this is when a researcher’s cognitive bias causes them to subconsciously influence the participants of an experiment.

In any case, you have to tread carefully here, or you may skew your results by accident.

Unmoderated user testing

Unmoderated user testing is very similar to the moderated kind, except that you give users a predetermined list of tasks and let them complete it on their own time, without a researcher observing live.

The pros of this format are many: it’s cheap, it’s scalable, and with no moderator present, there’s little opportunity for you to bias the results.

But there are some cons as well.

Unless you are very careful about recruiting user testing candidates, you can end up with low-quality user tests that lead you to believe incorrect things about your user experience. The default testers on common platforms are also used to getting paid for this and go through many user tests, so they aren’t representative of your typical traffic.

Quick tips for user testing

Therefore, when doing unmoderated user testing through sites like UserTesting or TryMyUI, you need to keep a few things in mind:

- In most cases, you want to include three types of tasks in your test protocol:

  - A specific task;

  - A broad task;

  - Funnel completion.

- Your testers should be people from your target audience.

- It should be the very first time they’re using your site.

- In most cases, five to 10 test users is enough.

- Usually, what they do is more important than what they say.
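One common way to reason about the “five to 10 users” guideline is the problem-discovery model popularized by Jakob Nielsen and Tom Landauer: if each tester independently uncovers a given problem with probability p, then n testers are expected to uncover a share of 1 − (1 − p)^n of the problems. The sketch below assumes p = 0.31, the average rate Nielsen reported; that figure is an assumption here, and your own product’s rate may be quite different.

```python
def share_of_problems_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found by n_users testers.

    Uses the 1 - (1 - p)^n discovery model. p_detect = 0.31 is an assumed
    per-tester detection rate (Nielsen's published average); it treats all
    problems as equally likely to be found, which real products rarely are.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be a probability")
    return 1.0 - (1.0 - p_detect) ** n_users


for n in (1, 3, 5, 10, 15):
    print(f"{n:>2} users -> {share_of_problems_found(n):.0%} of problems")
```

With the assumed rate, five users surface roughly 84% of problems and ten users roughly 98%, which is why returns diminish quickly beyond that range.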

You should conduct user testing before you roll out any major change (run the tests on your staging server), or at least once a year.

Five-second tests

This is really another branch of user testing, but it’s unique enough that I consider it a category of its own.

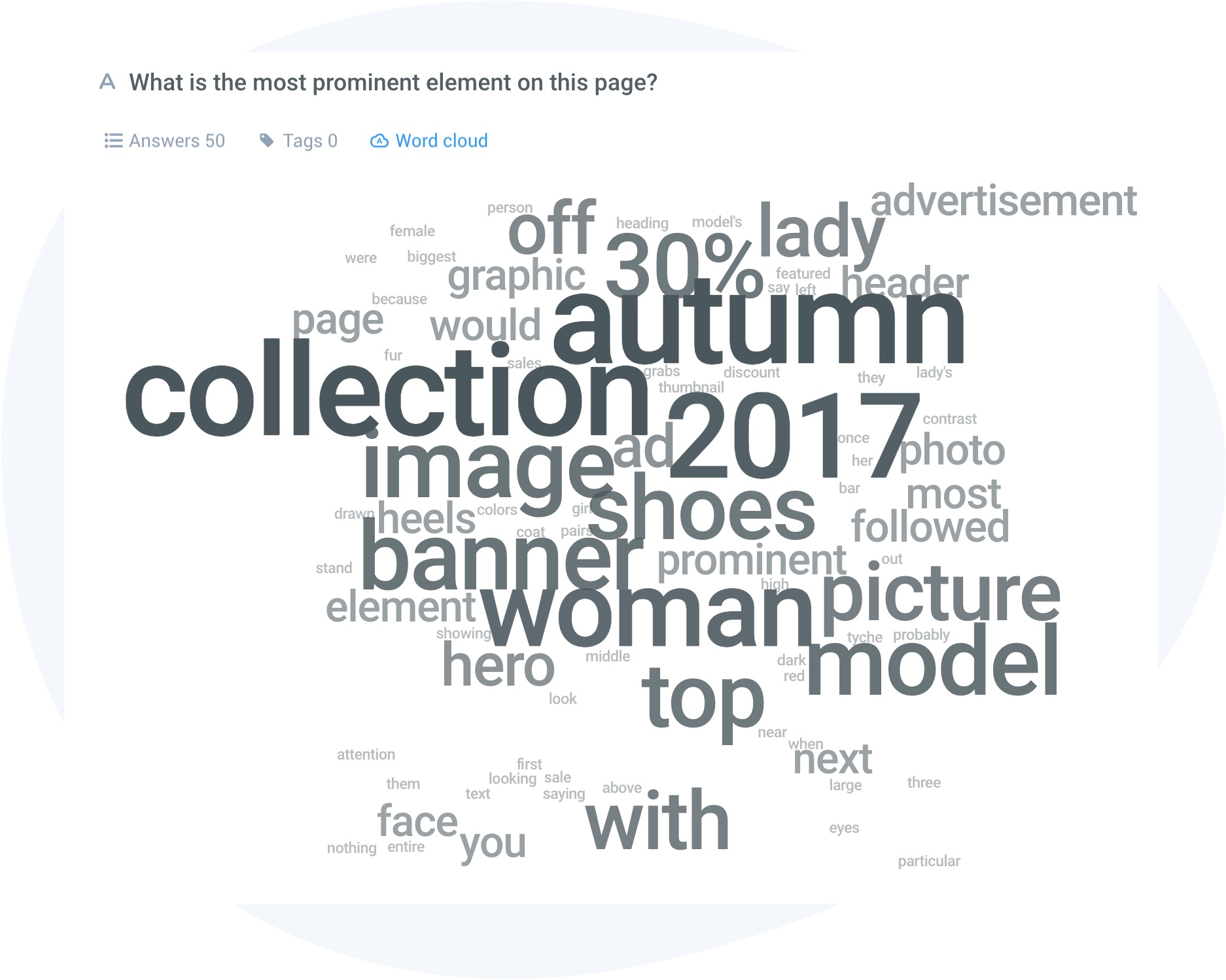

It was popularized by UsabilityHub, and the concept is simple: optimize the clarity of your designs by measuring people’s first impressions.

All you do is upload a design (homepage designs, landing pages, logos, brochures, and marketing material) and show it to people for five seconds. When the time is up, they’re asked questions about what they remembered.

It’s a great test for clarity, and you get a nice word cloud to show your design team or boss.

If there is a large mismatch between your intent and your results, you likely have a clarity problem.
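A word cloud is essentially a word-frequency count, so you can run the same “intent vs. results” check on raw answers yourself. The sketch below is a hypothetical illustration, not how UsabilityHub builds its clouds: the stop-word list, the sample answers, and the `intent_coverage` helper are all assumptions, and it does no stemming, so “project” and “projects” count separately.

```python
import re
from collections import Counter

# Hypothetical stop-word list; extend it for your own responses.
STOP_WORDS = {"a", "an", "and", "the", "of", "for", "to", "it", "is", "i", "was", "some", "about"}


def word_frequencies(responses: list[str]) -> Counter:
    """Count content words across free-text five-second-test answers."""
    counts: Counter = Counter()
    for answer in responses:
        words = re.findall(r"[a-z']+", answer.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts


def intent_coverage(counts: Counter, intended: set[str]) -> float:
    """Share of the words you intended to convey that at least one tester used."""
    if not intended:
        return 1.0
    mentioned = {word for word in intended if counts[word] > 0}
    return len(mentioned) / len(intended)


answers = [
    "Some kind of project management tool",
    "A tool for managing projects and teams",
    "Not sure, maybe a calendar app",
]
counts = word_frequencies(answers)
print(counts.most_common(5))
print(intent_coverage(counts, {"project", "teams", "pricing"}))
```

A low coverage score, or top words that have nothing to do with your intended message, is the “large mismatch” that points to a clarity problem.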

Card sorting

Card sorting is a UX research strategy popular for information architecture. In a card sorting session, “participants organize topics into categories that make sense to them and they may also help you label these groups.”

You can do this in person with actual cards or pieces of paper or, more commonly, with software.

This technique relates specifically to how you organize and label a website for navigation. It lets you see how users think about your site and the optimal way to sort it for better UX.
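A common way to analyze open card sorts is a co-occurrence (similarity) matrix: for each pair of cards, the share of participants who placed them in the same group. The sketch below assumes each participant’s result is a simple mapping from their own group label to the cards in it; the card names and sorts are made up for illustration, and card sorting tools may compute and export this differently.

```python
from collections import defaultdict
from itertools import combinations

# One participant's sort: their own group label -> cards placed in that group.
# Labels are free-form, so only the grouping (not the label text) is compared.
Sort = dict[str, list[str]]


def co_occurrence(sorts: list[Sort]) -> dict[tuple[str, str], float]:
    """Share of participants who put each pair of cards in the same group."""
    together: dict[tuple[str, str], int] = defaultdict(int)
    for sort in sorts:
        for cards in sort.values():
            # Sorting makes (a, b) and (b, a) the same key.
            for a, b in combinations(sorted(set(cards)), 2):
                together[(a, b)] += 1
    n = len(sorts)
    return {pair: count / n for pair, count in together.items()}


sorts = [
    {"Account": ["Login", "Billing"], "Help": ["FAQ", "Contact"]},
    {"My stuff": ["Login", "Billing", "Contact"], "Support": ["FAQ"]},
    {"Settings": ["Login"], "Money": ["Billing"], "Help": ["FAQ", "Contact"]},
]
matrix = co_occurrence(sorts)
for pair, share in sorted(matrix.items(), key=lambda kv: -kv[1]):
    print(pair, f"{share:.0%}")
```

Pairs with high shares are candidates to sit under the same navigation item; pairs that never appear were never grouped together by anyone.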

Session replays

We wrote a whole article on the proper way to analyze session replays.

A session replay is essentially the ability to replay a visitor’s journey on a website or within a web application. You get to watch how anonymous visitors interact with your interface, which has a big benefit compared to user testing: users don’t know you’re testing so they act normally.

They’re a super popular type of user experience test for a few reasons. The technology for collecting this data keeps getting better, now allowing for auto-flagging of user frustration signals and segmenting based on events, traffic sources, goals, and so on.
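To give a feel for what “auto-flagging of user frustration signals” can mean, here is a minimal rage-click detector: it groups consecutive clicks that land close together in space and time and flags groups of three or more. Each session replay tool defines rage clicks its own way; the `Click` record and the thresholds (3 clicks, 1,000 ms, 30 px) are hypothetical choices for this sketch, not any vendor’s algorithm.

```python
from dataclasses import dataclass


@dataclass
class Click:
    t_ms: int  # timestamp in milliseconds
    x: int     # page coordinates in pixels
    y: int


def find_rage_clicks(clicks: list[Click], min_clicks: int = 3,
                     window_ms: int = 1000, radius_px: int = 30) -> list[list[Click]]:
    """Flag runs of consecutive clicks that stay near the run's first click.

    A run ends when a click falls outside window_ms or radius_px of the run's
    first click; runs with at least min_clicks clicks are reported.
    """
    bursts: list[list[Click]] = []
    run: list[Click] = []
    for click in sorted(clicks, key=lambda c: c.t_ms):
        if run and (click.t_ms - run[0].t_ms > window_ms
                    or abs(click.x - run[0].x) > radius_px
                    or abs(click.y - run[0].y) > radius_px):
            if len(run) >= min_clicks:
                bursts.append(run)
            run = []
        run.append(click)
    if len(run) >= min_clicks:
        bursts.append(run)
    return bursts


session = [Click(0, 100, 100), Click(200, 105, 98), Click(450, 102, 101),
           Click(3000, 400, 50), Click(5000, 400, 52)]
bursts = find_rage_clicks(session)
print(f"{len(bursts)} rage-click burst(s) found")
```

A flag like this only tells you where to look; you still have to watch the replay to learn why the user was frustrated.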

They’re also remarkably popular because, like digital analytics, they’re a form of passive data. It’s collected without any effort on your part, and all you need to do is analyze it for insights. Just add a JavaScript snippet to your site, and you’re on your way.

But there are still many problems with session replays: they’re time-consuming, easily influenced by bias in analysis, and the insights can be ambiguous.

Session replays can be incredibly illuminating, but they shouldn’t be relied upon as your sole source of data. Make sure you A/B test any insights you think you’ve gained from them.

Voice of customer

Voice of customer (VOC) refers to the process of discovering the wants and needs of customers through qualitative and quantitative research. It’s essentially the in-depth process of capturing a customer’s expectations, preferences and aversions—in their own words.

A paper by Griffin and Hauser in Marketing Science (1993) defined four aspects to take into consideration when conducting voice of customer research:

- Customer needs described in the customer’s own words;

- Hierarchical grouping of needs;

- Prioritization of needs;

- Segmentation of needs and perceived benefits by audience.

Voice of customer research can be an incredibly powerful vehicle for copywriting insights, in addition to general user motivation and user experience insights.

There are many ways you can collect this data, from customer surveys to on-site surveys, but it’s most important to make the data actionable. Some of my favorite tools for collecting this data are Hotjar, Qualaroo, and Usabilla, in addition to the myriad of custom survey tools like SurveyMonkey and Typeform.

Attitudinal surveys

A common way to find insights on user experience: ask your customers.

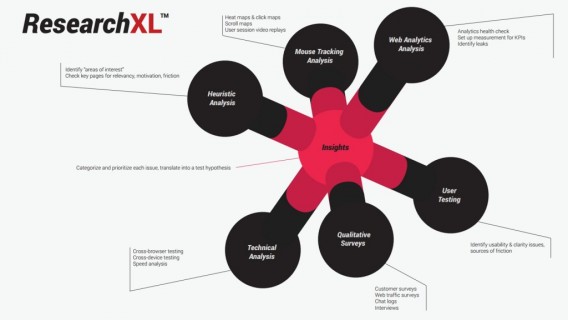

Customer surveys are an integral part of the ResearchXL conversion research process.

There’s been so much written on surveys, from survey response scales to NPS and more. I won’t do a deep dive here, but there’s a lot to be gained if you do it right (and there are some pretty big ways to do it wrong).

Measuring satisfaction

Within a user test or survey, there are a few ways you can measure discrete instances of satisfaction or frustration. I wrote an article about that a while ago. Here are 8 measurement tools I outlined:

- After Scenario Questionnaire (ASQ);

- NASA-TLX;

- Subjective Mental Effort Questionnaire (SMEQ);

- Usability Magnitude Estimation (UME);

- Single Ease Question (SEQ);

- System Usability Scale (SUS);

- SUPR-Q;

- Net Promoter Score (NPS).

These get a lot more specific and quantitative. Despite sometimes small sample sizes, you can calculate confidence intervals, and with some of them, compare your metrics to a database of other companies and competitors.
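As an example of how quantitative these get, the sketch below scores two of the listed instruments and computes a confidence interval. SUS uses its standard scoring (odd items contribute response − 1, even items 5 − response, and the 0–40 sum is multiplied by 2.5); NPS is the share of promoters (9–10) minus the share of detractors (0–6). Because Python’s standard library has no t-distribution, the caller supplies the t critical value; the participant data is invented for illustration.

```python
import math
import statistics


def sus_score(responses: list[int]) -> float:
    """Score one System Usability Scale questionnaire (10 items, each 1-5)."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly 10 responses on a 1-5 scale")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


def nps(ratings: list[int]) -> float:
    """Net Promoter Score from 0-10 ratings: % promoters minus % detractors."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def mean_ci(values: list[float], t_crit: float) -> tuple[float, float]:
    """Confidence interval for a mean, given the t critical value for n - 1 df."""
    m = statistics.mean(values)
    half_width = t_crit * statistics.stdev(values) / math.sqrt(len(values))
    return m - half_width, m + half_width


participants = [
    [4, 2, 5, 1, 4, 2, 5, 1, 4, 2],
    [3, 3, 4, 2, 3, 3, 4, 2, 3, 3],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [2, 4, 3, 3, 2, 4, 3, 3, 2, 4],
]
scores = [sus_score(p) for p in participants]
# 2.776 is the two-sided 95% t critical value for 4 degrees of freedom (n = 5).
low, high = mean_ci(scores, t_crit=2.776)
print(f"SUS mean {statistics.mean(scores):.1f}, 95% CI {low:.1f} to {high:.1f}")
```

With only five participants the interval is very wide, which is exactly the information a single average hides.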

This is pretty complicated stuff if you’re just starting out in UX research and UX testing. If you’re a beginner, do some user tests and analyze session replay videos. If you’re ready for more, read the rest of my article on these deeper dives.

Eye tracking

Eye tracking studies are another way to see how users interact with your site. You can do eye tracking as part of a remote or an in-person user test.

Basically, you’ll have users interact with a site as they normally would, or complete a series of tasks, and eye tracking software shows you where they focused most and the order in which their gaze moved across the page.
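Two of the most common summaries from an eye tracking session are total dwell time per area of interest (AOI) and the order in which AOIs were first looked at. The sketch below assumes your eye tracking software can export fixations already mapped to named AOIs; the `Fixation` record and the AOI names are hypothetical, and real exports vary by vendor.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: int     # when the fixation began
    duration_ms: int  # how long the gaze rested there
    aoi: str          # area of interest it landed in, e.g. "headline"


def summarize_gaze(fixations: list[Fixation]) -> tuple[dict[str, int], list[str]]:
    """Total dwell time per AOI and the order in which AOIs were first seen."""
    dwell: dict[str, int] = {}
    first_seen: list[str] = []
    for fx in sorted(fixations, key=lambda fx: fx.start_ms):
        if fx.aoi not in dwell:
            first_seen.append(fx.aoi)
            dwell[fx.aoi] = 0
        dwell[fx.aoi] += fx.duration_ms
    return dwell, first_seen


fixations = [Fixation(0, 250, "logo"), Fixation(300, 400, "headline"),
             Fixation(750, 200, "cta"), Fixation(1000, 350, "headline")]
dwell, order = summarize_gaze(fixations)
print(dwell, order)
```

If your call to action shows up late in the first-seen order, or gets little dwell time, that is a concrete hypothesis to carry into a test.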

You can use eye tracking on prototypes or early versions of a UI to catch risky mistakes early and lower the cost of development. You can also use it in conversion research to develop test ideas and hypotheses.

Overall, integrating eye tracking and visual engagement research into your optimization process gives you an edge that most people aren’t even considering yet.

Read more on eye tracking in this article.

Biometric and advanced emotion tracking

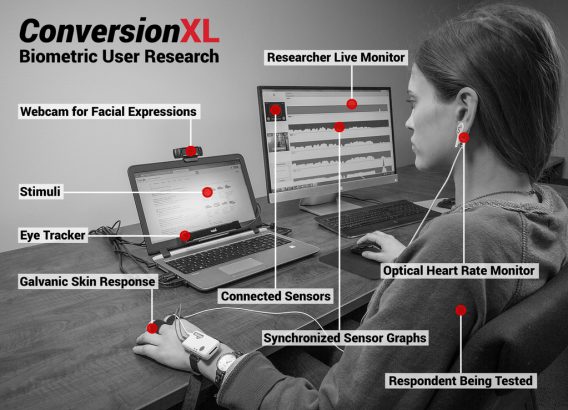

Biometric user research shows you how users feel, not just how they say they feel.

It’s a bit complicated, but basically this type of research provides insights into the effectiveness of a site’s architecture, organization, motivating factors, and content.

Biometrics refers to the statistical analysis of biological data such as heart rate, facial expressions, and pupil dilation. Measuring these gives you context on your visitors’ emotional reactions to specific experiences.

It’s not for beginners, but biometrics research can be very valuable, especially for costly endeavors like new sites or advertisements.

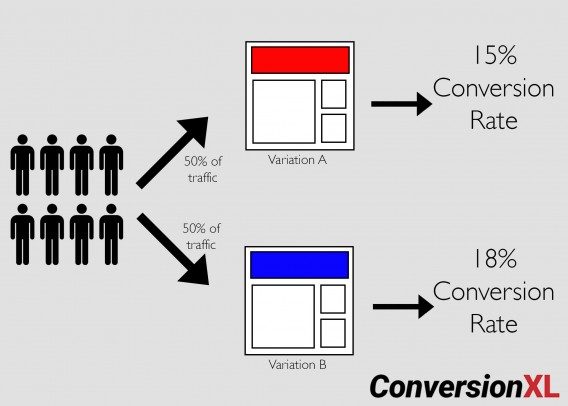

A/B testing

You’re usually not optimizing for something like “user experience” when you run an A/B test, but a controlled experiment (another term for an A/B test) is really the only way to tell that the changes you made are making a positive difference in the metric that matters.

You might improve user satisfaction metrics with a set of changes, but revenue per visitor goes down. Why? Who knows.

All of the above user experience testing and measurement methods are valid and valuable. But if you have adequate traffic, you should prioritize controlled experiments for decision making. They give you a clearer picture of the effects at the macro-metric level, not just at a qualitative or micro-metric level.
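To make “controlled experiment” concrete, here is the textbook two-proportion z-test for comparing the conversion rate of a control (A) and a variant (B). It is a sketch, not the method any particular testing tool uses: it relies on a normal approximation that assumes large samples, it only fits yes/no conversions (not a continuous metric like revenue per visitor), and it does not cover sample-size planning or the risks of stopping a test early. The visitor and conversion counts are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test comparing the conversion rates of control A and variant B.

    Returns (absolute difference in conversion rate, B minus A; p-value).
    Uses the pooled-variance normal approximation.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in conversions; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value


lift, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=250, n_b=10_000)
print(f"lift = {lift:.2%}, p = {p:.3f}")
```

Here a move from a 2.0% to a 2.5% conversion rate on 10,000 visitors per arm comes out at roughly p = 0.02; the same lift on a few hundred visitors would be indistinguishable from noise, which is why adequate traffic matters.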

If you’re new to A/B testing, read this comprehensive guide.

I like how CXL founder Peep Laja summed it up:

Peep Laja

“How do you measure the effectiveness of user experience? It’s simple.

While some of the designers might disagree with me, I say it’s all about achieving the business objective. The user experience is a means to an end—to achieve a goal.

The simplest and most objective measure is the conversion rate—whether changes in the experience design will boost or reduce conversions. And the action of conversion might be whatever (purchase, signup, quote request, repeat logins, and so on).”

How does this factor into conversion optimization?

Better UX equals greater growth and conversion rates (usually).

Basically, a great user experience is a means to an end. You don’t create awesome user experiences just to make somebody happy. You want it to lead to something—be it sticking around on your social networking site, buying jeans on your ecommerce site, or getting the highest value out of your SaaS tool.

Whenever users land on your website, they’re having an experience. The quality of their experience has a significant impact on their opinion (‘do I like it?’), referral possibility (‘do I tweet or talk about it?’), and ultimately, conversions—will people do what we want them to do?

User experience testing is a good way to mitigate risks before investing tons of resources into designs or advertisements, or any sort of experiential design that you’re proposing. It’s usually cheaper and quicker than running experiments, and it is the perfect mechanism to create better test hypotheses (which leads to greater conversion rates, revenue, and so on).

Conclusion

A better user experience (usually) leads to greater conversions and revenue. So measuring and testing UX is imperative, especially at a certain point of maturity in your product design and marketing.

If nothing else, user experience testing can be a stepping stone to a solid experimentation program as you ramp up the traffic and transaction numbers to make that possible.

It’s a solid correlative micro-metric that usually leads to greater product growth. And at scale, it’s almost certain you’ll be doing some sort of UX testing.

This was an introductory guide, and I hope it put you on the right path to implementing some of it at your company.
