Session replays are a common conversion research technique. And they can provide a lot of value.

Still, the process is amorphous. I haven’t seen a structured way to approach session recordings other than just sitting down to watch a bunch of them, inferring your qualitative findings, and somehow lumping them into the rest of your research stack.

But what if there were a better way?

I talked to a few conversion experts about their process for session replays and also consulted our own team’s approach. What follows may give you an idea to make your process a bit more rigorous.

What is session replay?

Session replay is the recording or reconstruction of one visitor’s journey within your site, showing their actual interactions and experience with your interface.

In session replay videos, you get to watch users go through the interface anonymously, and can therefore glean insight that may not be available from a more conscious user test or any attitudinal metrics.

Why is it important to record what your user does?

Unlike moderated user tests, session replays let you see what your users do naturally. You can’t ask them what they want; you can’t tell them what to do.

Self-reported feedback can be inaccurate. Canvas Flip described one such case in a Medium post:

“My team and I recently conducted a study. The participants told us that they used a feature on a “regular” basis. But when I looked at the analytics, I witnessed a different story: they only used the feature maybe every 3–4 months.

Therefore, you have to watch them use a product and notice what they use and what they do not.”

You can run through a website with a heuristic analysis, you can measure and analyze all of the behavioral data quantitatively, you can run user tests to gain more insight – but none of those allow you to see how users actually act, at least in the completely straightforward way that a session replay video allows.

In addition, the barrier to entry with session replays is pretty low. You don’t need an advanced knowledge of the Google Analytics interface, pivot tables, cluster analysis, or anything technical to get insight from these; all you need is an eye for detail, some focus, and probably some empathy.

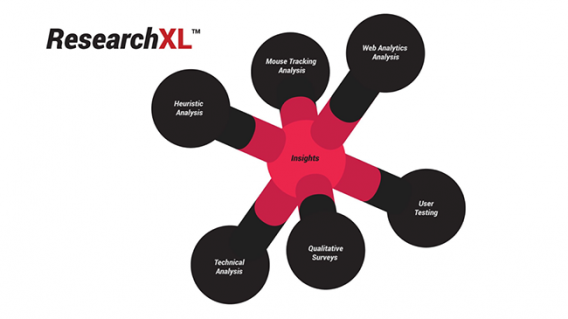

Session replay videos fit squarely into the mouse tracking analysis prong of the ResearchXL model. They’re one part of a complete conversion research audit:

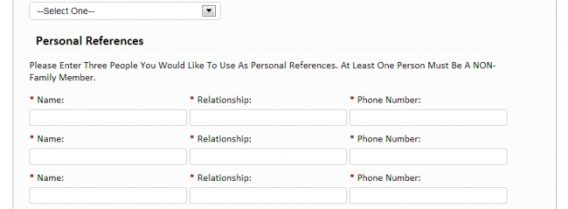

For example, one of our past clients had an online resume building service.

The process consisted of 4 steps, and there was a huge drop-off in the first step. We watched videos to understand how people were filling out the form. We noticed the first step had too many form fields, and we saw that out of all the people who started filling out the form, the majority stopped at this question:

Personal references – the form asked for 3, but most people had none. So they abandoned the process. Solution: get rid of the references part!

Of course, the very aspects of session replays that are so cool make for some drawbacks as well…

The Problems with Session Replay Videos

The problems with session replays don’t have anything to do with the concept; watching users interact with your site is awesome and can breed some profound Aha moments in interaction design.

The problems lie in the analysis of these videos, and they generally fall into three categories:

- Quantity. There are just too many videos and not all of them have anything important.

- Inferring intent – the reasons people do things – is inherently subjective and there’s no way to calibrate accuracy.

- It’s hard to report insights in any organized way, which is really a corollary of the above point. Because of the inherent subjectivity, reporting tends to vary wildly.

The first one, quantity, can be tackled a few ways. One solution is to sample. You don’t need to view them all. Divide up a few hundred videos per person on your team and analyze them.
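The sampling step is easy to make repeatable. Here is a minimal sketch, assuming you can export a list of recording IDs from your replay tool; the IDs, the reviewer names, and the `assign_sample` helper are all hypothetical and only there for illustration.

```python
import random

def assign_sample(recording_ids, reviewers, per_reviewer, seed=0):
    """Draw a random sample of recordings and split it evenly across reviewers."""
    rng = random.Random(seed)  # fixed seed so the same assignment can be reproduced
    wanted = min(len(recording_ids), per_reviewer * len(reviewers))
    sample = rng.sample(list(recording_ids), wanted)
    # Deal the sample out round-robin so no recording lands with two reviewers.
    return {name: sample[i::len(reviewers)] for i, name in enumerate(reviewers)}

# Hypothetical data: 5,000 exported recording IDs and a three-person team.
recordings = [f"rec-{n:04d}" for n in range(5000)]
assignments = assign_sample(recordings, ["Ana", "Ben", "Chloe"], per_reviewer=200)

for name, batch in assignments.items():
    print(name, len(batch))
```

Sampling at random, rather than watching whatever the tool lists first, keeps the batch from skewing toward the most recent or longest sessions.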

Second, bring in the team. One person going through hundreds of videos sounds like a nightmare. Teamwork. (Though for various reasons, doing this can bring in additional problems, which we’ll talk about later).

Third, technologies are getting better at surfacing meaningful insight without hours spent manually sifting through videos. Some aim to automatically analyze trends in user behavior and make reporting that much easier. TryMyUI released the beta version of Stream, which auto-flags signs of user frustration.

The tech is being built to render the quantity factor a non-issue.

The second and third problems are more management problems, and thus less likely to be solved by AI or any type of technology fix.

Just imagine you’ve got a client and you’re doing CRO work for them. Or you’re part of an internal team looking to diagnose some common issues and run tests to fix them.

You choose to record and view session replays, a wise choice indeed.

And you do find some fun insights! So do your 3-4 other teammates working on conversion research. You bring them to the table in the same way you wrote them down on your Notes app:

- Users looked like they were frustrated with the form. Especially when we asked for their phone number.

- People were confused when they got to the New Products page.

- So many people hit the back button after they saw our sign up form, definitely a problem.

…you get it.

Great insights, but in addition to analytics data and other forms of customer insight, how do you incorporate this into a prioritization model? How do you build this into a hypothesis? “I believe if we do [x] to [y], we’ll see an increase in conversions because…Kevin said he learned about the problem by watching videos of it?”

It doesn’t work out well if you’re trying to be rigorous. One instance of a problem viewed via session replays shouldn’t equal or outweigh 10,000 instances of a problem.

People See the Same Things Differently

If you have more than one person analyzing session replay videos, ideally they would not do it in the same room at the same time.

The following quote comes from Leonard Mlodinow’s excellent book, The Drunkard’s Walk. It’s not about session replays in particular but may as well be…

Leonard Mlodinow:

“In the wine tastings I’ve attended over the years, I’ve noticed if the bearded fellow to my left mutters ‘a great nose’ (the wine smells good), others certainly might chime in their agreement.

But if you make your notes independently and without discussion, you often find that the bearded fellow wrote, ‘great nose’; the guy with the shaved head scribbled, ‘no nose’; and the blond woman with the perm wrote, ‘interesting nose with hints of parsley and freshly tanned leather.”

When you have a strong voice in the room, the rest tend to follow that voice, and you get much less variance in your data, but much less honesty in your insights.

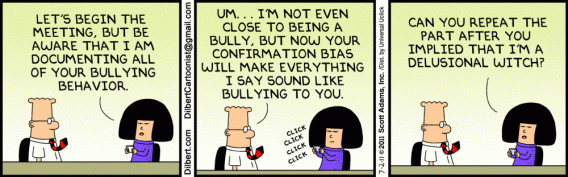

Look Out for Confirmation Bias

Confirmation bias is another problem, but this one is almost impossible to combat completely.

As Mlodinow wrote in The Drunkard’s Walk, “moviegoers will report liking a movie more when they hear beforehand how good it is.”

So if you have any preconceived ideas about your website or visitors, they’re probably leaking into your session replay analysis.

In fact, it’s one of the main problems with qualitative research like session replays or user testing: if you think that there is a certain usability issue, you’re more likely to notice it in the session replay videos.

The best defense for this is just to know that it exists and it affects you. Try to mitigate it by keeping an open mind and looking for new insights, not just those that confirm your worldview.

So session replays: They’re awesome for conversion research because you can see exactly what users do. But is there any strategic way to go about analyzing them that doesn’t introduce a bit of subjectivity?

So how can one mitigate that and even quantify insights gained from watching these? Do you put one person on the job or a committee (viewing separately of course)? Flag specific indicators and quantify via spreadsheet?

Here are some possible answers…

How to Improve Your Approach to Session Replay Videos

Standardize Your Process

The first key is to have a process that can be repeated and optimized. If you’re just randomly looking at session replay videos for vague indicators of interest or usability problems, and a different person does it each time, you’ve got a process problem.

David Mannheim, Head of Optimisation at User Conversion, put it like this:

David Mannheim:

“Screen recordings are notoriously difficult to analyze because they rely so much on individual interpretation. How do you standardize that? Can you standardize that? For us, it’s about having an approach that assists that interpretation to get the most out of the screen recordings.

We start by noting down what we call ‘raw’ findings into a spreadsheet.

These could be anything – from a user’s mouse moving out of the screen in the top left to exit but remaining on the screen to reading product description copy with their mouse. Each finding has an attribute of result, template, priority, etc. meaning that when we have 50 or so findings we can easily filter and cross-pollinate to find patterns of behavior. Hey presto!”

When you conduct heuristic analysis, you’re not just vaguely approaching it with what might be your opinions. You generally operate within a framework where you’re looking for things that fit into a few key buckets – friction, clarity, distraction, etc. – and therefore the process itself is clearer.

Come up with something similar for session replay videos. Every session replay software I’ve used has a tagging system, or you could of course add a column in the spreadsheet you’re working in.

This allows you to focus on a few key areas, and it lets you quantify how often each issue occurs so you can prioritize in a meaningful way.
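To make the tagging idea concrete, here is a rough sketch of what the "raw findings" spreadsheet might look like once it is loaded into code. The column names (`recording`, `template`, `tag`, `note`), the sample rows, and the `recordings_per_issue` helper are assumptions for illustration, not the format any particular team or tool uses.

```python
from collections import defaultdict

# Hypothetical raw findings: one row per observation, as in a spreadsheet export.
findings = [
    {"recording": "rec-0012", "template": "signup", "tag": "friction", "note": "hesitated at phone number field"},
    {"recording": "rec-0012", "template": "signup", "tag": "friction", "note": "retyped phone number twice"},
    {"recording": "rec-0047", "template": "signup", "tag": "friction", "note": "abandoned at phone number field"},
    {"recording": "rec-0047", "template": "product", "tag": "clarity", "note": "read description with mouse, scrolled back up"},
    {"recording": "rec-0101", "template": "signup", "tag": "distraction", "note": "clicked footer link mid-form"},
    {"recording": "rec-0130", "template": "signup", "tag": "friction", "note": "hit back button after seeing form"},
    {"recording": "rec-0130", "template": "product", "tag": "clarity", "note": "hovered over price for a long time"},
]

def recordings_per_issue(rows):
    """Count distinct recordings per (template, tag), most common first."""
    seen = defaultdict(set)
    for row in rows:
        # A set means repeated notes on one recording count only once.
        seen[(row["template"], row["tag"])].add(row["recording"])
    counts = [(key, len(ids)) for key, ids in seen.items()]
    return sorted(counts, key=lambda item: (-item[1], item[0]))

ranked = recordings_per_issue(findings)
for (template, tag), n in ranked:
    print(f"{template:8} {tag:12} seen in {n} recordings")
```

Counting distinct recordings instead of raw notes is a deliberate choice here: one very talkative reviewer, or one very messy session, shouldn’t make an issue look more common than it is.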

One Person Owns It

I mentioned the wine problem earlier where a group of wine tasters tends to conform to the first or strongest opinion. When they separately analyze the same wine, the variance in answers is much higher.

So the thought with session replays is that if you have just one person owning the process, you avoid this sort of opinion swaying. Though this person also needs to be aware of their own cognitive biases, lest the whole optimization team absorb those biases into its insights.

Here’s how John Hayes, Head of Marketing and Product at MyTennisLessons, approaches it:

John Hayes:

“When analyzing session replays we have found that it works best when one person takes the lead.

It is important however, that beforehand the team discusses what we are looking to ultimately glean from the sessions. Ideally, the person to churn through a few hundred sessions was not fully ingratiated in the design and development process of the pages in question as to avoid bias.

From there they will be looking for glaring errors, worrying inconsistencies or reassuring consistencies.

Reconvening with copious notes, cherry picked session examples, and logical conclusions already drawn allows us to have intelligent conversations around the sessions without too many cooks in the kitchen.”

Similarly, you could have 2-3 people view an equal number of videos separately to mitigate the bias of one person. This, however, takes more resources. But if you have the resources, it might be something to consider.

In that case, a project manager should collate and analyze the data and seek insights that are common.
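The collation step could be as simple as the sketch below, assuming each reviewer works independently and hands back a set of issue labels drawn from an agreed vocabulary. The reviewer names, the labels, and the two-reviewer threshold are hypothetical.

```python
from collections import Counter

# Hypothetical notes: the issues each reviewer flagged, recorded separately.
reviewer_issues = {
    "Ana": {"phone field friction", "back button after signup form", "unclear pricing"},
    "Ben": {"phone field friction", "back button after signup form"},
    "Chloe": {"phone field friction", "slow product images"},
}

def shared_issues(notes, min_reviewers=2):
    """Keep only the issues that at least `min_reviewers` people flagged independently."""
    votes = Counter(issue for issues in notes.values() for issue in issues)
    return {issue: n for issue, n in votes.items() if n >= min_reviewers}

common = shared_issues(reviewer_issues)
for issue, n in sorted(common.items(), key=lambda item: -item[1]):
    print(f"{issue}: flagged by {n} of {len(reviewer_issues)} reviewers")
```

Issues that only one person flagged aren’t necessarily wrong, but they are the ones most worth double-checking against analytics before they go into a hypothesis.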

Use Session Replays for Specific Goals

The assumption in this article so far has been that you are doing a sort of full conversion audit. Every page, every research method – intense and comprehensive.

But what if you’ve been optimizing for a while…Do you need to have a few team members watch a few hundred videos each month? Don’t you have a backlog of issues anyway?

(I don’t know the answers, I’m just asking the questions.)

In that case, why not use session replays when you have a specific page in mind? Better yet, when you have a specific goal or experience you’re hoping to optimize. Here’s Rahul Jain, Product Manager at VWO, talking about that:

Rahul Jain:

“You can start from analysis of all the business goals that you have.

For instance you might want to look at all the session replays of visitors who did or did not convert a certain goal.

Secondly you can look at recordings of specific funnels, i.e. you should define entry, visited and exit pages to see those specific recordings to understand exact user journey. Also, it is super useful to look at session replays of visitors who are dropping off at a certain step in funnel.”

Alex Harris, too, mentioned that he keeps it task based. As an example, if while doing funnel testing his team sees an issue or drop-off on a step, they investigate with session replay videos for further insight into why that might be happening.
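Most replay tools expose funnel filters in their interface, but the underlying logic is simple enough to sketch. The example below assumes you can export each recording as an ordered list of visited URLs; the funnel steps, the session data, and both helper functions are hypothetical.

```python
# Hypothetical checkout funnel, in order.
FUNNEL = ["/cart", "/checkout/shipping", "/checkout/payment", "/order-complete"]

# Hypothetical export: recording ID -> pages visited, in order.
sessions = {
    "rec-0201": ["/", "/cart", "/checkout/shipping", "/checkout/payment", "/order-complete"],
    "rec-0202": ["/", "/product/42", "/cart", "/checkout/shipping"],
    "rec-0203": ["/cart", "/checkout/shipping", "/cart"],
    "rec-0204": ["/", "/product/42"],
    "rec-0205": ["/cart", "/checkout/shipping", "/checkout/payment"],
}

def furthest_step(pages, funnel):
    """Return the index of the last funnel step reached in order, or -1 if none."""
    step = -1
    for page in pages:
        if step + 1 < len(funnel) and page == funnel[step + 1]:
            step += 1
    return step

def dropped_at(all_sessions, funnel, step_name):
    """List recordings whose journey through the funnel ended at `step_name`."""
    target = funnel.index(step_name)
    return [rid for rid, pages in all_sessions.items()
            if furthest_step(pages, funnel) == target]

to_watch = dropped_at(sessions, FUNNEL, "/checkout/shipping")
print(to_watch)
```

The result is a short watch list for one specific question ("why do people leave at shipping?") instead of a few hundred undifferentiated videos.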

Codify and Look at Variance and Patterns

Similar to attitudinal surveys, one can quantify the qualitative data that session replay videos provide. TryMyUI Stream already seeks to do this in a way with their flagging of frustration signals.

But if you’re diligently tagging issues that come up, you can do it, too. The importance of this is that you can see the numbers in a more objective way, finding frequency and variance. You can possibly spot random error in how the data was collected or how the team members viewed the videos.

Here’s Mlodinow writing in The Drunkard’s Walk about the application of this to wine judging:

Leonard Mlodinow:

“The key to understanding measurement is understanding the nature of variation in data caused by random error.

Suppose we offer a number of wines to fifteen critics or we offer the wines to one critic repeatedly on different days or we do both. We can neatly summarize the opinions employing the average, or mean, of the ratings.

But it is not just the mean that matters: if all fifteen critics agree that the wine is a 90, that sends one message; if the critics produce the ratings 80, 81, 82, 87, 89, 89, 90, 90, 90, 91, 91, 94, 97, 99, and 100, that sends another.”

In simplest terms, if everyone sees the same problem on every session replay video, it probably exists independent of random error in the data. Prioritize fixing that issue over the ones that weren’t as common.
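As a rough illustration of looking at both the mean and the spread, the sketch below assumes each reviewer reports the share of their recordings in which they flagged a given issue. The issue names and rates are made up, and using the population standard deviation is just one reasonable choice for a handful of reviewers.

```python
from statistics import mean, pstdev

# Hypothetical data: share of each reviewer's recordings where the issue was flagged.
flag_rates = {
    "phone field friction": [0.42, 0.40, 0.44],
    "confusing New Products page": [0.05, 0.38, 0.11],
}

def summarize(rates):
    """Return (mean, standard deviation) of the flag rate per issue."""
    return {issue: (mean(values), pstdev(values)) for issue, values in rates.items()}

summary = summarize(flag_rates)
for issue, (avg, spread) in summary.items():
    print(f"{issue}: mean {avg:.2f}, std dev {spread:.2f}")
```

A high mean with a small spread suggests the reviewers are seeing the same thing. A large spread, as in the second hypothetical issue, suggests the tag is being applied inconsistently and the definition may need tightening before the number is trusted.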

On a related note, Justin Rondeau, Director of Optimization at DigitalMarketer, talks about targeting specific actions and digging into the insights from there. Though this isn’t easy or quick, it’s valuable because of the segmentation of insights:

Justin Rondeau:

“Right now session recordings are in their infancy, right now we are just getting raw data and requiring the individual to interpret the data. In order to systematize the process more and get less subjective data recording technologies need to invest in behavioral triggering and trend identification.

Simply put, you’re never going to get away from the subjective nature of analysis. It doesn’t matter if you have a solo person spearheading the project or splitting up recordings across the team.

Here is my approach to session recordings:

Set your recording to trigger if and only if a particular action was taken on the page, e.g., button click.

You could also set a second recording up that is only if people DO NOT take that action. Though this entirely depends on what you’re trying to learn.

By setting recording criteria based on behavior, you are going to have recordings that matter rather than hundreds of recordings of a bounced visit.

When you have the recordings don’t forget to segment! Just like any analysis you’d do in Google Analytics you need to dig into the technology’s filters to find the recordings that matter. Your goal is to get rid of the garbage and to save your time.

Another cool thing I see coming is a recording trend report, currently in Alpha from TruConversion. The goal for this is to find patterns in the recordings and significantly cut down analysis time.

Even without that trend feature, you can significantly cut down on your session recording analysis time by not just triggering based on page view and with advanced filters during the review process.”
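If your tool can’t trigger on behavior at capture time, the same split can be approximated after the fact. This is a minimal sketch assuming each recording can be exported as a list of event names; the event labels, the session data, and the `split_by_action` helper are hypothetical.

```python
# Hypothetical export: recording ID -> ordered event names.
sessions = {
    "rec-0301": ["pageview", "click:add-to-cart", "pageview"],
    "rec-0302": ["pageview"],  # a bounced visit
    "rec-0303": ["pageview", "scroll", "click:add-to-cart"],
    "rec-0304": ["pageview", "scroll", "pageview"],
}

def split_by_action(all_sessions, action):
    """Drop bounces, then separate recordings by whether `action` occurred."""
    took, skipped = [], []
    for rid, events in all_sessions.items():
        if len(events) <= 1:
            continue  # a single pageview has nothing to watch
        (took if action in events else skipped).append(rid)
    return took, skipped

took_action, did_not = split_by_action(sessions, "click:add-to-cart")
print("took action:", took_action)
print("did not:", did_not)
```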

Conclusion

Session replays, like any qualitative information, shouldn’t be a crutch to lean on. Rather, they should illuminate the customer experience by showing users interacting in a more natural way.

Still, analyzing these videos proves difficult. Introducing bias is easy, and it only multiplies as more team members offer their own takes, especially when you don’t have a documented process.

Therefore, it’s essential to systematize this in some concrete way if you hope to get value out of session replays.

We mentioned a few ways above, but I’m sure you could build out your own process. The point is, you should seek to eliminate as much ambiguity as possible, and make sure everyone is judging by the same rules/criteria.