How To Visualize A/B Test Results

You may be wondering, “why should I make my own visualization of my A/B test results?”

Because the A/B testing tools in the market already provide you all the necessary tables and graphs, right? They tell you when an A/B test is significant and what the expected uplift is. So why bother?

The thing is, these tables and graphs are comprehensible when you – a data driven analyst – take a look at them. However, if you’re the manager that bumps into them as a screenshot in an email or presentation document, then they aren’t that clear at all.

Moreover, you should always analyze your A/B tests in your analytics tool instead of in your A/B testing tool. The problem is that your analytics tool doesn’t tell you whether your A/B test was a significant success. It doesn’t provide the statistical significance (p-value), power level, or error margins. You need to calculate and visualize these yourself.

Finding a Clear Way To Visualize A/B Test Results

Problem is, it’s tricky coming up with an A/B test visualization that everyone gets. However, we’ve gone through the process and have come up with a clear way to do A/B test visualizations.

If you follow along, it will hopefully result in a graph that convinces everyone of your awesome job. You’ll be able to convince people more easily that you need more resources for A/B testing. A good graph doesn’t just look nice; it helps you get the message across and boosts the credibility of your A/B test results.

1st Try: Why Reinvent The Wheel?

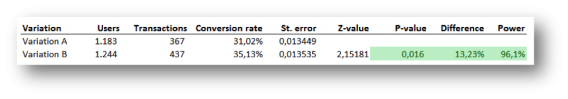

In the first couple of months when we started analyzing the results of A/B tests ourselves (in a spreadsheet), we used no graph at all. We just presented a table with the main results. Being good statisticians we included the standard error, the z-value, the p-value and the power. So at least we knew for sure that no one would understand it☺.

Oh well, we did use color coding; green meant we have a winning result!

Next, we added a graph to bring these boring and difficult numbers to life.

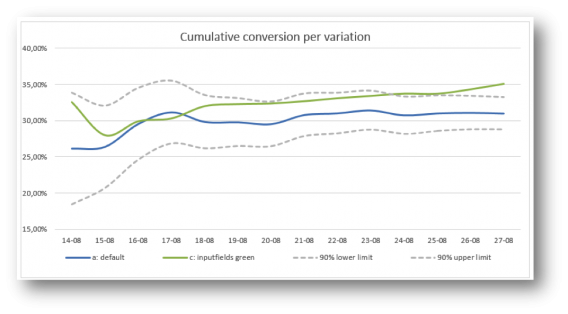

We started by copying what every other A/B test tool reports: a graph with the conversion rate per day, accumulated over time. We did add two lines representing the 90% lower and upper limits of the default variation (computed as the conversion rate of the default variation plus or minus the critical z-value times the standard error).
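The limit lines described above can be sketched in a few lines of Python. This is a minimal illustration, not the spreadsheet formula itself: it assumes the usual normal approximation for the standard error of a proportion and a two-sided 90% interval (critical z-value of about 1.645). The function name `conversion_interval` and the example numbers are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def conversion_interval(users, conversions, confidence=0.90):
    """Return (rate, lower, upper) for one variation.

    Assumes the normal approximation: SE = sqrt(p * (1 - p) / n).
    """
    rate = conversions / users
    std_error = sqrt(rate * (1 - rate) / users)
    # Two-sided critical z-value (assumption): about 1.645 for 90%.
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return rate, rate - z_crit * std_error, rate + z_crit * std_error


# Hypothetical default variation: 10,000 users, 3,000 transactions.
rate, lower, upper = conversion_interval(users=10_000, conversions=3_000)
print(f"rate={rate:.2%}, lower={lower:.2%}, upper={upper:.2%}")
```

Recomputing these three numbers on the accumulated totals for each day gives the conversion line and the two limit lines around it.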

The main problem we ran into was the simple fact that conversion over time doesn’t really give much insight.

This graph tells you whether there’s a significant and stable effect, but that’s only interesting to the web analyst. Others are, of course, only interested in the main conclusion: does this A/B test have a significant winner and could we make a big positive impact on our KPIs when we implement it? This graph didn’t give a clear answer to that question.

2nd Try: Cut to the Chase

We wanted to get rid of the graph with the conversion rate over time and only show the main conclusion. This meant clearly visualizing the last data points of the graph: the conversion rates of variation A and of variation B.

In addition, the 90% lower and upper limit (the confidence interval) of both variation A and variation B were needed to see whether the difference is significant.

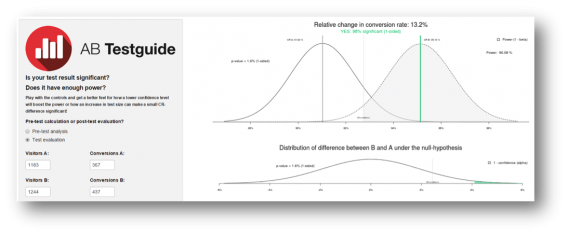

Basically, we wanted to replicate what statisticians use to visualize a test: two bell curves, a critical value and a shaded area (as used in the A/B test calculator of ABtestGuide or CXL’s AB test calculator), but more comprehensibly presented. Problem is, this bell curve visualization won’t get the message across in a persuasive and clear way to your company. It simply looks too difficult:

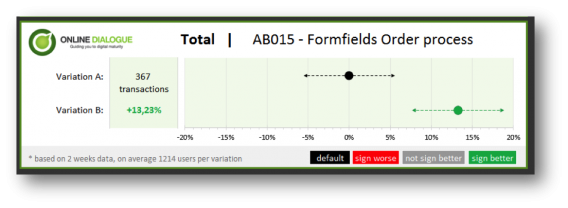

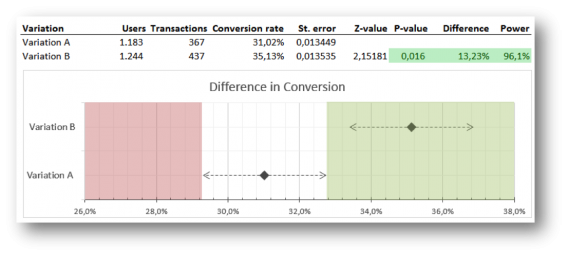

This is what we came up with to overcome that problem:

What you see are two clear points representing the conversion rate of variation A and B.

The dotted lines are the confidence intervals of them both. If the conversion rate of variation B lies outside the reach of the confidence interval of A, variation B is significantly better. The green shaded area accentuates this. A dot in the red area would mean that the variation is performing significantly worse.
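The shading rule just described is simple enough to write down as code. The sketch below is our own illustration of that rule, not the Excel logic: it assumes a two-sided 90% interval around variation A and uses the hypothetical labels `"green"`, `"red"` and `"grey"` (the neutral label is an assumption).

```python
from math import sqrt
from statistics import NormalDist


def classify_variation(users_a, conv_a, users_b, conv_b, confidence=0.90):
    """Compare B's conversion rate with A's confidence interval."""
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    se_a = sqrt(rate_a * (1 - rate_a) / users_a)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    if rate_b > rate_a + z_crit * se_a:
        return "green"  # B lies above A's upper limit
    if rate_b < rate_a - z_crit * se_a:
        return "red"    # B lies below A's lower limit
    return "grey"       # B lies inside A's interval: no clear winner


# Hypothetical data: 10,000 users per variation.
print(classify_variation(10_000, 3_000, 10_000, 3_200))
```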

One problem: the graph wasn’t fully automated.

The graph could easily be refreshed by changing the users and transactions for each variation (and thereby automatically changing the conversion rates, standard errors, z-value, p-value and power), but the shaded green and red areas had to be adjusted by hand.

No biggie if you’re running few tests, but if your job is analyzing more than 10 A/B tests a week, this becomes really annoying. Another minor issue was that the graph still looked too nerdy. It looked like something you had to draw for your mathematics homework ☺.

3rd Try: “You’re Doing It All Wrong!”

On a Friday afternoon, we sat down with the data scientist who programmed the R calculations of the ABtestguide calculator and showed him our visualization. We consulted him because our calculation of power wasn’t accurate and we didn’t know what was wrong with it (it took some time, but we found the small error in the formula).

However, the conversation soon turned to the visualization at hand. According to him – and probably the whole scientific community – our visualization wasn’t scientifically correct ☹.

Fixing Our Inaccuracy

Basically, we displayed the two bell curves in a one-dimensional graph and used this to verify whether the A/B test was significant or not. However, the correct way would have been to show the graph of the distribution of a difference between variation B and variation A (the lower graph of the ABtestguide calculator).

This distribution can be calculated by computing the standard error of a difference (take the square root of the sum of the squared standard errors of variation A and variation B) and using it to calculate one overall confidence interval.
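As a rough sketch of that calculation: the function below combines the two standard errors into the standard error of a difference and builds one interval around the observed difference. The two-sided 90% level, the two-sided p-value, the function name `difference_test`, and the example numbers are all assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def difference_test(users_a, conv_a, users_b, conv_b, confidence=0.90):
    """Test the difference B - A using the standard error of a difference."""
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    se_a = sqrt(rate_a * (1 - rate_a) / users_a)
    se_b = sqrt(rate_b * (1 - rate_b) / users_b)
    # Root of the sum of the squared standard errors.
    se_diff = sqrt(se_a ** 2 + se_b ** 2)
    diff = rate_b - rate_a
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_value = diff / se_diff
    # Two-sided p-value (assumption; a one-sided test would halve it).
    p_value = 2 * (1 - NormalDist().cdf(abs(z_value)))
    return {
        "diff": diff,
        "se_diff": se_diff,
        "lower": diff - z_crit * se_diff,
        "upper": diff + z_crit * se_diff,
        "z_value": z_value,
        "p_value": p_value,
    }


# Hypothetical data: 10,000 users per variation.
result = difference_test(10_000, 3_000, 10_000, 3_200)
print(result)
```

If the interval from `lower` to `upper` excludes zero, the difference is significant at the chosen level.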

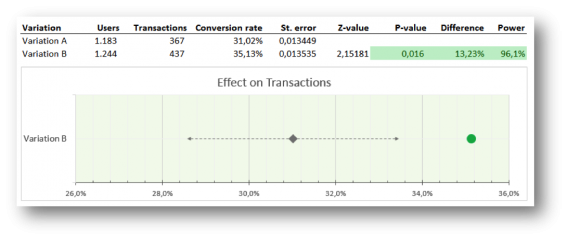

Great, now the calculation was correct, but consequently it had a huge impact on our visualization. We no longer could present a single row for each variation, but we had to merge the two to represent the distribution of a difference between variation A and B, so we did:

Here’s what this graph can tell you: if the conversion rate of variation B lies within the confidence interval, this variation isn’t significantly better; if it lies outside the confidence interval, it is, and the dot turns green (positive) or red (negative).

Now Our Graph and Table Are Automated

The problem with our previous visualization was the lack of automation. This graph and table solved that. We just had to import the data through the analytics API (the users and transactions per variation) and bang…the results and graph were ready!

That seemed like a dream come true, but when we started using this visualization with a couple of our clients we soon noticed that we still had to explain (even more than before) what they were seeing in this graph…

Our Graphs Still Weren’t Fully Communicating Results Across Teams

The graph becomes especially confusing with multiple variations in the A/B test. A test with a default and 3 variations would show 3 rows with grey diamonds and grey dotted lines (the confidence intervals) surrounding them that were all just slightly, barely noticeably, different, because the standard errors of a difference between each variation and the default are usually roughly the same.

People were puzzled as to why there were only 3 lines and not 4: what happened to the default variation? And every row looked basically the same, so it felt like the impact of winners wasn’t that big.

Hmm…we just woke up from our perfect dream. Back to the drawing board.

4th Try: Stepping Back in History, But Now Automated!

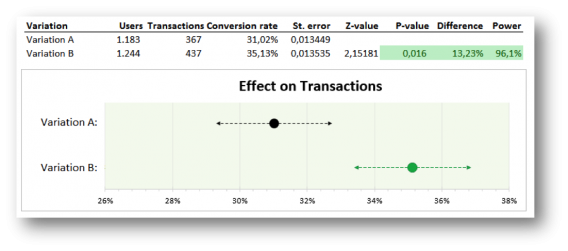

So, we went back to our visualization of our 2nd try – one row for every variation. We know this might not be the perfect scientifically correct way of displaying results, but it is understandable and that was our main goal. We did keep the new design and sharpened it a bit by using dots instead of geeky diamonds. And by looking into all the possible features of graphs in Excel we came up with a way to automate this kind of visualization too…yeah!

Before we went ahead and used this visualization with our Online Dialogue clients (and confused them once more with a different A/B test visualization), we consulted our own colleagues.

We found out that the graph was very clear to all participants in our A/B test teams, but stakeholders, managers and other important people, not directly involved in testing, could still have many questions and/or remarks:

- “Why is the conversion rate in this graph thirty something percent? I thought we had a 3% conversion rate on our digital channels?” Naturally, the graph is based on the actual conversion rate through that page. You know that it’s different for every page and that your sales funnel can have a thirty something percent conversion rate, but they don’t.

- “I just want to know how big the uplift is.” The graph tells you that there is something green going on, so something ought to be good, but what’s the exact effect? They can’t find the 13.23% uplift. They have to read the table to get all the necessary information.

- And if they do notice the 13.23% uplift, you might get: “Wow, 13% more sales! You’re my hero – let’s put it live now!”. They should, but you’d have to explain it was an A/B test on a page of the website that only leads to 367 conversions a week, not the 8,000 conversions you have on the whole website. So, yes it had 13.23% uplift through that part of the website, but it probably will only have an effect of 1% or so on total conversions.
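The arithmetic behind that last remark is worth spelling out. Here is a small sketch using the numbers from the example above; it assumes that the 8,000 site-wide conversions are also counted per week and that the uplift applies to all conversions through the tested page. The function name `sitewide_uplift` is made up for illustration.

```python
def sitewide_uplift(page_conversions, total_conversions, page_uplift):
    """Translate an uplift on one page into extra conversions site-wide."""
    extra = page_conversions * page_uplift
    return extra, extra / total_conversions


# 367 conversions through the tested page, 8,000 on the whole website,
# 13.23% uplift measured in the test.
extra, share = sitewide_uplift(367, 8_000, 0.1323)
print(f"{extra:.1f} extra conversions, {share:.2%} site-wide uplift")
# prints "48.6 extra conversions, 0.61% site-wide uplift"
```

Under these assumptions the 13.23% uplift shrinks to well under 1% of total conversions, which is the number a manager actually cares about.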

5th Try: We’re Getting Closer Baby

These questions and remarks were taken care of in our next (and so far final) visualization. Besides a visual update to make this visualization client and Online Dialogue PR friendly we made 2 major changes:

- We decided to change the x-axis so it no longer reports the conversion rate through that page, but the relative difference the variation makes. So the default is set at 0% difference, while the variation may bring a certain increase (or decrease).

- We put the number of conversions of the default in front of the default graph row. For every variation we added the relative change in conversion rate in front of the graph. We also automatically color-coded the difference: when the difference in conversion rate is significantly better the number turns green, when the difference is significantly worse, the number turns red.

With this visualization you will be able to see the uplift and the expected impact immediately. We also added the number of test weeks and the average population per variation, so the data analyst can still feel confident about the results. The analyst could also easily explain that with 90% certainty the increase in conversion after implementation will be somewhere between 7.5% and 19%.
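One plausible way to get a row on such a relative axis is to divide the variation's own confidence interval by the default's conversion rate. The sketch below does that; it is an assumption about how the numbers could be derived, not necessarily what the Excel template does, and the name `relative_row` and the example data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def relative_row(users_a, conv_a, users_b, conv_b, confidence=0.90):
    """Return (uplift, lower, upper) of B relative to default A."""
    rate_a = conv_a / users_a
    rate_b = conv_b / users_b
    se_b = sqrt(rate_b * (1 - rate_b) / users_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # The default sits at 0%; B is expressed relative to A's rate.
    uplift = rate_b / rate_a - 1
    half_width = z_crit * se_b / rate_a
    return uplift, uplift - half_width, uplift + half_width


# Hypothetical data: 10,000 users per variation.
uplift, lower, upper = relative_row(10_000, 3_000, 10_000, 3_300)
print(f"uplift={uplift:.2%}, between {lower:.2%} and {upper:.2%}")
```

Note that this treats the default's rate as fixed; using the standard error of a difference instead would give a somewhat wider interval.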

We now think this visualization offers enough information to convince the manager and the analyst – so we got rid of the table (which is still there when the analysts in the team open the Excel of course – but it’s removed from the PowerPoint, result email and/or PDF).

The Online Dialogue clients are happy with this new visualization and at Testing.Agency we already hired a developer to automate this visualization based on data from the Google Analytics API, but we think there’s still room for improvement.

Conclusion: What Are Your Thoughts?

Though A/B testing tools provide graphs and charts, it’s important to create A/B test visualizations the whole team understands. We’ve gotten to the point where we’re conveying our results clearly, but we’d like to get even better at it.

Something we know we want to do is to give way more focus to the real possible impact once you implement a winning variation on your website. How many conversions, or how much revenue, will it add? We already know that not every page reaches all visitors and therefore not all conversions.

Moreover, sometimes tests aren’t run on 100% of the population on that page. Consequently, this makes the reported number of transactions in the default less important – and possibly even confusing – to managers. They just want to know the number of extra conversions every month once this new variation is implemented and what that means for the uplift of total revenue. We may need that in our visualization.

So, according to you, what else is missing? What would you change?

Thank you for reading about our quest. Join in by adding your feedback below! You can download our Excel template by clicking here.